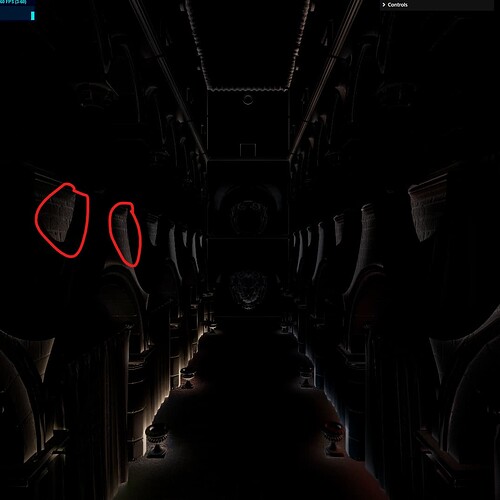

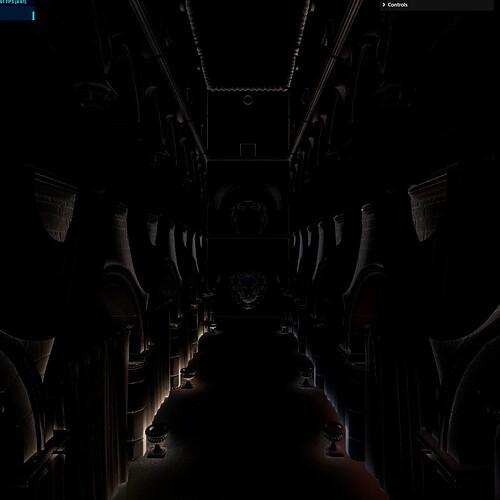

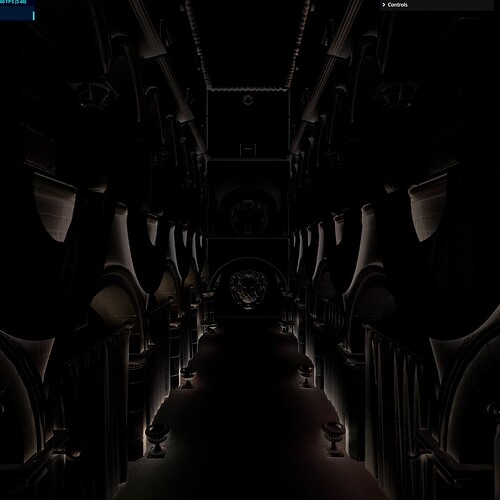

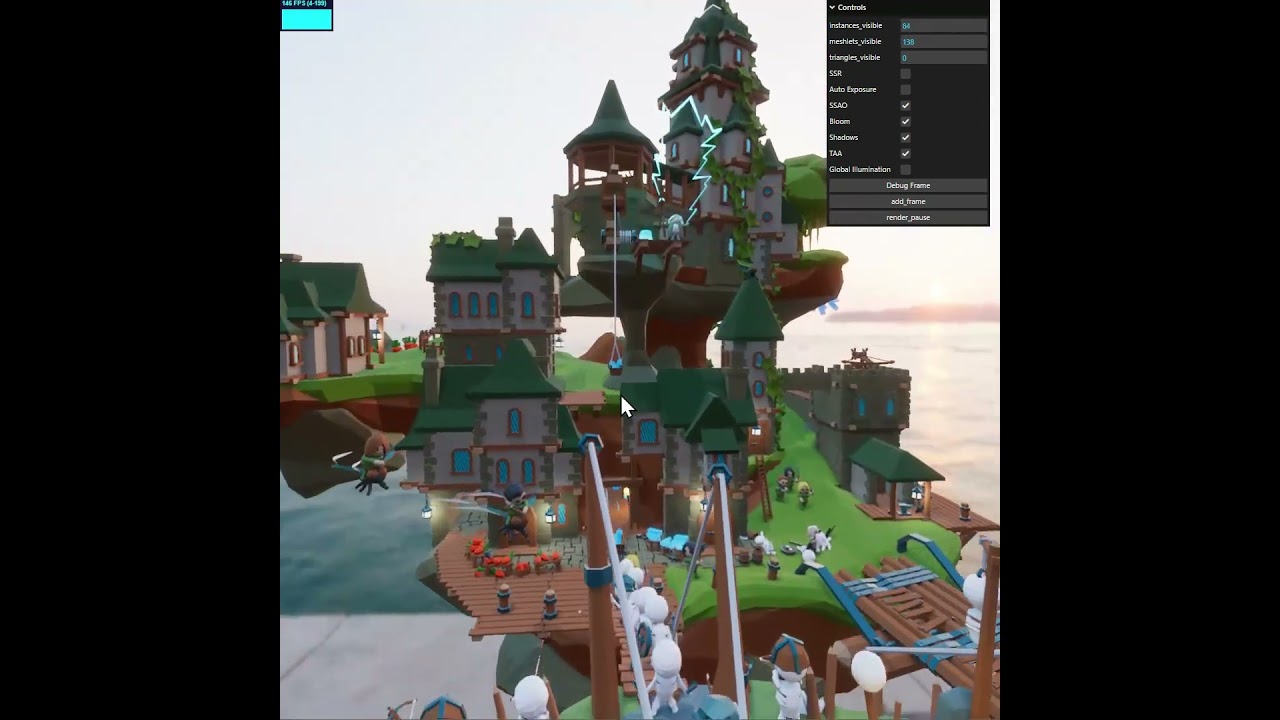

First pictures (taken with Shade)

SSGI: On

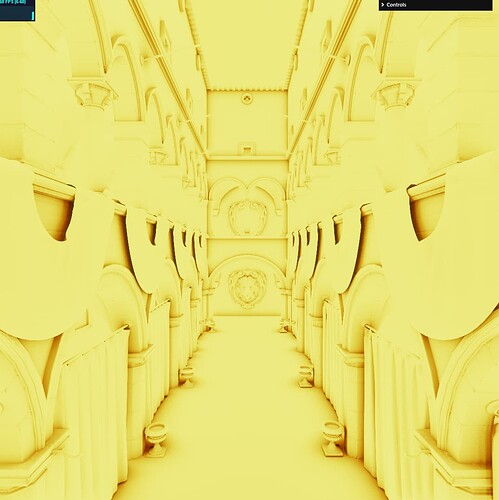

SSGI: Off

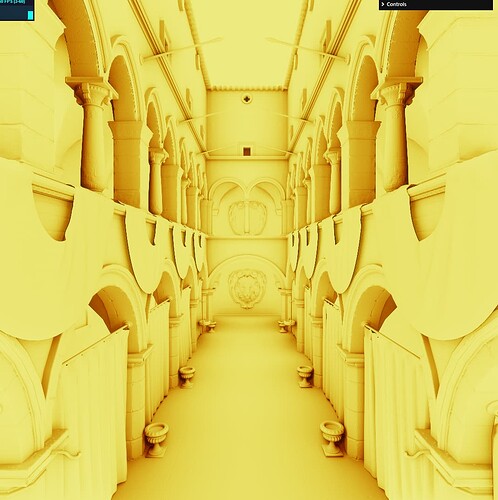

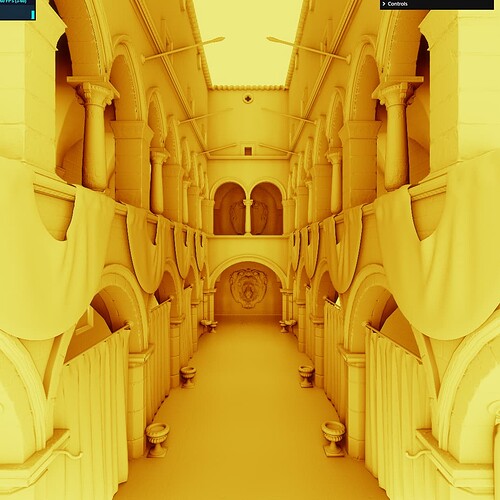

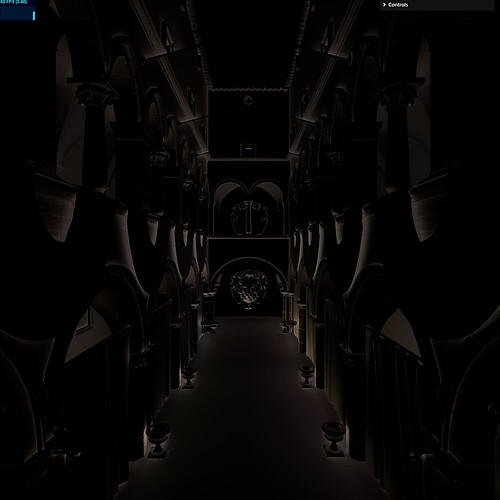

a few close-ups

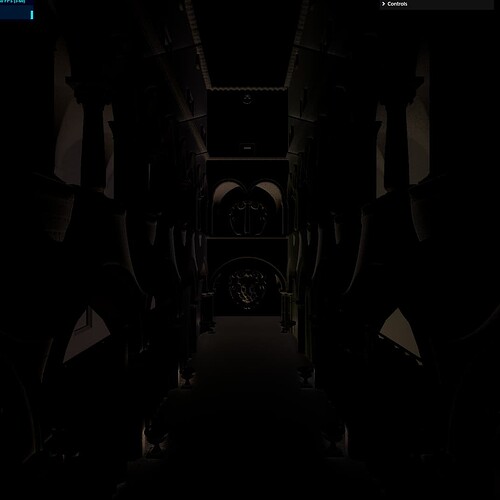

just the diffuse SSGI part

Global illumination is something of a holy grail of 3D graphics. With the advent of RTX (ray tracing hardware / APIs), this is slowly becoming a practical reality.

However, we’re still not there. Why? Because baked GI takes an enormous amount of memory and is mostly static, whereas dynamic GI is very compute heavy.

There are a number of tricks, such as light probes and light maps, as well as various denoising techniques in the stochastic domain.

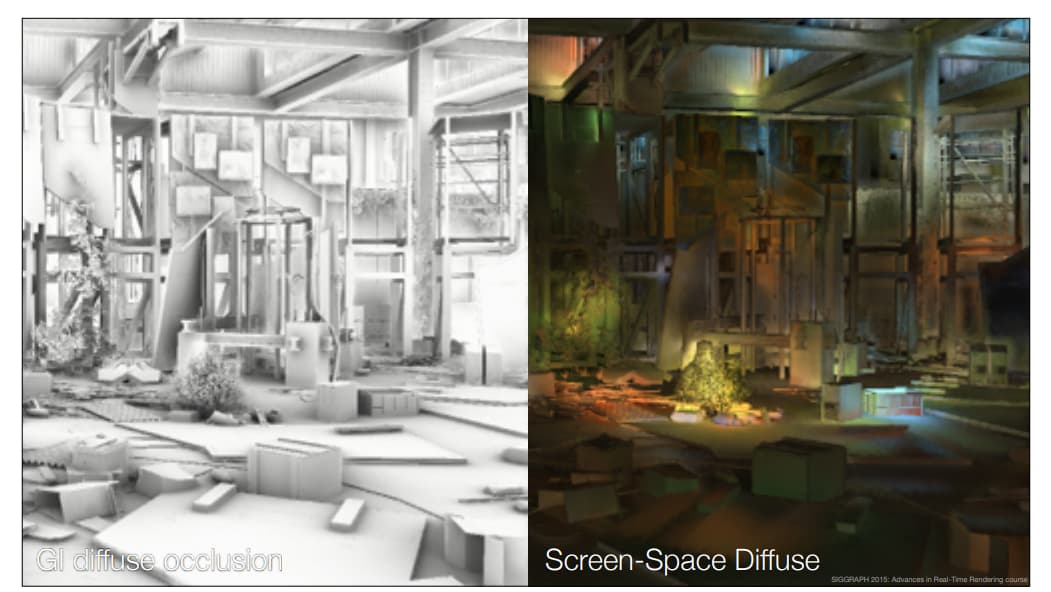

Let’s forget about GI for a moment. Before RTX became a reality, when we wanted to get something like GI, we went for screen-space techniques, such as SSAO and SSR.

In recent years, screen-space techniques have seen less interest, but they are still highly desirable, especially because of their predictable performance costs and ease of integration. You don’t need sophisticated baking tools either; it’s all just post-processing.

So, folks at Unigine came up with a logical extension to that - SSRTGI.

What if we, like, do ray traced GI, but, like, in Screen-Space?..

I remember there was a lot of fuss when @0beqz came out with his realism-effects.

I’ve been curious about the idea ever since reading about how the folks at Remedy exploited SSAO to get near-field GI in Quantum Break.

So I figured I’d give it a try as well.

I shared the pictures at the start, but there are a few issues with the whole SSGI concept.

Mainly, the SSAO technique is a hack. It’s a good hack for visibility, but not for irradiance. You can’t do a proper gather. The best you can do is integrate arcs during horizon search, but there is no visibility information in there, so in the end you get something that is very leaky.

To remind you, this is the source image:

Here’s what we get if we just gather samples from SSAO as horizons tighten.

If we integrate arcs as we tighten the horizons, we can do much better:
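To make the "integrate arcs" idea a bit more concrete, here is a minimal sketch of a single horizon march over a 1D heightfield. It is a flatland (2D) simplification and not the code behind the screenshots: the function name `gather_slice`, the unit texel spacing, and the receiver normal pointing straight up are all assumptions made for illustration. Each time a sample raises the horizon, the newly covered arc is assumed to be lit by that sample, and the arc is weighted by the cosine term integrated over it.

```python
import math

def gather_slice(heights, radiance, i, direction, max_steps):
    """March from texel i along one direction (+1 or -1) of a 1D heightfield.

    Assumptions (illustrative only): unit spacing between texels and a
    receiver normal pointing straight up, so the cosine term for a direction
    at elevation angle t above the tangent plane is sin(t).
    """
    horizon = 0.0  # current horizon elevation, in radians
    total = 0.0
    for step in range(1, max_steps + 1):
        j = i + direction * step
        if j < 0 or j >= len(heights):
            break  # walked off the buffer: nothing to gather
        elevation = math.atan2(heights[j] - heights[i], step)
        if elevation > horizon:
            # The arc [horizon, elevation] is newly covered by sample j.
            # Integral of sin(t) dt over that arc = cos(horizon) - cos(elevation).
            total += radiance[j] * (math.cos(horizon) - math.cos(elevation))
            horizon = elevation
    return total

# A bright, short sample hidden below the horizon of a taller, dim one
# contributes nothing; only the sample that raised the horizon is gathered.
print(gather_slice([0.0, 2.0, 1.0], [0.0, 1.0, 100.0], 0, +1, 2))
```

Note that the only test a sample has to pass is "does it raise the horizon?". Nothing checks whether the light arriving at that sample, or the path between it and the receiver outside the slice, is actually unobstructed.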

But there’s still a problem. Here’s just one example:

The arch is gathering light that it’s not even supposed to see, as it’s “behind” the curtain.

If we discard surfaces behind and those facing away from the sample, we get this:

This is about the best we can get with SSAO techniques. The issue here is that we have no occlusion information for individual irradiance samples.
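The "discard surfaces behind and those facing away" test can be sketched roughly as below. This is a plain Python illustration rather than shader code, and the name `sample_weight`, the tuple-based vectors, and the choice to return the receiver's cosine term as the weight are assumptions of this sketch, not something taken from Shade.

```python
import math

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def sample_weight(p, n, q, nq):
    """Weight for light travelling from sample q (normal nq) to receiver p (normal n).

    Returns 0.0 when the sample is rejected. Normals are assumed unit length.
    """
    d = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
    dist = math.sqrt(dot(d, d))
    if dist == 0.0:
        return 0.0
    w = (d[0] / dist, d[1] / dist, d[2] / dist)  # direction receiver -> sample
    cos_receiver = dot(n, w)    # <= 0: sample is behind the receiver's surface
    cos_sample = -dot(nq, w)    # <= 0: sample faces away from the receiver
    if cos_receiver <= 0.0 or cos_sample <= 0.0:
        return 0.0
    return cos_receiver  # Lambert cosine term at the receiver

# Floor point looking at a wall that faces it: accepted.
print(sample_weight((0, 0, 0), (0, 1, 0), (1, 1, 0), (-1, 0, 0)))
# Same wall position, but facing away: rejected.
print(sample_weight((0, 0, 0), (0, 1, 0), (1, 1, 0), (1, 0, 0)))
```

Both checks are purely local to the two surfaces. Neither of them knows whether something else, like the curtain, sits in between.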

The highlighted areas get light from the floor, but looking at the source image it’s obvious that the direction towards the floor is occluded by the curtain

So, can we do better? Yes, we can. And the clue is the article by Unigine: we have to use screen-space ray tracing. The issue with that is that you’re tracing rays now. There is a reason why SSR (screen-space reflections) is an expensive technique, and for diffuse reflections it gets much more expensive still, as rough surfaces scatter rays more, so you get less ray coherence during the trace.

The beauty of the SSAO-based technique is that it’s relatively inexpensive, as you’re reusing depth and normal samples you already have and only take additional color samples. But the technique is flawed due to incorrect visibility.

That said, as with all screen-space techniques, both ray-traced and AO-based approaches suffer from the same problem of only being able to see what’s on the screen.
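For a feel of what "tracing rays in screen space" means, here is a deliberately tiny sketch: a fixed-step march against a single row of a depth buffer. Everything about it is a simplifying assumption for illustration (orthographic projection, one row instead of a full image, a constant step, a made-up `thickness` parameter, and the name `trace_screen_space`). Real implementations march in projected space and usually add acceleration structures or refinement steps.

```python
import math

def trace_screen_space(depth, x0, z0, dx, dz, max_steps=64, thickness=0.5):
    """March a ray across one row of a depth buffer.

    depth[px] is the depth of the visible surface at pixel px (smaller means
    closer to the camera). The ray starts at pixel coordinate x0 and depth z0
    and advances by (dx, dz) per step. Returns the pixel index that was hit,
    or None if the ray leaves the screen or runs out of steps.
    """
    x, z = x0, z0
    for _ in range(max_steps):
        x += dx
        z += dz
        px = int(math.floor(x))
        if px < 0 or px >= len(depth):
            return None  # off-screen: the depth buffer has nothing to say
        surface = depth[px]
        # A hit means the ray is at or just behind the visible surface.
        # Surfaces are assumed to be `thickness` deep, since the buffer
        # does not store what lies behind them.
        if surface <= z <= surface + thickness:
            return px
    return None

# A "curtain" at pixel 4 (depth 3) in front of a back wall (depth 10).
row = [10.0, 10.0, 10.0, 10.0, 3.0, 10.0, 10.0, 10.0]
print(trace_screen_space(row, 0.5, 3.2, 1.0, 0.0))  # grazes the curtain
print(trace_screen_space(row, 0.5, 5.0, 1.0, 0.0))  # passes behind it, exits the screen
```

Every step is a depth fetch, and every ray needs its own march, which is where the cost comes from. The second call also shows the shared limitation: once the ray goes behind something or leaves the screen, there is simply no data.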

To highlight this issue with the AO-based approach, consider the fact that SSAO should be used for near-field phenomena, basically “small-scale” things. Most engines specify the AO radius in world-space units to keep results visually consistent (detail doesn’t appear/disappear as you move the camera), and that radius is typically around 1 m. Here’s what you get with that:

If we crank it up to 2 meters:

5 meters:

10 meters:

It might look “visually pleasing”, but it’s all kinds of wrong, and incredibly distracting as, unless you’re looking at very big things from far away, AO is gathering things off-screen. I.e. - it’s making stuff up.
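A quick back-of-the-envelope sketch shows how fast a world-space radius outgrows the screen. It assumes a standard perspective camera described by a vertical field of view; the function name `radius_in_pixels` and the example numbers (60° FOV, 1080 px tall, surface 5 m away) are made up for illustration.

```python
import math

def radius_in_pixels(radius_m, depth_m, fov_y_deg, screen_height_px):
    """Project a world-space radius at a given view depth to a pixel radius."""
    # Focal length expressed in pixels for a perspective projection.
    focal_px = 0.5 * screen_height_px / math.tan(math.radians(fov_y_deg) * 0.5)
    return radius_m * focal_px / depth_m

for radius in (1.0, 2.0, 5.0, 10.0):
    px = radius_in_pixels(radius, depth_m=5.0, fov_y_deg=60.0, screen_height_px=1080)
    print(f"{radius:>4} m radius at 5 m depth covers about {px:.0f} px")
```

Under those assumptions a 1 m radius covers roughly 187 pixels, while a 10 m radius works out to roughly 1870 pixels, which is more than the screen is tall. Most of that search area has no pixels behind it.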

Now, let’s do the same thing with the AO radius, but look at the irradiance gathered instead. First, the same 1m again:

2 meters:

5 meters:

10 meters:

What I mean to drive home here is that the small-scale irradiance that SSAO can pick up is truly “small”. I think it’s probably worth it, but only just. If you use a ray-traced technique instead, you’re probably going to get much better results, and for small-scale detail it’s going to be, again, probably just as good, because short-distance traces are cheaper and more reliable in screen-space than long-distance ones.

I don’t have a satisfying conclusion to all of this, except for my own thoughts and feeling of disappointment. It’s mostly hype at this point. AO is small-scale and inaccurate and tracing rays is expensive.

Disclaimers

- All screenshots are from Shade

- When I talk about “horizons” I refer to the HBAO/GTAO concept of a horizon.

- SSAO implementation here is GTAO

- This whole thing relies on a deferred rendering pipeline; specifically, you’ll need depth, normal and color buffers. If you’re going to do physically-based blending, you’ll need a roughness buffer as well.

- Technically, this article only goes into the diffuse part. The composited screenshots include SSR as well as an environment map sampled with bent normals, so it’s only a part of the overall system.

References:

- GodComplex/Tests/TestHBIL/2018 Mayaux - Horizon-Based Indirect Lighting (HBIL).pdf at f620f83028f1f131afbac8f2937516296135522e · Patapom/GodComplex · GitHub

- realism-effects/src/ssgi at main · 0beqz/realism-effects · GitHub

- SSRTGI: Toughest Challenge in Real-Time 3D

- https://advances.realtimerendering.com/s2015/SIGGRAPH_2015_Remedy_Notes.pdf