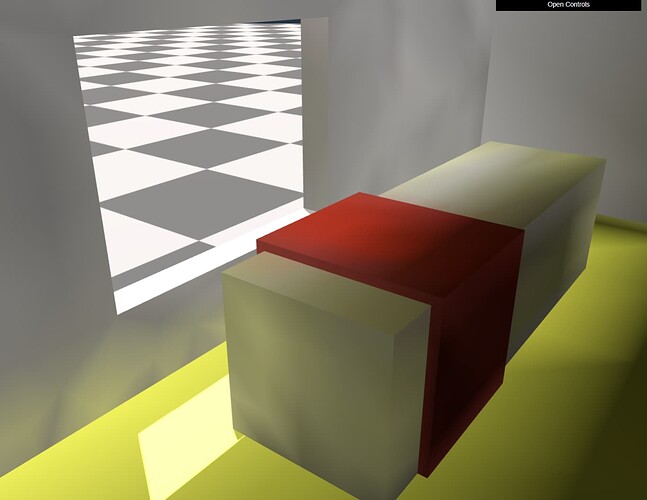

Someone said that a film must start with an explosion. This is not a movie, so here are some pictures instead for motivation:

[source]

[source]

[source]

What are we seeing here? The short answer is global illumination.

Global illumination is something we’ve been chasing for a long time.

Light maps

We’ve had light maps for a while now:

[source]

Raytracing

Recently we’ve been sold the promise of ray tracing, with the likes of DirectX Raytracing and its counterparts in Vulkan and other APIs. Much to everyone’s disappointment, that tech didn’t magically give us global illumination, although it did make our lives easier and made it possible to trace a handful of rays per pixel per frame.

Screen-space AO

As an honorable mention: around 2007, Vladimir Kajalin of Crytek introduced us to screen-space ambient occlusion (SSAO).

[source]

SSAO was so good, in fact, that it has been a staple of pretty much every game released since then and is considered a standard feature by now. However, it lacks the “global” part. Occlusion only comes from what is on screen, and worse still, only from what made it into the G-buffer, so anything off screen or hidden behind the front-most surface contributes nothing. There is no global contribution: it’s little more than a fancy trick that happens to look quite convincing to the eye.

One very important point to highlight here: SSAO, then and now, works by tracing rays. Those rays are traced (well, marched really) in screen space rather than against geometry, but it is ray tracing nevertheless. So that ray tracing we mentioned earlier? It has been helping us all along, even in areas most of us don’t think of as related to ray tracing.
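To make the “marching” concrete, here is a deliberately crude sketch of screen-space occlusion for a single pixel. It is not Crytek’s algorithm: the depth layout (linear view-space depth, larger meaning farther), the fixed set of screen-space directions, the step size and the `bias`/`radius` range check are all illustrative assumptions, and `screenSpaceAO` is a hypothetical name.

```typescript
// Crude screen-space ambient occlusion for one pixel (illustrative sketch only).
// Assumptions: `depth` holds linear view-space depth per pixel (larger = farther),
// stored row by row; all parameter defaults are arbitrary.
function screenSpaceAO(
  depth: Float32Array,
  width: number,
  height: number,
  px: number,
  py: number,
  directions = 8, // screen-space directions to march
  steps = 4, // samples per direction
  stepPixels = 2, // distance between samples, in pixels
  bias = 0.05, // ignore tiny depth differences (self-occlusion)
  radius = 1.0, // ignore occluders more than this far in front of the pixel (range check)
): number {
  const center = depth[py * width + px];
  let occluded = 0;
  for (let d = 0; d < directions; d++) {
    const angle = (2 * Math.PI * d) / directions;
    const dx = Math.cos(angle);
    const dy = Math.sin(angle);
    // March along this direction; the first sample that sits in front of the
    // center pixel (and passes the range check) blocks the whole direction.
    for (let s = 1; s <= steps; s++) {
      const sx = Math.round(px + dx * s * stepPixels);
      const sy = Math.round(py + dy * s * stepPixels);
      if (sx < 0 || sy < 0 || sx >= width || sy >= height) break; // left the screen
      const diff = center - depth[sy * width + sx];
      if (diff > bias && diff < radius) {
        occluded++;
        break;
      }
    }
  }
  return 1 - occluded / directions; // 1 = fully open, 0 = every direction blocked
}
```

Everything the sketch knows comes from the depth buffer, which is exactly the limitation described above: an occluder that is off screen or hidden behind the nearest surface simply does not exist for it.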

Light Probes

Around 2008-ish, a new kid appeared on the block: spherical harmonics! These are basically a set of mathematical functions for encoding directional data. We compute how much light, and of what color, is coming from each direction and record it using just a few floating point numbers per color channel. How many depends on the number of bands in the function: as few as 1 for a 1-band function, 9 for a 3-band function, and in general n² for n bands, all the way up to infinity.
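A minimal sketch of what “recording light from each direction in 9 numbers” looks like: projecting a directional function onto 3-band spherical harmonics and evaluating it back. It assumes the standard real spherical harmonics basis with its usual normalization constants and unit-length directions; the function names are hypothetical, and integrating over a Fibonacci sphere is just one convenient choice. A real baker would feed in rendered or ray-traced radiance rather than an analytic function.

```typescript
// Real spherical harmonics, 3 bands (l = 0..2) -> 9 basis values.
// Standard normalization constants; (x, y, z) must be a unit vector.
function shBasis3(x: number, y: number, z: number): number[] {
  return [
    0.282095, // l=0
    0.488603 * y, // l=1, m=-1
    0.488603 * z, // l=1, m=0
    0.488603 * x, // l=1, m=1
    1.092548 * x * y, // l=2, m=-2
    1.092548 * y * z, // l=2, m=-1
    0.315392 * (3 * z * z - 1), // l=2, m=0
    1.092548 * x * z, // l=2, m=1
    0.546274 * (x * x - y * y), // l=2, m=2
  ];
}

// Evenly spread unit directions (Fibonacci sphere), used as equal-weight integration points.
function fibonacciSphere(n: number): [number, number, number][] {
  const golden = Math.PI * (3 - Math.sqrt(5));
  const dirs: [number, number, number][] = [];
  for (let i = 0; i < n; i++) {
    const z = 1 - (2 * i + 1) / n;
    const r = Math.sqrt(1 - z * z);
    dirs.push([r * Math.cos(i * golden), r * Math.sin(i * golden), z]);
  }
  return dirs;
}

// Project one color channel of a directional function onto sh3:
// c_i = integral over the sphere of f(w) * Y_i(w).
function projectSH3(f: (x: number, y: number, z: number) => number, samples = 4096): number[] {
  const coeffs = new Array<number>(9).fill(0);
  const weight = (4 * Math.PI) / samples; // each sample covers an equal solid angle
  for (const [x, y, z] of fibonacciSphere(samples)) {
    const value = f(x, y, z);
    const basis = shBasis3(x, y, z);
    for (let i = 0; i < 9; i++) coeffs[i] += value * basis[i] * weight;
  }
  return coeffs;
}

// Reconstruct the value for a direction: a 9-term dot product with the basis.
function evalSH3(coeffs: number[], x: number, y: number, z: number): number {
  const basis = shBasis3(x, y, z);
  let sum = 0;
  for (let i = 0; i < 9; i++) sum += coeffs[i] * basis[i];
  return sum;
}
```

Each probe would hold one such 9-coefficient set per color channel, so 27 floats for RGB. Anything sharper than the 3 bands can represent (a small bright light, say) comes back blurred, which is usually fine for diffuse lighting.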

Let’s back up a bit for the sake of completeness. Setting spherical harmonics aside, the concept of recording the directional light contribution at a point in space is much older than 2008: it dates all the way back to 1976, when Blinn and Newell introduced environment mapping.

So what’s different about “light probes” compared to “environment maps”? In short: memory usage and sampling complexity.

Details

An environment map is a cube texture (one square image for each of the cube’s 6 sides) at some resolution. Let’s say something low, like 32 x 32 pixels per side: that alone already requires 32 * 32 * 6 = 6144 pixels to be stored. We can drop this to something smaller, like 4 x 4 pixels per side, which nets us 96 pixels, but at that point there is hardly any directional data left in there, and the directional resolution gets even coarser toward the middle of each face, further away from the cube’s edges.

How does this compare to 3-band spherical harmonics (from here on referred to as sh3)? They require just 9 numbers to encode. For comparison, a cube map would need to be 1 x 1 per side (6 numbers) to beat that; the next step up, a 2 x 2 cube map, already takes 24 numbers, about 2.7x more than sh3.
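The storage arithmetic above, per color channel, as a tiny sketch (the helper names are hypothetical):

```typescript
// Values stored per color channel for a cube map with res x res texels on each of its 6 faces.
function cubeMapValues(res: number): number {
  return 6 * res * res;
}

// Coefficients per color channel for spherical harmonics with `bands` bands (l = 0..bands-1).
function shValues(bands: number): number {
  return bands * bands;
}

for (const res of [32, 4, 2, 1]) {
  const ratio = cubeMapValues(res) / shValues(3);
  console.log(`${res}x${res} cube map: ${cubeMapValues(res)} values, ${ratio.toFixed(2)}x sh3`);
}
```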

Now, to actually sample the data: with a cube map there is potentially a win at large sizes, since you can analytically figure out which pixel(s) to read and just read those, whereas for spherical harmonics you need to evaluate the entire function with all of its coefficients. So as fidelity goes up, cube maps tend to win in terms of how much compute you have to do. What about smaller sizes? Well, with a 2 x 2 cube map you generally need to read at least 4 pixels for linear filtering to work. On top of that, the hardware has to pick a face based on the direction’s largest component and divide the other two components by it to get texture coordinates, and the linear interpolation itself costs a number of multiplications and additions. Compared to that, sh3 takes around 15 multiplications and 10 additions regardless of the direction being sampled, and there are no branches.
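For comparison with the sh3 evaluation, here is a sketch of what a cube map lookup involves, following the OpenGL face-selection convention (faces ordered +X, -X, +Y, -Y, +Z, -Z). It filters within a single face and clamps at the border, whereas real hardware can also filter across face seams; the function names and the one-`Float32Array`-per-face layout are assumptions for illustration.

```typescript
// Which face a direction hits and where. The major (largest) axis picks the face;
// the other two components are divided by it to get coordinates in [0, 1].
function cubeFaceUV(x: number, y: number, z: number): { face: number; u: number; v: number } {
  const ax = Math.abs(x), ay = Math.abs(y), az = Math.abs(z);
  let face: number, sc: number, tc: number, ma: number;
  if (ax >= ay && ax >= az) {
    face = x >= 0 ? 0 : 1; ma = ax; sc = x >= 0 ? -z : z; tc = -y;
  } else if (ay >= az) {
    face = y >= 0 ? 2 : 3; ma = ay; sc = x; tc = y >= 0 ? z : -z;
  } else {
    face = z >= 0 ? 4 : 5; ma = az; sc = z >= 0 ? x : -x; tc = -y;
  }
  return { face, u: 0.5 * (sc / ma + 1), v: 0.5 * (tc / ma + 1) };
}

// Bilinear lookup of one channel within a single face (res x res texels, row-major).
// Texel indices are clamped at the face border; cross-seam filtering is skipped.
function sampleCube(faces: Float32Array[], res: number, x: number, y: number, z: number): number {
  const { face, u, v } = cubeFaceUV(x, y, z);
  const data = faces[face];
  const fx = u * res - 0.5; // texel centers sit at half-integer coordinates
  const fy = v * res - 0.5;
  const x0 = Math.floor(fx), y0 = Math.floor(fy);
  const tx = fx - x0, ty = fy - y0;
  const clamp = (i: number) => Math.min(res - 1, Math.max(0, i));
  const texel = (i: number, j: number) => data[clamp(j) * res + clamp(i)];
  // Four reads and three lerps.
  const top = texel(x0, y0) * (1 - tx) + texel(x0 + 1, y0) * tx;
  const bottom = texel(x0, y0 + 1) * (1 - tx) + texel(x0 + 1, y0 + 1) * tx;
  return top * (1 - ty) + bottom * ty;
}
```

Note the branches in `cubeFaceUV`: which path runs depends on the direction, whereas an sh3 evaluation is the same fixed sequence of multiplications and additions for every direction.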

So here we are: light probes. In modern engines and applications, we expect to be able to use hundreds, if not thousands, of probes. Here’s a screenshot from Infamous with and without light probes:

For reference, the same game used ~468,000 light probes for the whole world, with ~18k-56k per “block” (world tile). The data for the entire world took up ~25Mb of raw storage. That’s a lot of probes, especially for the time. In their own words, using light maps instead would have taken ~12Mb per block just for the UVs, not counting the textures themselves. Needless to say, that’s a huge saving in space.

The Thing

Finally, we’re getting close to the main point. Light probes offer a very cheap and compact solution to global illumination. Beyond that, they offer a solution that extends into 3 dimensions. Where light maps only record light on surfaces, light probes record data in 3D space and can be used to apply lighting in real time. Picture the market scene above with a character running through it: as he dips in and out of the shaded areas, the light probes would automatically shade him darker or lighter. That is not something you can get from light maps at all.
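How does a point between probes get its lighting? With the tetrahedral meshes described further down, a common approach is to find the tetrahedron containing the point and blend its 4 corner probes using barycentric weights. A minimal sketch, assuming each probe stores a flat array of coefficients (for example sh3); the names are hypothetical and nothing here is taken from the engine’s code.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

// Barycentric weights of p in tetrahedron (a, b, c, d) via Cramer's rule.
// The 4 weights sum to 1 and are all in [0, 1] when p is inside the tet.
function tetBarycentric(p: Vec3, a: Vec3, b: Vec3, c: Vec3, d: Vec3): [number, number, number, number] {
  const ab = sub(b, a), ac = sub(c, a), ad = sub(d, a), ap = sub(p, a);
  const volume = dot(ab, cross(ac, ad)); // 6x the signed volume of the tet
  const wb = dot(ap, cross(ac, ad)) / volume;
  const wc = dot(ab, cross(ap, ad)) / volume;
  const wd = dot(ab, cross(ac, ap)) / volume;
  return [1 - wb - wc - wd, wb, wc, wd];
}

// Blend the coefficient arrays of the 4 probes at the tet's corners.
function interpolateProbes(weights: number[], probes: number[][]): number[] {
  const out = new Array<number>(probes[0].length).fill(0);
  for (let k = 0; k < 4; k++) {
    for (let i = 0; i < out.length; i++) out[i] += weights[k] * probes[k][i];
  }
  return out;
}
```

Because the weights vary continuously inside a tet and agree on shared faces, a character moving through the mesh gets lighting that changes smoothly rather than popping between probes.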

So here I was, having read a bunch of papers about a bunch of awesome games made by awesome people, and I thought to myself… You can do it too, how hard can it be? I can even understand most of the words in those papers and presentations, which is practically 90% of the way there; all that’s left is to write a couple of lines of code and boom!

Well, it turns out I was just a little bit optimistic: it took me the better part of a year, working in my spare time, to get tetrahedral light probe meshes working.

Here are a couple of screenshots from my testing environment:

(view from the bottom, so you can see the colored texture on top to give some nice color variation)

A few more probes

Random distribution

And just to show that we can handle a fair number of probes, here are 100,000 randomly placed ones (the house is somewhere in there, trust me):

The key thing that took me all this time was tetrahedral mesh generation: the part that builds the little tetrahedra (triangular pyramids), each connecting 4 nearby probes. I was a bit surprised when I couldn’t find a tetrahedral mesh generation library for JS, and disappointed when I tried to port existing libraries like TetGen and CGAL, as they really don’t map well to JS. Wrapping them in WASM produced a lot of overhead at the interface level and added a few Mb to the size of the engine. In the end, the generator I wrote is state-of-the-art, quite small (around 16Kb of uncompressed JS) and pretty snappy: the 100,000-point mesh was generated in about 2s. The generator is incremental as well, which means it lets me add and remove points without rebuilding the entire mesh, so editing the light probe mesh at runtime becomes possible. All that is to say, I’m pleased with what I ended up with.
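The post doesn’t say which algorithm the generator uses, so the following rests on an assumption: TetGen and CGAL both build Delaunay tetrahedralizations, in which no point lies strictly inside the circumscribed sphere of any tetrahedron, and incremental generators typically maintain that property by inserting one point at a time (Bowyer-Watson style: remove every tet whose circumsphere contains the new point, then connect the resulting hole to it). The sketch below shows only the core empty-circumsphere test plus a brute-force checker. It uses plain floating point, whereas production generators rely on exact or adaptive-precision predicates to survive near-degenerate input. All names are hypothetical.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

// Circumsphere of tet (a, b, c, d): center and squared radius.
// Solves 2 u.x = |u|^2, 2 v.x = |v|^2, 2 w.x = |w|^2 for x = center - a.
function circumsphere(a: Vec3, b: Vec3, c: Vec3, d: Vec3): { center: Vec3; r2: number } {
  const u = sub(b, a), v = sub(c, a), w = sub(d, a);
  const denom = 2 * dot(u, cross(v, w)); // zero for a flat (degenerate) tet
  const vw = cross(v, w), wu = cross(w, u), uv = cross(u, v);
  const uu = dot(u, u), vv = dot(v, v), ww = dot(w, w);
  const rel: Vec3 = [
    (uu * vw[0] + vv * wu[0] + ww * uv[0]) / denom,
    (uu * vw[1] + vv * wu[1] + ww * uv[1]) / denom,
    (uu * vw[2] + vv * wu[2] + ww * uv[2]) / denom,
  ];
  return { center: [a[0] + rel[0], a[1] + rel[1], a[2] + rel[2]], r2: dot(rel, rel) };
}

// True if p lies strictly inside the circumsphere of (a, b, c, d).
function inCircumsphere(p: Vec3, a: Vec3, b: Vec3, c: Vec3, d: Vec3): boolean {
  const { center, r2 } = circumsphere(a, b, c, d);
  const dp = sub(p, center);
  return dot(dp, dp) < r2;
}

// Brute-force Delaunay check, O(points x tets): no other point may sit inside any circumsphere.
function isDelaunay(points: Vec3[], tets: number[][]): boolean {
  for (const t of tets) {
    const [a, b, c, d] = t.map((i) => points[i]);
    for (let i = 0; i < points.length; i++) {
      if (t.includes(i)) continue; // a tet's own corners lie on its sphere
      if (inCircumsphere(points[i], a, b, c, d)) return false;
    }
  }
  return true;
}
```

The brute-force checker is only useful for testing a generator on small inputs; an incremental generator applies the same test locally, to the tets around each newly inserted point.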

Numbers

Tetrahedral mesh (100,000 points, 669,340 tets) build took 2,057.6 ms

Baked 100,000 probes in 294,668 ms, ~2.95 ms per probe

Here’s Sponza:

Summary

Tetrahedral mesh (10,080 points, 52,080 tets) build took 507.3 ms

Baked 10,080 probes in 59,210.4 ms, ~5.87 ms per probe

Where are we now, then? Well, I think the probes, the way I bake them now, are a bit buggy, and I’d like to move the whole baking process over to ray tracing so I can get more than 1 light bounce and also do the baking inside workers. I haven’t done any work on actually using the probes yet either. The idea is to sample the tetrahedral mesh at least per vertex, so that shading across a mesh draws on more than just the 4 probes of a single tetrahedron and transitions stay very smooth. Then there are various visibility tricks to prevent us from sampling probes that are, say, behind a wall and should not be contributing any light. I’d also like to explore automatic probe placement. The whole point of building tetrahedral meshes from an arbitrary set of points is to be able to populate the scene sparsely, placing more probes around color and light transition boundaries such as the edges of shadows, sharp corners of large meshes and stark texture color transitions. That’s a whole other story, though.
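Sampling the mesh per vertex starts with point location: finding, for each vertex, the tetrahedron that contains it. One common technique, sketched here under assumptions, is a walk: compute the point’s barycentric coordinates in the current tet, and if one is negative, step across the face opposite that vertex. The mesh layout (`tets` as 4 vertex indices, `neighbors[t][i]` as the tet across the face opposite vertex `i`, or -1 on the hull) and all names are hypothetical. Walks of this kind can cycle on some meshes, hence the step cap; randomized (“stochastic”) walks are the usual remedy.

```typescript
type Vec3 = [number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

// Barycentric weights of p in tet (a, b, c, d); weight i < 0 means p lies
// beyond the face opposite vertex i.
function tetBarycentric(p: Vec3, a: Vec3, b: Vec3, c: Vec3, d: Vec3): number[] {
  const ab = sub(b, a), ac = sub(c, a), ad = sub(d, a), ap = sub(p, a);
  const volume = dot(ab, cross(ac, ad));
  const wb = dot(ap, cross(ac, ad)) / volume;
  const wc = dot(ab, cross(ap, ad)) / volume;
  const wd = dot(ab, cross(ac, ap)) / volume;
  return [1 - wb - wc - wd, wb, wc, wd];
}

// Walk from `start` towards p. Returns the containing tet and p's weights in it,
// or null if p is outside the mesh (or the step cap is hit).
function locate(
  p: Vec3,
  points: Vec3[],
  tets: number[][],
  neighbors: number[][],
  start = 0,
  maxSteps = 1000,
): { tet: number; weights: number[] } | null {
  let t = start;
  for (let step = 0; step < maxSteps; step++) {
    const [a, b, c, d] = tets[t].map((i) => points[i]);
    const w = tetBarycentric(p, a, b, c, d);
    let worst = 0; // index of the most negative weight
    for (let i = 1; i < 4; i++) if (w[i] < w[worst]) worst = i;
    if (w[worst] >= -1e-9) return { tet: t, weights: w }; // inside (with a small tolerance)
    const next = neighbors[t][worst];
    if (next < 0) return null; // crossed the hull: p is outside the mesh
    t = next;
  }
  return null;
}
```

Starting each vertex’s walk from the tet found for the previous vertex tends to keep walks short, because neighboring vertices usually land in the same or adjacent tets. The returned weights can then blend the 4 probes exactly as in plain barycentric interpolation.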

Hope you found this interesting!

Credits

- I found @donmccurdy’s work incredibly useful as a base for the light probe stuff.

- Célestin Marot, who was kind enough to walk me through some of the confusing parts of tetrahedral mesh generation, which helped a ton.

- Michał Iwanicki and Peter-Pike Sloan for the incredible detail they provided in their various presentations as well as all of the supplementary material they published on the web.