Hey guys, I’ve been working on a level generator for my new meep-based game for the past month or so, and I thought I’d share some results so far.

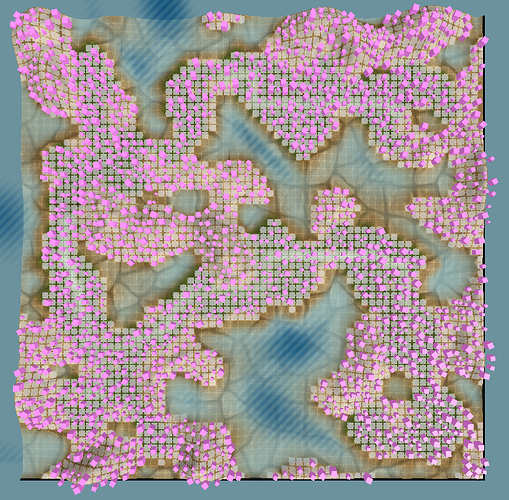

Here’s a small snippet breaking down the placement of trees. The following screenshot shows the landscape, with pink boxes marking the areas where trees will be placed. The goal was to place trees so that they look natural while also respecting various gameplay constraints.

As you can see, the level has many different features, such as varying altitudes, water, and gameplay objects.

The generator I wrote works on a very simple concept: it places objects in 2D space according to a density field. So for tree placement, all that’s required is to describe a density field for where the trees should go.
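Here is a minimal Python sketch of the idea, assuming a density field is simply a function from a 2D position to a value in [0, 1]. To turn the field into positions it uses plain rejection sampling (keep a uniformly random candidate with probability equal to the density). That is one simple technique chosen for illustration, not the meep generator’s actual code, and `DensityField` and `sample_points` are hypothetical names.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical type: a density field maps a 2D position to a value in [0, 1].
DensityField = Callable[[float, float], float]


def sample_points(field: DensityField, width: float, height: float,
                  candidates: int, rng: random.Random) -> List[Tuple[float, float]]:
    """Rejection sampling: scatter uniform candidates, keep each with probability field(x, y)."""
    points = []
    for _ in range(candidates):
        x = rng.uniform(0.0, width)
        y = rng.uniform(0.0, height)
        # Clamp so out-of-range field values still act as valid probabilities.
        p = min(1.0, max(0.0, field(x, y)))
        if rng.random() < p:
            points.append((x, y))
    return points


def right_half(x: float, y: float) -> float:
    # Example field: trees allowed only in the right half of a 64x64 map.
    return 1.0 if x >= 32.0 else 0.0


trees = sample_points(right_half, 64.0, 64.0, 1000, random.Random(42))
```

Rejection sampling ignores object size, so nearby trees can overlap; the coverage-based approach described later in this post takes each object’s radius into account.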

I have a few restrictions that I want to implement:

- A tree shall not be placed on the playable area, where the player can walk and interact with things. This is denoted by the grassy texture in the screenshot.
- A tree shall not be placed on water.
- A tree shall not be placed on steep slopes.

Beyond that, I want the trees to have a natural look: some areas of the map should be more densely forested than others.

To achieve the final result, I created a density field for each restriction, as well as a noise-based density field to provide that natural look. Here are the individual fields, visualized one by one:

Exclusion of playable areas:
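For illustration, if the map stores a per-tile walkability flag (a hypothetical row-major `playable` grid, `True` for tiles the player can walk on), this exclusion field is just a binary mask:

```python
from typing import List


def playable_exclusion(playable: List[List[bool]]) -> List[List[float]]:
    """Density 0.0 on playable tiles, 1.0 everywhere else."""
    return [[0.0 if is_playable else 1.0 for is_playable in row] for row in playable]


# Hypothetical 3x3 tile grid: True marks ground the player can walk on.
grid = [
    [False, True, False],
    [False, True, False],
    [False, False, False],
]
mask = playable_exclusion(grid)
```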

Exclusion of areas that are below the water level:
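Likewise, assuming a row-major heightmap and a single `water_level` (both hypothetical inputs), the water exclusion is zero wherever the terrain sits at or below that level:

```python
from typing import List


def water_exclusion(heights: List[List[float]], water_level: float) -> List[List[float]]:
    """Density 1.0 where terrain is above the water level, 0.0 at or below it."""
    return [[1.0 if h > water_level else 0.0 for h in row] for row in heights]


heights = [[0.0, 0.5], [1.0, 2.0]]
water_mask = water_exclusion(heights, 0.5)
```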

Exclusion of areas with steep slopes:
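One way to build a slope field, assuming a row-major heightmap with uniform `cell_size` spacing and a `max_slope_deg` threshold (all hypothetical parameters), is to estimate the terrain gradient with finite differences and zero out cells whose slope angle exceeds the threshold:

```python
import math
from typing import List


def slope_exclusion(heights: List[List[float]], cell_size: float,
                    max_slope_deg: float) -> List[List[float]]:
    """Return 0.0 where the terrain is steeper than max_slope_deg, 1.0 elsewhere."""
    rows, cols = len(heights), len(heights[0])
    # A slope angle maps to a maximum gradient magnitude (rise over run).
    max_gradient = math.tan(math.radians(max_slope_deg))
    density = [[1.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            # Central differences in the interior, one-sided differences at the edges.
            x0, x1 = max(x - 1, 0), min(x + 1, cols - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, rows - 1)
            dx = (heights[y][x1] - heights[y][x0]) / ((x1 - x0) * cell_size) if x1 != x0 else 0.0
            dy = (heights[y1][x] - heights[y0][x]) / ((y1 - y0) * cell_size) if y1 != y0 else 0.0
            if math.hypot(dx, dy) > max_gradient:
                density[y][x] = 0.0
    return density
```

A hard cutoff keeps the sketch short; a smooth falloff near the threshold would work the same way inside the multiplication step.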

For that natural look, simplex noise:
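Python’s standard library has no simplex noise, so this self-contained sketch swaps in plain value noise (seeded random values on an integer grid, smoothly interpolated). It is a simpler technique than simplex noise, but it fills the same role here: a smooth, seeded field in [0, 1]. `ValueNoise2D`, `forest_density`, and the `scale` parameter are hypothetical names.

```python
import math
import random


class ValueNoise2D:
    """Smooth 2D value noise with outputs in [0, 1]."""

    def __init__(self, seed: int, period: int = 256) -> None:
        rng = random.Random(seed)
        self.period = period
        # One random value per lattice point; the pattern repeats every `period` units.
        self.values = [rng.random() for _ in range(period * period)]

    def _lattice(self, ix: int, iy: int) -> float:
        # Wrap coordinates so negative or large inputs still index the table.
        return self.values[(iy % self.period) * self.period + (ix % self.period)]

    def __call__(self, x: float, y: float) -> float:
        ix, iy = math.floor(x), math.floor(y)
        fx, fy = x - ix, y - iy
        # Smoothstep weights have zero slope at lattice points, avoiding the
        # visible creases of plain bilinear blending.
        sx = fx * fx * (3.0 - 2.0 * fx)
        sy = fy * fy * (3.0 - 2.0 * fy)
        row0 = self._lattice(ix, iy) * (1.0 - sx) + self._lattice(ix + 1, iy) * sx
        row1 = self._lattice(ix, iy + 1) * (1.0 - sx) + self._lattice(ix + 1, iy + 1) * sx
        return row0 * (1.0 - sy) + row1 * sy


def forest_density(noise: ValueNoise2D, x: float, y: float, scale: float = 0.1) -> float:
    # Smaller `scale` stretches the noise, producing larger forest patches.
    return noise(x * scale, y * scale)
```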

The final result is simply the product of these densities:
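Multiplication acts as a logical AND for the binary masks (any zero vetoes placement), while the continuous noise field scales the density anywhere between 0 and 1. A minimal sketch, with hypothetical stand-in layers in place of the real fields:

```python
from typing import Callable, List

DensityField = Callable[[float, float], float]


def multiply_fields(fields: List[DensityField]) -> DensityField:
    """Combine density fields into one by multiplying their values at each point."""
    def combined(x: float, y: float) -> float:
        result = 1.0
        for field in fields:
            result *= field(x, y)
        return result
    return combined


# Hypothetical stand-ins for the individual layers.
def not_playable(x: float, y: float) -> float:
    return 0.0 if 10.0 <= x < 20.0 else 1.0


def above_water(x: float, y: float) -> float:
    return 0.0 if y < 5.0 else 1.0


def natural_variation(x: float, y: float) -> float:
    return 0.5


tree_density = multiply_fields([not_playable, above_water, natural_variation])
```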

I initially considered implementing a vegetation growth simulation to distribute the foliage (such as trees), but in the end I decided that “realism” was not necessary for the kind of game I’m making, and I don’t have many types of trees to juggle.

However, this approach does support some elements of additional realism, such as having a temperature or precipitation layer and sampling those to compute additional density fields.
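As an example of such an extra layer, this sketch converts sampled temperature and precipitation values into a density using a Gaussian-shaped preference curve. The curve shape, the preferred values, and the function names are assumptions chosen for illustration; the result would be multiplied in like any other field.

```python
import math


def preference(value: float, ideal: float, tolerance: float) -> float:
    """Gaussian-shaped preference: 1.0 at the ideal value, falling off with distance."""
    d = (value - ideal) / tolerance
    return math.exp(-0.5 * d * d)


def climate_density(temperature: float, precipitation: float) -> float:
    # Hypothetical species profile: prefers about 15 degrees C and 800 mm of rain per year.
    return preference(temperature, 15.0, 8.0) * preference(precipitation, 800.0, 300.0)
```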

One more point: the generator uses density as a measure of how much of an area should be covered by objects. With a density of 1, the generator will attempt to pack objects as tightly as it can, whereas with a density of 0.1 it will try to keep only about 10% of the area occupied.
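To turn a coverage density into a concrete number of objects, one option, assuming each object covers a circle of radius `r` (area π r²) and the field is sampled once per unit map cell, is to sum the desired covered area and divide by the per-object footprint. `target_count` is a hypothetical helper, not part of meep.

```python
import math
from typing import Callable

DensityField = Callable[[float, float], float]


def target_count(field: DensityField, width: int, height: int, radius: float) -> int:
    """Estimate how many objects of the given radius make the occupied area match the field."""
    desired_area = 0.0
    for y in range(height):
        for x in range(width):
            # Sample at the cell centre; each cell has an area of 1.
            desired_area += field(x + 0.5, y + 0.5)
    footprint = math.pi * radius * radius
    return round(desired_area / footprint)
```

Equal circles cannot cover the plane without gaps: the densest arrangement (hexagonal packing) covers about 90.7% of the area, so a density of 1 realistically means “as tight as possible” rather than full coverage.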

To facilitate this, each object provides a simple 2D radius, which is used to calculate the area it occupies. Here’s a version of the above generation process with tree sizes scaled down by 50%:

You will notice that a lot more trees are being placed, as the generator is trying to achieve the same overall coverage density.
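The size of the effect follows from the coverage rule, assuming the generator targets a fixed covered area and that “scaled down by 50%” means the radius is halved. With density $\rho$ over an area $A$ and a per-tree footprint of $\pi r^2$, the tree count $N$ scales with $1/r^2$, so halving the radius should call for roughly four times as many trees:

$$
N \approx \frac{\rho A}{\pi r^2}
\qquad\Longrightarrow\qquad
\frac{N(r/2)}{N(r)} = \frac{\pi r^2}{\pi (r/2)^2} = 4
$$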

I plan to eventually release this generator as part of a future meep update, but there is no ETA on this for now.

If anyone is curious, the entire generation process for this 64x64 map takes about 1-2 seconds.

For this 128x128 map, it takes about 4 seconds:

That’s all, folks! Let me know what you think.

I’m also curious whether anyone else has experience with this kind of thing, and what approaches you use for foliage placement.
