Spent more time on the problem, learned a lot more about optics and participating media.

The most interesting thing is - I split the code into 3 distinct passes:

- Participating Media integration

- Light integration

- Final gather

It’s the same structure the Frostbite team proposed.

In the first pass we take user-defined volumes and resample them into a froxel grid. This allows us to define hundreds or thousands of distinct particle volumes in the scene without significant performance overhead.
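To make the resampling idea a bit more concrete, here is a minimal sketch of one way it could work along a single view ray. It assumes exponentially spaced depth slices and axis-aligned box volumes with a uniform extinction value; both are simplifications chosen for illustration, and all function names here (`sliceToDepth`, `depthToSlice`, `sampleExtinction`, `resampleAlongRay`) are hypothetical rather than taken from the engine.

```javascript
// Assumed froxel layout: depth slices spaced exponentially between near and far.
function sliceToDepth(slice, sliceCount, near, far) {
  return near * Math.pow(far / near, slice / sliceCount);
}

// Inverse mapping: which slice does a given view depth fall into (clamped).
function depthToSlice(depth, sliceCount, near, far) {
  const t = Math.log(depth / near) / Math.log(far / near);
  return Math.min(sliceCount - 1, Math.max(0, Math.floor(t * sliceCount)));
}

// Hypothetical volume description: { min: [x,y,z], max: [x,y,z], extinction: 1/m }.
// Overlapping volumes simply add up, which is how media mix physically.
function sampleExtinction(volumes, p) {
  let sigma_t = 0;
  for (const v of volumes) {
    const inside = p.every((c, axis) => c >= v.min[axis] && c <= v.max[axis]);
    if (inside) sigma_t += v.extinction;
  }
  return sigma_t;
}

// Resample all volumes into the slices of one froxel column (one view ray).
function resampleAlongRay(volumes, origin, direction, sliceCount, near, far) {
  const out = new Float32Array(sliceCount);
  for (let i = 0; i < sliceCount; i++) {
    const depth = sliceToDepth(i + 0.5, sliceCount, near, far); // slice center
    const p = origin.map((o, axis) => o + direction[axis] * depth);
    out[i] = sampleExtinction(volumes, p);
  }
  return out;
}
```

Once the grid is filled, the later passes only ever read the grid, so their cost no longer depends on how many volumes were authored.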

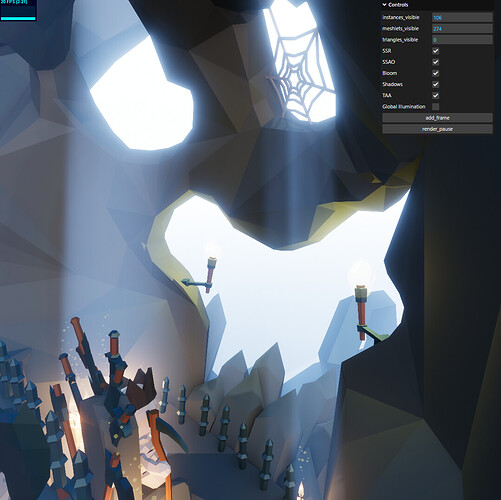

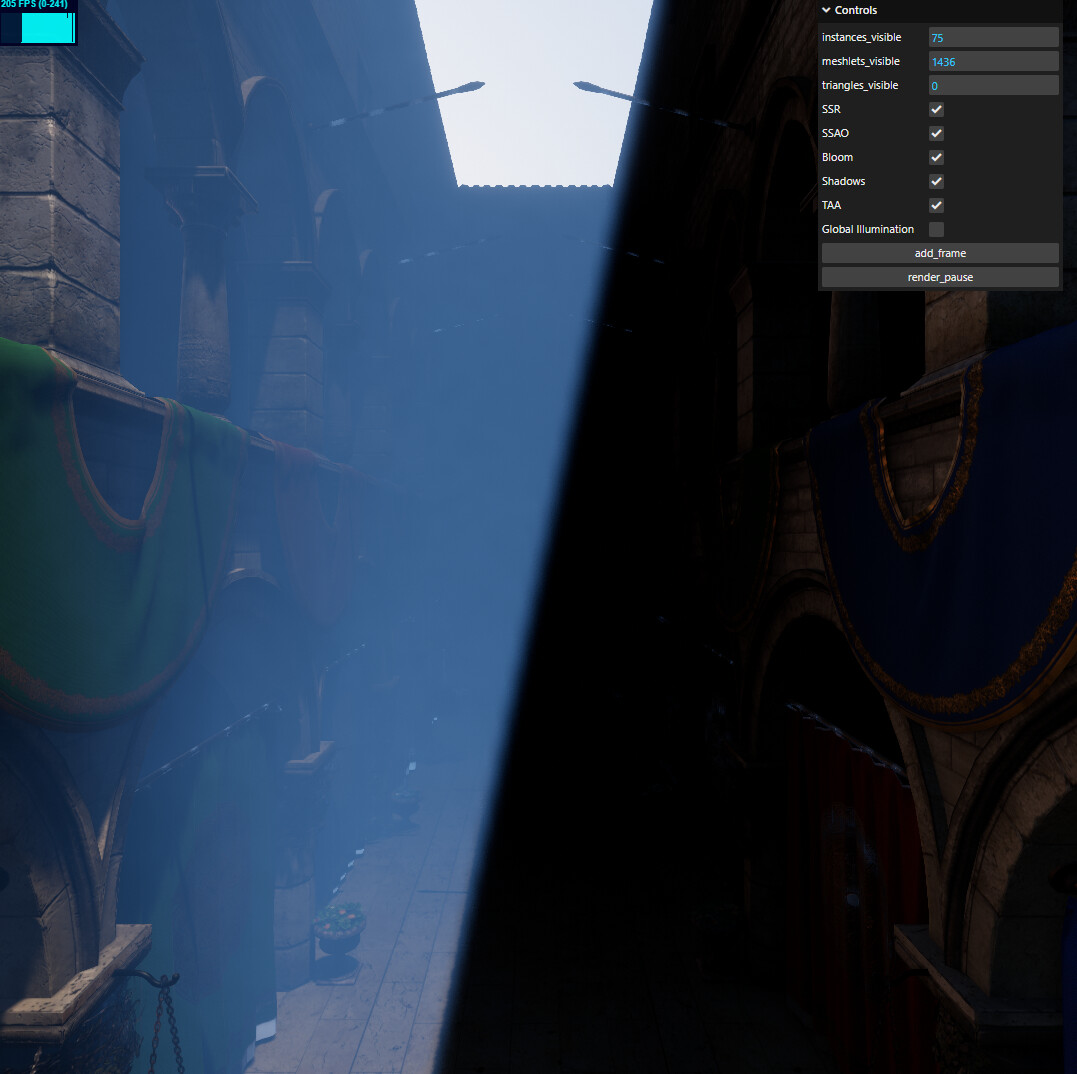

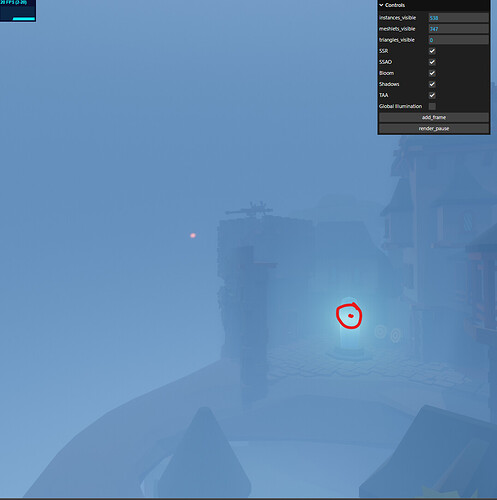

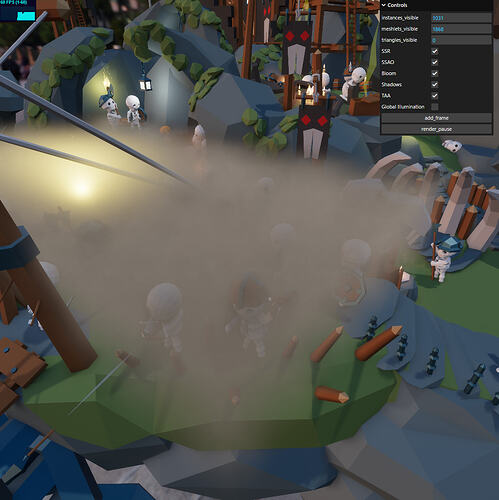

The second pass calculates in-scattering from all lights in the scene. I’ve added a multiple-scattering approximation based on Magnus Wrenninge’s work at Sony (see “Oz: The Great and Volumetric”). Another important piece here: I integrate optical depth for each light, meaning that lights correctly dim with distance. You can see it in the screenshot, where the torch on the left of the screen gets sharply attenuated the further its light has to travel through the volume.
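As a rough illustration of those two ideas, here is a single-channel sketch. It assumes a Henyey-Greenstein phase function and an octave-style multiple-scattering sum in the spirit of Wrenninge's approximation, where each octave reduces the optical depth, the scattering contribution, and the anisotropy by factors `a`, `b`, and `c`. The function names and the default factor values are assumptions for illustration, not the actual shader code.

```javascript
// Henyey-Greenstein phase function. cosTheta is the cosine of the angle
// between the light's propagation direction and the scattered direction.
function phaseHG(cosTheta, g) {
  const g2 = g * g;
  const denom = 1 + g2 - 2 * g * cosTheta;
  return (1 - g2) / (4 * Math.PI * denom * Math.sqrt(denom));
}

// Optical depth between a sample point and a light: integrate extinction
// along the segment with the midpoint rule. extinctionAt(t) is a
// caller-supplied function returning sigma_t (1/m) at distance t.
function opticalDepth(extinctionAt, distance, steps = 16) {
  const dt = distance / steps;
  let tau = 0;
  for (let i = 0; i < steps; i++) {
    tau += extinctionAt((i + 0.5) * dt) * dt;
  }
  return tau;
}

// Octave-style multiple-scattering approximation (assumed form):
// octave i uses optical depth a^i * tau, weight b^i, anisotropy c^i * g.
// With octaves = 1 this is plain single scattering with Beer-Lambert dimming.
function inScatterApprox(sample, octaves = 4, a = 0.5, b = 0.5, c = 0.5) {
  const { lightRadiance, sigma_s, tau, cosTheta, g } = sample;
  let sum = 0;
  for (let i = 0; i < octaves; i++) {
    const ai = Math.pow(a, i);
    const bi = Math.pow(b, i);
    const ci = Math.pow(c, i);
    sum += bi * sigma_s * lightRadiance * Math.exp(-ai * tau) * phaseHG(cosTheta, ci * g);
  }
  return sum;
}
```

The `Math.exp(-tau)` term is what makes a light dim with the amount of medium between it and the sample, rather than only with distance.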

The final gather is fairly simple: we just march through the volume back to front and accumulate visible light and extinction. The only complicated thing I do here is use a polynomial curve approximation for the integration instead of the standard Riemann sum. This is something the Frostbite presentation also highlights:

You can see here multiple volumes with increasing scattering properties. It is easy to understand that integrating scattering and then transmittance is not energy conservative.

We could reverse the order of operations. You can see that we somewhat get back the correct albedo one would expect, but it is overall too dark, and temporally integrating that is definitely not helping here.

So how to improve this? We know we have one light and one extinction sample.

We can keep the light sample: it is expensive to evaluate and good enough to assume it is constant along the view ray inside each depth slice.

But the single transmittance is completely wrong. The transmittance should in fact be 1 at the near interface of the depth slice and exp(-mu_t d) at the far interface of the depth slice of width d.

What we do to solve this is integrate scattered light analytically according to the transmittance at each point on the view ray within the slice. One can easily find that the analytical integration of constant scattered light over a definite range according to one extinction sample reduces to this equation.

Using this, we finally get a consistent lighting result for scattering with respect to our single extinction sample (as you can see in the bottom picture).

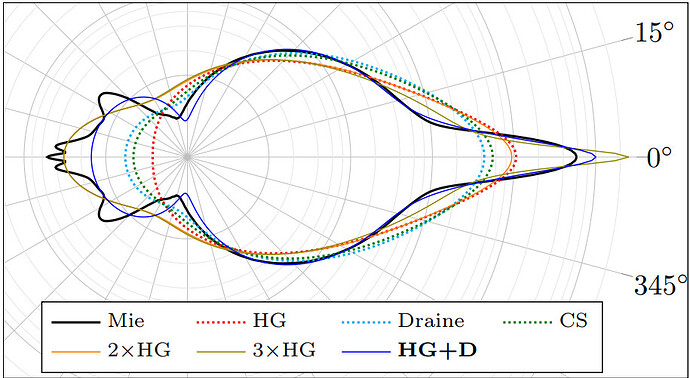
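For reference, the analytic form described in the quoted notes is the integral of S * exp(-sigma_t * x) over a slice of width d, which works out to (S - S * exp(-sigma_t * d)) / sigma_t. Below is a minimal single-channel sketch of that formula plus a back-to-front gather built on it. This is the Frostbite-style analytic integration, not the polynomial approximation mentioned above, and the names `integrateSlice` and `gatherBackToFront` are made up for this example.

```javascript
// Light scattered toward the camera from one slice of width d, assuming the
// scattered light S and the extinction sigma_t are constant inside the slice.
function integrateSlice(S, sigma_t, d) {
  if (sigma_t < 1e-6) return S * d; // limit of the formula as sigma_t -> 0
  const T = Math.exp(-sigma_t * d);
  return (S - S * T) / sigma_t;
}

// Back-to-front gather. slices are ordered near to far, each one is
// { S, sigma_t, d }. Returns accumulated radiance and total transmittance.
function gatherBackToFront(slices) {
  let radiance = 0;
  let transmittance = 1;
  for (let i = slices.length - 1; i >= 0; i--) {
    const { S, sigma_t, d } = slices[i];
    const T = Math.exp(-sigma_t * d);
    // Everything behind this slice is dimmed by it, then the slice adds its own light.
    radiance = integrateSlice(S, sigma_t, d) + T * radiance;
    transmittance *= T;
  }
  return { radiance, transmittance };
}
```

A useful property of this form is that a homogeneous medium gives the same answer regardless of how many slices it is cut into, which is not true of the naive `S * d * T` Riemann step.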

While I was trying to wrap my head around the physics of this, I ended up writing a Mie simulator in JS based on the SIGGRAPH 2007 paper “Computing the Scattering Properties of Participating Media Using Lorenz-Mie Theory”. I wanted a system that doesn’t just work for smoke, or just for fog, or just for clouds, but for all types of participating media. Incidentally, here’s a table I generated. Feel free to use it; I would appreciate attribution:

```javascript
/**
 * 📚 Precomputed standard atmospheric particle library for rendering.
 *
 * This file is GENERATED. Do not edit by hand.
 * Generation settings: 380–780 nm, step_size=1nm, xyz CMFs, medium=air. Integrated to sRGB for D65 illuminant
 *
 * Each entry contains:
 * - radius: Particle radius in meters.
 * - cross_section_scattering: [R, G, B] scattering coefficients (m²).
 * - cross_section_extinction: [R, G, B] extinction coefficients (m²).
 * - g: Asymmetry parameter (average cosine of the scattering angle).
 */
export const MIE_PARTICLES_STANDARD_PRECOMPUTED = {
    // --- 💧 Water-Based (Haze, Fog, Clouds) ---

    /**
     * Continental haze (ammonium sulfate surrogate)
     * SMALL — faint land/city haze: distant skyline slightly washed out on a sunny day.
     * Diameter: 100 nm
     */
    CONTINENTAL_HAZE_SMALL: {
        radius: 5.0e-8,
        cross_section_scattering: [1.0372407599145680e-16, 2.1697944076390811e-16, 4.9834805745060257e-16],
        cross_section_extinction: [1.0390516205855430e-16, 2.1717567420831002e-16, 4.9879159253139388e-16],
        g: 0.06687111747972882,
    },

    /**
     * MEDIUM — typical daytime urban/valley haze with gentle desaturation.
     * Diameter: 500 nm
     */
    CONTINENTAL_HAZE_MEDIUM: {
        radius: 2.5e-7,
        cross_section_scattering: [5.4565579877961842e-13, 6.8621977746322113e-13, 8.2869951608010304e-13],
        cross_section_extinction: [5.4570905150853122e-13, 6.8627271753511908e-13, 8.2881953884176050e-13],
        g: 0.72195208606467531,
    },

    /**
     * LARGE — thicker land haze; think post‑inversion murk softening hills.
     * Diameter: 1.0 µm
     */
    CONTINENTAL_HAZE_LARGE: {
        radius: 5.0e-7,
        cross_section_scattering: [3.0366185612989802e-12, 2.1644690526988712e-12, 1.3935109366522711e-12],
        cross_section_extinction: [3.0370652035911430e-12, 2.1649491115406566e-12, 1.3945337813123169e-12],
        g: 0.6137288243405209,
    },

    /**
     * Maritime haze (sea‑salt/brine)
     * SMALL — light coastal humidity haze over the ocean.
     * Diameter: 100 nm
     */
    MARITIME_HAZE_SMALL: {
        radius: 5.0e-8,
        cross_section_scattering: [5.5914464124496594e-17, 1.1568131266404890e-16, 2.6209632958954776e-16],
        cross_section_extinction: [5.5915758040155253e-17, 1.1568241782228503e-16, 2.6209808791233174e-16],
        g: 0.062667411576877274,
    },

    /**
     * MEDIUM — bright, milky air on a breezy beach or around harbors.
     * Diameter: 500 nm
     */
    MARITIME_HAZE_MEDIUM: {
        radius: 2.5e-7,
        cross_section_scattering: [2.7132966283888992e-13, 4.0042270998895428e-13, 5.5420120025016184e-13],
        cross_section_extinction: [2.7132992896636519e-13, 4.0042294960829909e-13, 5.5420156922320274e-13],
        g: 0.75572608442123446,
    },

    /**
     * LARGE — thick marine layer look before it becomes fog.
     * Diameter: 1.0 µm
     */
    MARITIME_HAZE_LARGE: {
        radius: 5.0e-7,
        cross_section_scattering: [3.0886970942611371e-12, 3.1506283070098210e-12, 2.6753836337037947e-12],
        cross_section_extinction: [3.0886995489983882e-12, 3.1506303784552175e-12, 2.6753868296970645e-12],
        g: 0.82009537287886958,
    },

    /**
     * Fog & Cloud Droplets
     * SMALL — light mist: dawn over a lake, waterfall spray, breath fog.
     * Diameter: 2.0 µm
     */
    FOG_DROPLET_SMALL: {
        radius: 1.0e-6,
        cross_section_scattering: [6.6872146821004324e-12, 5.4309369486615811e-12, 7.5571588161013023e-12],
        cross_section_extinction: [6.6872171928267207e-12, 5.4309373592604994e-12, 7.5571591319672130e-12],
        g: 0.66286908969703728,
    },

    /**
     * MEDIUM — typical road fog reducing visibility to a few hundred meters.
     * Diameter: 10.0 µm
     */
    FOG_DROPLET_MEDIUM: {
        radius: 5.0e-6,
        cross_section_scattering: [1.7036472789376784e-10, 1.6665060947239930e-10, 1.6466175909414485e-10],
        cross_section_extinction: [1.7036520771027743e-10, 1.6665065143578210e-10, 1.6466177643977473e-10],
        g: 0.85263072331984047,
    },

    /**
     * LARGE — bright, thick cloud core or dense sea fog.
     * Diameter: 20.0 µm
     */
    CLOUD_DROPLET_LARGE: {
        radius: 1.0e-5,
        cross_section_scattering: [6.5315742448115536e-10, 6.5618165104565060e-10, 6.5250478893917499e-10],
        cross_section_extinction: [6.5315858886811270e-10, 6.5618181350359152e-10, 6.5250489005400894e-10],
        g: 0.86080713114012297,
    },

    // --- 🔥 Combustion (Smoke & Soot) ---

    /**
     * Biomass Smoke (e.g., Wood, Wildfire) — "Brown Carbon"
     * SMALL — fresh wood‑smoke right above the flames.
     * Diameter: 400 nm
     */
    SMOKE_PARTICLE_SMALL: {
        radius: 2.0e-7,
        cross_section_scattering: [2.5095238381331490e-13, 3.2817527241713552e-13, 4.7184181688431561e-13],
        cross_section_extinction: [2.5244957121744367e-13, 3.3073489397071378e-13, 4.7766204956433065e-13],
        g: 0.64153537730937171,
    },

    /**
     * MEDIUM — typical wildfire/chimney smoke drifting across a valley.
     * Diameter: 800 nm
     */
    SMOKE_PARTICLE_MEDIUM: {
        radius: 4.0e-7,
        cross_section_scattering: [2.2186086454447846e-12, 1.8611290477348588e-12, 1.2017961650839806e-12],
        cross_section_extinction: [2.2347400788139265e-12, 1.8849701372888018e-12, 1.2529531542589939e-12],
        g: 0.68088789083915369,
    },

    /**
     * LARGE — aged regional smoke layers that turn the sun orange.
     * Diameter: 2.0 µm
     */
    SMOKE_PARTICLE_LARGE: {
        radius: 1.0e-6,
        cross_section_scattering: [9.7154096631596466e-12, 6.5695017319655712e-12, 6.5950874641241054e-12],
        cross_section_extinction: [9.9744536671262377e-12, 6.9383549994562171e-12, 7.2416530016257137e-12],
        g: 0.73891619526845487,
    },

    /**
     * Soot (e.g., Diesel Exhaust) — "Black Carbon"
     * SMALL — very dark sooty exhaust: candle wick zone or fresh tailpipe soot.
     * Diameter: 50 nm
     */
    SOOT_PARTICLE_SMALL: {
        radius: 2.5e-8,
        cross_section_scattering: [4.9471331742851396e-18, 1.1425655543629920e-17, 3.3950349242847612e-17],
        cross_section_extinction: [4.9469989827650098e-16, 6.4148352307268963e-16, 9.4380272697116261e-16],
        g: 0.018039326863221513,
    },

    /**
     * MEDIUM — traffic/industrial pollution haze mixing into city air.
     * Diameter: 200 nm
     */
    SOOT_AGGREGATE_MEDIUM: {
        radius: 1.0e-7,
        cross_section_scattering: [1.6352855504532771e-14, 2.6526196915106281e-14, 3.7046081158626368e-14],
        cross_section_extinction: [5.6514531230912217e-14, 7.3826992547192368e-14, 8.8338524712659901e-14],
        g: 0.31674236124633798,
    },

    /**
     * LARGE — heavy dirty smoke near source: burning oil/tires.
     * Diameter: 400 nm
     */
    SOOT_AGGREGATE_LARGE: {
        radius: 2.0e-7,
        cross_section_scattering: [1.6063607357301153e-13, 1.6542541029497725e-13, 1.6709682614452953e-13],
        cross_section_extinction: [3.6165255982805808e-13, 3.6212311121035904e-13, 3.5517926834511851e-13],
        g: 0.69133198032115784,
    },

    // --- 💨 Solid Particulates (Dust & Pollen) ---

    /**
     * Mineral Dust (e.g., Desert, Sand)
     * SMALL — far‑range dusty air softening distant mountains.
     * Diameter: 1.5 µm
     */
    FINE_DUST_SMALL: {
        radius: 7.5e-7,
        cross_section_scattering: [2.6570379687422880e-12, 3.6996919661206611e-12, 4.5471939176491137e-12],
        cross_section_extinction: [2.8819492220384537e-12, 4.1008076519912007e-12, 5.1659038204372376e-12],
        g: 0.63886827482281139,
    },

    /**
     * MEDIUM — moving dust clouds from vehicles or field winds.
     * Diameter: 5.0 µm
     */
    COARSE_DUST_MEDIUM: {
        radius: 2.5e-6,
        cross_section_scattering: [3.5472196264524937e-11, 3.3430210987054714e-11, 3.2075451113920786e-11],
        cross_section_extinction: [4.2441528450403185e-11, 4.3597399152097500e-11, 4.3350184971955535e-11],
        g: 0.84075100028702532,
    },

    /**
     * LARGE — sandstorm wall: near‑camera blowing sand/tan curtains.
     * Diameter: 15.0 µm
     */
    COARSE_DUST_LARGE: {
        radius: 7.5e-6,
        cross_section_scattering: [3.1816278385853973e-10, 2.9406557017587955e-10, 2.6643041098315311e-10],
        cross_section_extinction: [3.7355130308305938e-10, 3.7144494812949936e-10, 3.6814067045857220e-10],
        g: 0.85247658559411998,
    },

    /**
     * Pollen (Organic)
     * MEDIUM — seasonal pollen haze; yellow‑green tint in spring air.
     * Diameter: 20.0 µm
     */
    POLLEN_PARTICLE_MEDIUM: {
        radius: 1.0e-5,
        cross_section_scattering: [6.3652345959538284e-10, 6.2647624730458445e-10, 5.8718713214140584e-10],
        cross_section_extinction: [6.5918545142090177e-10, 6.5700023143709686e-10, 6.5127465699910976e-10],
        g: 0.81082270359712194,
    },

    /**
     * LARGE — visible puffs from trees (e.g., pine) or catkins in forests.
     * Diameter: 30.0 µm
     */
    POLLEN_PARTICLE_LARGE: {
        radius: 1.5e-5,
        cross_section_scattering: [1.3961132818036018e-9, 1.3739693858138355e-9, 1.2750015299393954e-9],
        cross_section_extinction: [1.4562652589727905e-9, 1.4596101004954230e-9, 1.4523332353409636e-9],
        g: 0.81884345812519288,
    },
};
```

The table is generated for the D65 illuminant, in linear sRGB, using a 1 nm spectral sweep from 380 nm to 780 nm, so the spectral integration is about as radiometrically accurate as it gets. The refractive indices for each type of medium were mostly pulled from published tables in Applied Optics.
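Note that the table stores per-particle cross-sections in m², not the per-meter coefficients a renderer marches with. The usual conversion is to multiply by the number density N (particles per cubic meter). Here is a small sketch of that step; `mediumCoefficients` is a hypothetical helper, the particle values are copied from the `CLOUD_DROPLET_LARGE` entry, and the density is just an example value for a dense fog.

```javascript
// Values copied from the CLOUD_DROPLET_LARGE entry of the table.
const CLOUD_DROPLET_LARGE = {
  radius: 1.0e-5,
  cross_section_scattering: [6.5315742448115536e-10, 6.5618165104565060e-10, 6.5250478893917499e-10],
  cross_section_extinction: [6.5315858886811270e-10, 6.5618181350359152e-10, 6.5250489005400894e-10],
  g: 0.86080713114012297,
};

// Hypothetical helper: cross-section (m^2) * number density (1/m^3)
// gives the coefficient (1/m) per RGB channel.
function mediumCoefficients(particle, numberDensity) {
  const scale = (crossSection) => crossSection * numberDensity;
  return {
    sigma_s: particle.cross_section_scattering.map(scale), // scattering, 1/m
    sigma_t: particle.cross_section_extinction.map(scale), // extinction, 1/m
    g: particle.g,
  };
}

// Example: roughly 1.5 billion droplets per cubic meter.
const fogCoefficients = mediumCoefficients(CLOUD_DROPLET_LARGE, 1527046979);

// Beer-Lambert: fraction of light surviving 1 m of this medium, per channel.
const transmittanceOverOneMeter = fogCoefficients.sigma_t.map((s) => Math.exp(-s * 1.0));
```

With that density the extinction comes out close to 1 per meter in every channel, i.e. a mean free path of about one meter.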

What this means in practice, is we can create volumes like so:

```javascript
const fog = new ParticipatingMediaVolume();
fog.transform.position.set(-55, 4, -5.116);
fog.transform.scale.set(10, 3, 20); // 10m by 3m by 20m
fog.transform.rotation.fromAxisAngle(Vector3.up, 83 * (Math.PI / 180));
fog.fade_distance = 0.1; // fade density of the volume to 0 over the distance of 10cm at the edge of the volume
fog.particle_spec = VolumetricsParticleSpec.fromMeep(MIE_PARTICLES_STANDARD_PRECOMPUTED.CLOUD_DROPLET_LARGE);
fog.density = 1527046979; // N, number of particles per cubic meter. Yes there are a LOT of water droplets in dense fog :D
scene.volumetrics.add(fog);
```

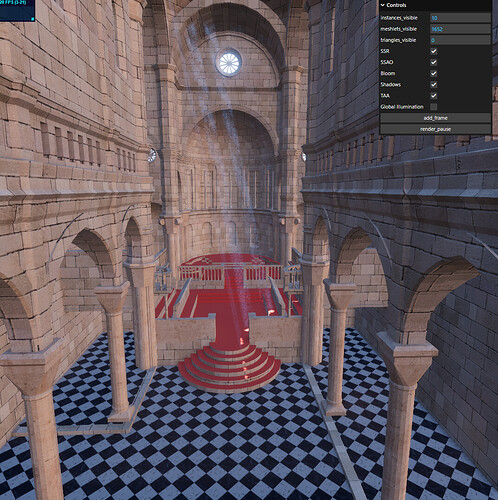

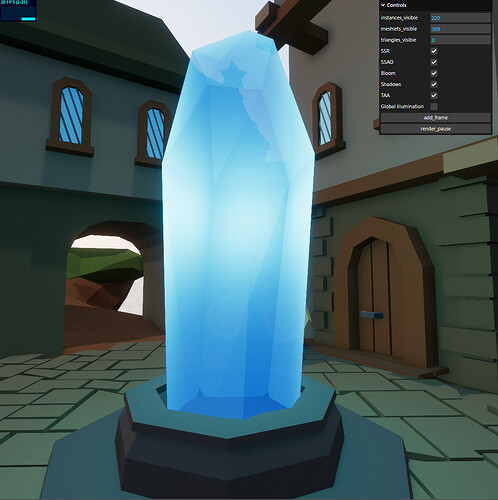

And you get a result like this:

If we set the particle specification to something else, the visual appearance changes quite noticeably:

COARSE_DUST_MEDIUM

MARITIME_HAZE_MEDIUM

SMOKE_PARTICLE_LARGE

SOOT_AGGREGATE_MEDIUM

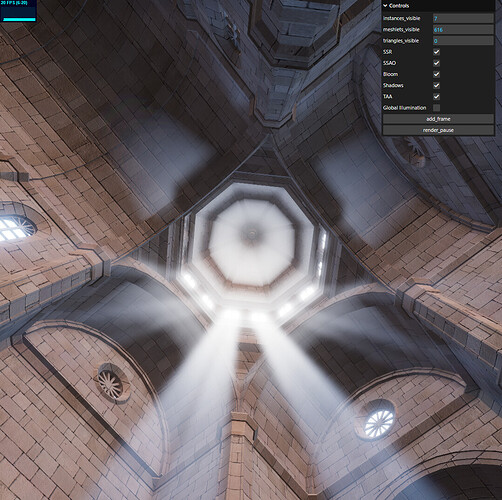

You’ll notice different absorption/scattering behavior. Some types of media scatter more light, some absorb more, some scatter more light forward, some scatter light uniformly. These behaviors are also spectrum-dependent, meaning light changes color. If you look over the screenshots above, you’ll notice that the same yellow torch shifts color quite drastically in different types of volume, and at different depths.
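One way to read these differences straight off the table is the single-scattering albedo, the ratio of scattering to extinction cross-section per channel: near 1 means almost all intercepted light is scattered, near 0 means almost all of it is absorbed. A small sketch follows, with values copied from two table entries; `singleScatteringAlbedo` is a hypothetical helper name.

```javascript
// Values copied from the table above.
const SOOT_PARTICLE_SMALL = {
  cross_section_scattering: [4.9471331742851396e-18, 1.1425655543629920e-17, 3.3950349242847612e-17],
  cross_section_extinction: [4.9469989827650098e-16, 6.4148352307268963e-16, 9.4380272697116261e-16],
  g: 0.018039326863221513,
};
const CLOUD_DROPLET_LARGE = {
  cross_section_scattering: [6.5315742448115536e-10, 6.5618165104565060e-10, 6.5250478893917499e-10],
  cross_section_extinction: [6.5315858886811270e-10, 6.5618181350359152e-10, 6.5250489005400894e-10],
  g: 0.86080713114012297,
};

// Hypothetical helper: per-channel fraction of extinguished light that is
// scattered rather than absorbed.
function singleScatteringAlbedo(particle) {
  return particle.cross_section_scattering.map(
    (sca, channel) => sca / particle.cross_section_extinction[channel]
  );
}

const sootAlbedo = singleScatteringAlbedo(SOOT_PARTICLE_SMALL); // mostly absorbing
const cloudAlbedo = singleScatteringAlbedo(CLOUD_DROPLET_LARGE); // almost purely scattering
```

The `g` values tell the other half of the story: the tiny soot particle has `g` near 0 (nearly uniform scattering), while the cloud droplet has `g` around 0.86 (strongly forward).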

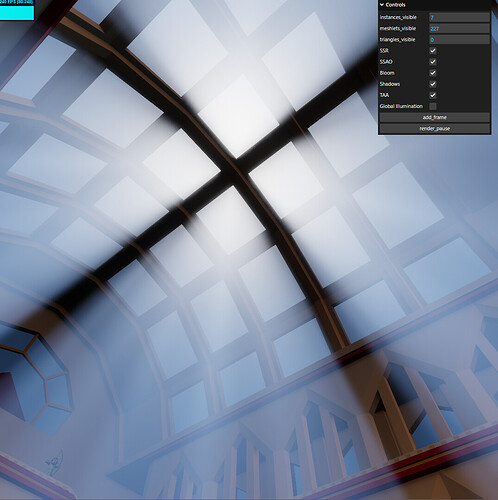

And if we get up close, we can see that the light shafts are still there

And you can mix volumes quite naturally as well

Here we have a volume of dense smoke crossing over a light volume of fog.

Here’s what that looks like inside.