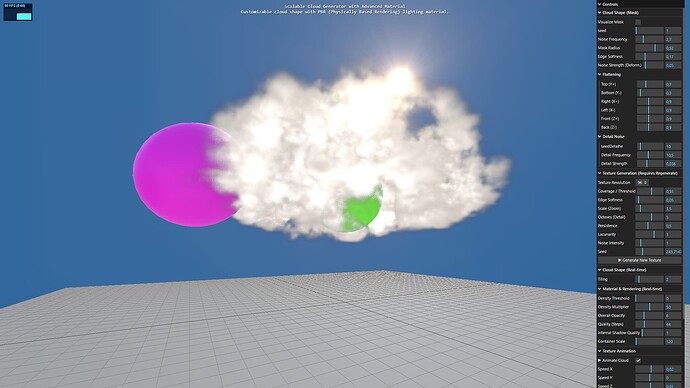

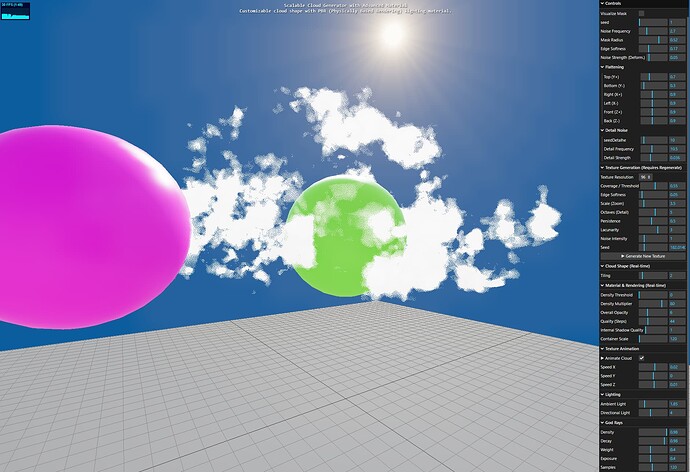

UPDATE: I noticed that in my earlier versions, sunlight penetrated the clouds more (see the images above). I spent a lot of time trying to figure out what was wrong, discussing it with the AI and assuming the problem was in the cloud shader. In the end, I discovered that the tone mapping was cutting off the Sun's lighting effect. So I updated the code on GitHub: I simply commented out the lines below and adjusted the initial parameters:

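```javascript
// Tone mapping disabled: the renderer falls back to its default, THREE.NoToneMapping.
// renderer.toneMapping = THREE.ACESFilmicToneMapping;
// renderer.toneMappingExposure = 1.0;
```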
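To illustrate how a filmic curve can dim bright sunlight, here is a small standalone sketch in plain JavaScript. It assumes Krzysztof Narkowicz's popular ACES approximation rather than the exact curve three.js uses for `THREE.ACESFilmicToneMapping`, and the sample radiance values are arbitrary, so treat the output as a qualitative comparison only.

```javascript
// Sketch: how a filmic tone-mapping curve compresses bright HDR values.
// Assumption: this is Krzysztof Narkowicz's ACES approximation, not the exact
// curve three.js uses for THREE.ACESFilmicToneMapping; only the trend matters.

function clamp01(x) {
  return Math.min(Math.max(x, 0), 1);
}

// ACES-style filmic curve, applied after an exposure multiplier
// (three.js also scales colors by toneMappingExposure before its curve).
function acesApprox(x, exposure = 1.0) {
  const v = x * exposure;
  const a = 2.51, b = 0.03, c = 2.43, d = 0.59, e = 0.14;
  return clamp01((v * (a * v + b)) / (v * (c * v + d) + e));
}

// With tone mapping disabled, values are only clamped to the displayable range.
function noToneMapping(x) {
  return clamp01(x);
}

// Hypothetical HDR radiance values, e.g. sunlight seen through thin cloud.
for (const x of [0.25, 1.0, 4.0, 10.0]) {
  console.log(
    `in=${x.toFixed(2)} aces=${acesApprox(x).toFixed(3)} none=${noToneMapping(x).toFixed(3)}`
  );
}
```

With this approximation, an input of 1.0 maps to about 0.80 and an input of 4.0 to about 0.97, so a fourfold difference in radiance shrinks to roughly 20 percent on screen, while disabling tone mapping passes values below 1.0 through unchanged.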

RECOMMENDATION: Since I'm not a programmer, I didn't review the code in depth. My judgment is purely visual: if it looks good, I approve it and move on with development. I recommend reviewing the code yourself, because I'm not entirely convinced that these cloud effects meet professional standards. The code I presented to the AI to create my program is shown below:

Hello, fellow Three.js developers!

This is a fully procedural, scalable volumetric cloud generator, built as a single self-contained HTML file.

This project was developed with the help of AI as a way for me to dive deep into GLSL, ray marching, and advanced rendering techniques. I’m sharing it as a free resource for anyone who wants to learn from it, experiment with it, or adapt the techniques for their own projects.

Live Demo & Source Code

- Live Demo: https://leoawen.github.io/volumetric-clouds/

- GitHub Repo: https://github.com/leoawen/volumetric-clouds

Key Features

-

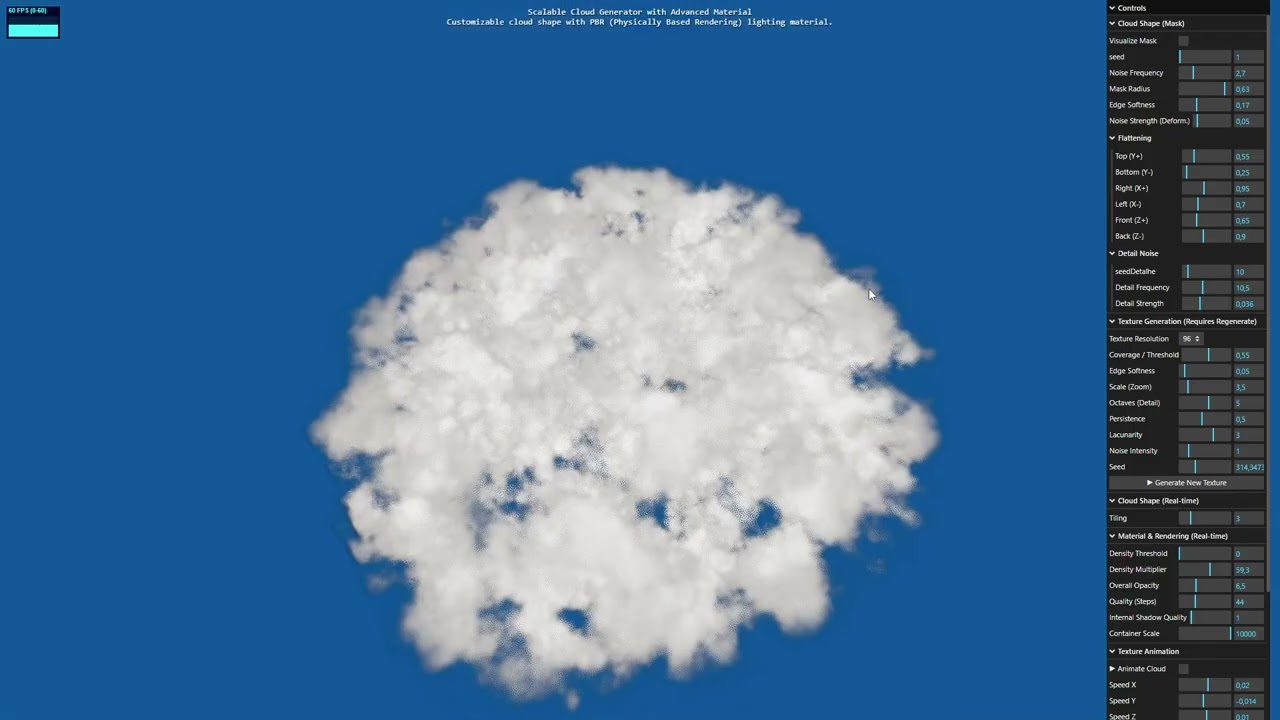

Procedural 3D Texture Baking: The core cloud density is generated using FBM noise and baked into a 3D texture on the fly. This pre-calculation is key for achieving real-time performance. You can regenerate new cloud shapes directly from the GUI.

-

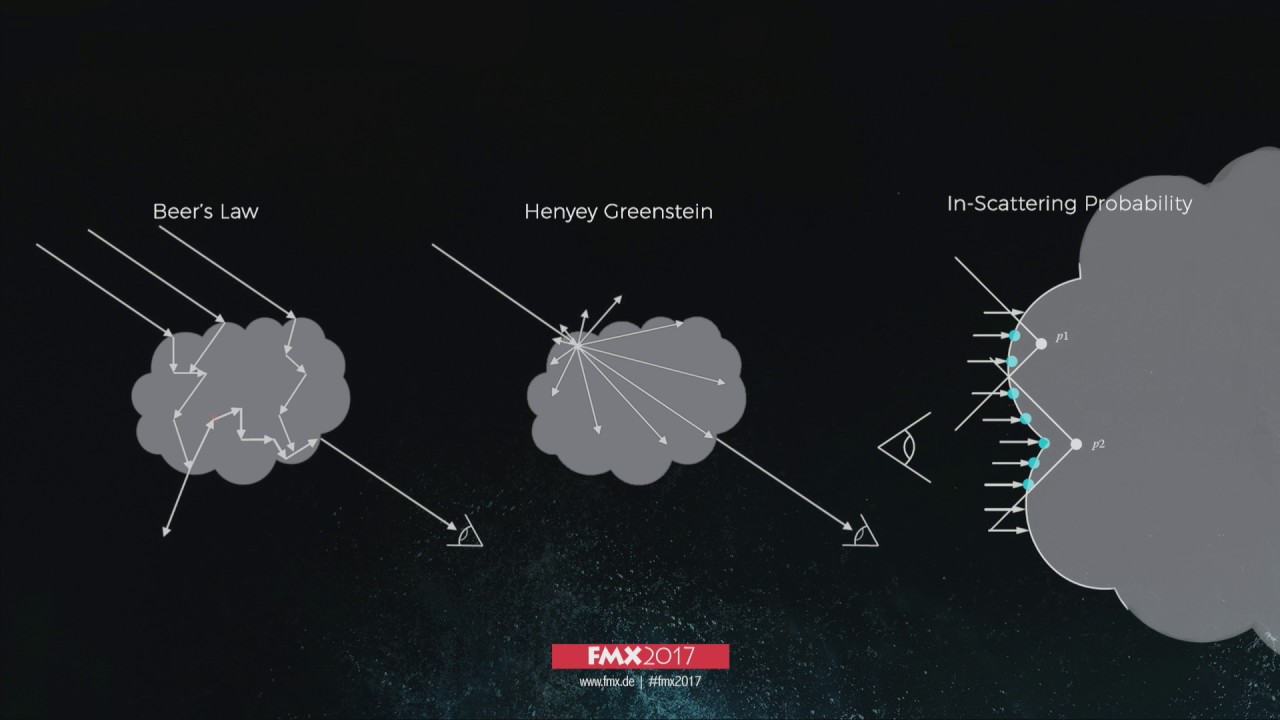

Volumetric Ray Marching: The cloud is rendered using a ray marching algorithm inside a GLSL shader, simulating light scattering and absorption for a realistic volumetric effect.

- Customizable Cloud Shape: The overall cloud shape is defined by a deformable spherical mask with two layers of noise. All parameters (flattening, radius, noise strength, etc.) can be controlled from the lil-gui panel.

- Scene Integration & Occlusion: The clouds interact correctly with other solid objects in the scene. A depth pre-pass ensures that ray marching stops when it hits a solid object, so you can fly through and around the cloud realistically.

- Post-Processing God Rays: A multi-pass post-processing effect generates crepuscular rays (God Rays) that are realistically occluded by both solid objects and the dense parts of the cloud itself.

- Self-Contained & Commented: The entire application runs from a single .html file with no external dependencies besides Three.js loaded from a CDN. The code, especially the GLSL shader, is heavily commented in English to explain the process.
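The texture-baking and cloud-shape ideas above can be sketched on the CPU in plain JavaScript. This is a minimal illustration under stated assumptions, not the project's code: it uses simple hash-based value noise for the FBM, a single noise layer in the spherical mask (the project uses two), and hypothetical parameter names (`radius`, `flatten`, `noiseStrength`, `scale`).

```javascript
// Sketch: bake FBM noise, shaped by a deformable spherical mask, into a
// flat array suitable for a 3D texture. Assumptions: hash-based value noise,
// one noise layer in the mask, and hypothetical parameter names.

// Deterministic pseudo-random value in [0, 1) for an integer lattice point.
function hash3(x, y, z, seed) {
  let h = seed | 0;
  h = Math.imul(h ^ x, 0x27d4eb2d);
  h = Math.imul(h ^ y, 0x165667b1);
  h = Math.imul(h ^ z, 0x9e3779b1);
  h ^= h >>> 15;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  return (h >>> 0) / 4294967296;
}

const smooth = (t) => t * t * (3 - 2 * t);
const lerp = (a, b, t) => a + (b - a) * t;

// Trilinearly interpolated value noise with smoothstep easing.
function valueNoise(x, y, z, seed) {
  const xi = Math.floor(x), yi = Math.floor(y), zi = Math.floor(z);
  const u = smooth(x - xi), v = smooth(y - yi), w = smooth(z - zi);
  const c = (dx, dy, dz) => hash3(xi + dx, yi + dy, zi + dz, seed);
  const slice0 = lerp(lerp(c(0, 0, 0), c(1, 0, 0), u), lerp(c(0, 1, 0), c(1, 1, 0), u), v);
  const slice1 = lerp(lerp(c(0, 0, 1), c(1, 0, 1), u), lerp(c(0, 1, 1), c(1, 1, 1), u), v);
  return lerp(slice0, slice1, w);
}

// Fractal Brownian motion: each octave doubles frequency and halves amplitude.
function fbm(x, y, z, seed, octaves = 4) {
  let sum = 0, amp = 0.5, freq = 1, norm = 0;
  for (let i = 0; i < octaves; i++) {
    sum += amp * valueNoise(x * freq, y * freq, z * freq, seed + i);
    norm += amp;
    amp *= 0.5;
    freq *= 2;
  }
  return sum / norm; // weighted average, so it stays within [0, 1]
}

// Flattened sphere whose radius is perturbed by noise:
// 1 at the center, falling linearly to 0 at the perturbed radius.
function sphereMask(px, py, pz, radius, flatten, noise, noiseStrength) {
  const d = Math.hypot(px, py * flatten, pz);
  const r = radius * (1 + noiseStrength * (noise - 0.5));
  return Math.min(Math.max(1 - d / r, 0), 1);
}

// Density stored x-fastest (index = x + size * (y + size * z)), the order a
// WebGL 3D texture upload expects.
function bakeDensity(size, seed, { radius = 0.8, flatten = 1.5, noiseStrength = 0.4, scale = 4 } = {}) {
  const data = new Float32Array(size * size * size);
  for (let k = 0; k < size; k++) {
    for (let j = 0; j < size; j++) {
      for (let i = 0; i < size; i++) {
        // Voxel centers mapped to the cube [-1, 1]^3.
        const px = ((i + 0.5) / size) * 2 - 1;
        const py = ((j + 0.5) / size) * 2 - 1;
        const pz = ((k + 0.5) / size) * 2 - 1;
        const n = fbm(px * scale, py * scale, pz * scale, seed);
        data[i + size * (j + size * k)] = n * sphereMask(px, py, pz, radius, flatten, n, noiseStrength);
      }
    }
  }
  return data;
}

const baked = bakeDensity(32, 1234);
console.log(baked.length); // 32768 voxels
```

In three.js, a `Float32Array` laid out this way can typically be uploaded as `new THREE.Data3DTexture(data, size, size, size)` with `format` set to `THREE.RedFormat` and `type` set to `THREE.FloatType`, then sampled in GLSL through a `sampler3D`. Baking once means the shader does one texture fetch per ray-march step instead of re-evaluating several noise octaves.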
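The ray-marching and depth-occlusion features can be sketched for a single ray in plain JavaScript. Everything here is an assumption for illustration: an analytic soft-sphere density stands in for the baked 3D texture, light is integrated with Beer-Lambert absorption and an isotropic single-scattering term, and the hypothetical `sceneDepth` argument is the distance along the ray to the nearest solid. In a real shader, the value read from the depth texture would first have to be converted to that distance; three.js's `<packing>` shader chunk provides helpers such as `perspectiveDepthToViewZ` for the first part of that conversion.

```javascript
// Sketch: march one view ray through a density field with Beer-Lambert
// absorption, a short shadow march toward the sun, and an early stop at the
// nearest solid surface. Assumptions: analytic density, isotropic single
// scattering, and hypothetical step counts and coefficients.

// Hypothetical stand-in for the baked texture: a soft unit sphere at the origin.
function density(p) {
  return Math.max(0, 1 - Math.hypot(p[0], p[1], p[2]));
}

const add = (a, b) => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const scale = (a, s) => [a[0] * s, a[1] * s, a[2] * s];

// Fraction of sunlight reaching point p through the cloud between p and the sun.
function lightTransmittance(p, sunDir, sigma, steps = 6, stepSize = 0.2) {
  let opticalDepth = 0;
  for (let i = 1; i <= steps; i++) {
    opticalDepth += density(add(p, scale(sunDir, i * stepSize))) * stepSize;
  }
  return Math.exp(-sigma * opticalDepth);
}

// sceneDepth: distance along the ray to the nearest solid object
// (Infinity if none), as reconstructed from the depth pre-pass.
function marchRay(origin, dir, sunDir, sceneDepth, { far = 4, steps = 64, sigma = 4.0 } = {}) {
  const stepSize = far / steps;
  const end = Math.min(far, sceneDepth); // solids cut the ray short
  let transmittance = 1;
  let light = 0;
  for (let t = stepSize * 0.5; t < end; t += stepSize) {
    const p = add(origin, scale(dir, t));
    const rho = density(p);
    if (rho <= 0) continue;
    // Beer-Lambert: fraction of light surviving this segment.
    const segmentT = Math.exp(-sigma * rho * stepSize);
    // Light scattered toward the camera here, shadowed toward the sun.
    light += transmittance * (1 - segmentT) * lightTransmittance(p, sunDir, sigma);
    transmittance *= segmentT;
    if (transmittance < 0.01) break; // nearly opaque: stop early
  }
  return { light, transmittance };
}

const clearView = marchRay([0, 0, -3], [0, 0, 1], [0, 1, 0], Infinity);
const solidInside = marchRay([0, 0, -3], [0, 0, 1], [0, 1, 0], 2.5);
console.log(clearView.transmittance, solidInside.transmittance);
```

Because `end` is clamped to `sceneDepth`, a solid object inside the cloud simply truncates the integral, which is what keeps cloud from being drawn in front of objects that are closer to the camera. The early exit when transmittance drops below 1 percent is a common optimization for dense regions.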

How It Works: The Rendering Pipeline

The final image is created through a series of rendering passes:

1. Depth Pre-Pass: Renders only the solid objects (spheres, ground) to a depth texture.
2. God Rays Occlusion Pass: Renders a black-and-white mask. The sun is white, and anything that blocks light (solid objects and the cloud volume) is rendered black.
3. God Rays Generation: A post-processing shader reads the occlusion mask and generates the light shafts.
4. Main Scene Pass: The solid objects and the volumetric cloud are rendered. The cloud’s ray marching shader reads the depth texture from Step 1 to handle occlusion correctly.
5. Final Composite: The God Rays texture is additively blended on top of the main scene render.
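The occlusion mask, god-rays generation and final composite passes can be sketched on a tiny grayscale image in plain JavaScript. This loosely follows the radial-blur formulation popularized by GPU Gems 3 ("Volumetric Light Scattering as a Post-Process"); the sample count and the `density`, `decay`, `weight` and `exposure` values are hypothetical and may differ from what the project uses. Images are `Float32Array`s with values in [0, 1], and `occlusion` plays the role of the black-and-white mask.

```javascript
// Sketch: screen-space radial blur for god rays, then an additive composite.
// Assumptions: small grayscale Float32Array images in [0, 1] and hypothetical
// values for samples, density, decay, weight and exposure.

// Nearest-neighbour lookup with clamp-to-edge addressing.
function sampleNearest(img, w, h, x, y) {
  const xi = Math.min(w - 1, Math.max(0, Math.round(x)));
  const yi = Math.min(h - 1, Math.max(0, Math.round(y)));
  return img[yi * w + xi];
}

// occlusion: 1 where the sun is visible, 0 where solids or dense cloud block it.
// (sunX, sunY): the sun's position in pixel coordinates.
function godRays(occlusion, w, h, sunX, sunY,
                 { samples = 32, density = 0.9, decay = 0.95, weight = 0.4, exposure = 0.3 } = {}) {
  const out = new Float32Array(w * h);
  for (let y = 0; y < h; y++) {
    for (let x = 0; x < w; x++) {
      // Walk from the pixel toward the sun, accumulating the mask.
      const dx = ((x - sunX) * density) / samples;
      const dy = ((y - sunY) * density) / samples;
      let sx = x, sy = y;
      let illuminationDecay = 1;
      let sum = 0;
      for (let i = 0; i < samples; i++) {
        sx -= dx;
        sy -= dy;
        sum += sampleNearest(occlusion, w, h, sx, sy) * illuminationDecay * weight;
        illuminationDecay *= decay; // farther samples contribute less
      }
      out[y * w + x] = sum * exposure;
    }
  }
  return out;
}

// Final composite: add the rays on top of the scene color and clamp to 1.
function compositeAdditive(scene, rays) {
  return scene.map((c, i) => Math.min(1, c + rays[i]));
}

// 16x16 example: sun at the top center, a small dark occluder below it.
const W = 16, H = 16;
const mask = new Float32Array(W * H).fill(1);
for (let y = 4; y <= 5; y++) for (let x = 6; x <= 9; x++) mask[y * W + x] = 0;
const rays = godRays(mask, W, H, 8, 0);
const composited = compositeAdditive(new Float32Array(W * H).fill(0.2), rays);
console.log(rays[12 * W + 8], composited[12 * W + 8]);
```

Pixels whose path toward the sun crosses the black occluder accumulate fewer bright samples, which is what produces visible shafts and shadows in the rays; `decay` makes samples far from the pixel count less.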

This project is for anyone interested in learning more about GLSL shaders, volumetric effects, or advanced rendering pipelines in Three.js.

I hope you find this resource useful! All feedback, questions, and suggestions are welcome.

Happy coding!

– Leonardo Soares Gonçalves

References: