Overlapping or intersecting transparent objects are among the most problematic things to render in graphics. Approaches like alpha hash or dithered rendering and weighted-blended transparency can be fast, but each has its own visual artifacts to be aware of that may make it undesirable.

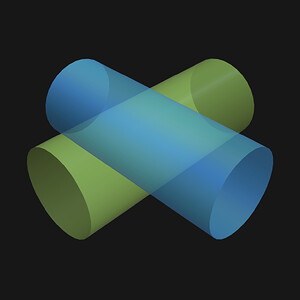

Depth peeling, on the other hand, can be fairly performance intensive but results in correct transparency overlap, even for intersecting objects. Here are a few examples from a demo I wrote last year:

This works by progressively rendering each subsequent overlapping layer of the transparent objects and compositing them together, hence the name “peeling”. Each pass uses the depth captured by the previous pass to skip the surfaces that have already been rendered. Here’s another demonstration on a more complex model with multiple layers of geometry, toggling between the depth peeling approach, typical transparency rendering, and fading the opacity. You can see that the model fades smoothly to a fully opaque model with no visual popping or artifacts:
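To make the peeling idea concrete, here is a minimal CPU sketch for a single pixel. It is not the code from the demo: the `peel_pixel` function, the fragment tuple layout, and the front-to-back compositing are all assumptions chosen for illustration. On the GPU each loop iteration would be a full render pass that compares against the previous pass's depth texture.

```python
from typing import List, Tuple

# Hypothetical fragment layout: (depth, (r, g, b), alpha).
# Smaller depth means closer to the camera.
Fragment = Tuple[float, Tuple[float, float, float], float]


def peel_pixel(
    fragments: List[Fragment],
    max_layers: int,
    background: Tuple[float, float, float] = (0.0, 0.0, 0.0),
) -> Tuple[float, float, float]:
    """Composite one pixel by peeling layers front to back."""
    color = [0.0, 0.0, 0.0]      # accumulated (premultiplied) color
    transmittance = 1.0          # fraction of light from behind still visible
    last_depth = float("-inf")   # depth of the layer peeled in the previous pass

    for _ in range(max_layers):
        # One "peel" pass: keep only fragments strictly behind the last layer
        # and take the nearest one. Fragments at exactly the same depth as the
        # previous layer are skipped, which is a limitation of this sketch.
        candidates = [f for f in fragments if f[0] > last_depth]
        if not candidates:
            break
        depth, rgb, alpha = min(candidates, key=lambda f: f[0])

        # Front-to-back "under" compositing of the peeled layer.
        for i in range(3):
            color[i] += transmittance * alpha * rgb[i]
        transmittance *= 1.0 - alpha
        last_depth = depth

    # Whatever light is left over comes from the opaque background.
    return tuple(color[i] + transmittance * background[i] for i in range(3))


red = (1.0, (1.0, 0.0, 0.0), 0.5)
blue = (2.0, (0.0, 0.0, 1.0), 0.5)
white = (1.0, 1.0, 1.0)

# Submission order does not matter, which is the point of the technique.
print(peel_pixel([red, blue], 4, white))
print(peel_pixel([blue, red], 4, white))
```

With too few layers the fragments that were never peeled are simply dropped, which is why the layer count matters for correctness.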

For detailed models like architectural or CAD models that use different kinds of draw-throughs, this type of correct transparency can improve clarity of the result.

One quirk is that you need to specify the number of “layers” to render ahead of time, and specifying too many can have a noticeable impact on performance, so some tuning is required depending on the models being displayed. With enough layers, though, complex transparent 3D models can “just work” if the performance is acceptable and quality and correctness are important. Otherwise the technique is best used with a limited number of layers. With WebGPU it may be possible to automatically determine the required number of layers.
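One way to think about the "right" number of layers is as the largest number of transparent fragments that land on any single pixel. The sketch below counts that on the CPU; the `required_layers` name and the list-of-pixel-coordinates input are hypothetical, and a GPU version (for example one that increments a per-pixel counter) is only an assumption about how this could be automated, not something the demo does.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def required_layers(
    fragment_pixels: Iterable[Tuple[int, int]],
    cap: Optional[int] = None,
) -> int:
    """Return the deepest stack of transparent fragments over any pixel.

    fragment_pixels holds one (x, y) entry per transparent fragment drawn.
    cap optionally clamps the result to keep the pass count affordable.
    """
    counts = Counter(fragment_pixels)
    deepest = max(counts.values(), default=0)
    return deepest if cap is None else min(deepest, cap)


# Three fragments cover pixel (0, 0) and one covers pixel (1, 0).
hits = [(0, 0), (0, 0), (1, 0), (0, 0)]
print(required_layers(hits))         # layers needed for a fully correct result
print(required_layers(hits, cap=2))  # clamped to a performance budget
```

Clamping trades correctness in the densest pixels for a predictable number of passes.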

With the same data and some shader work, the multiple layers can also be used for more complex effects. Here’s another experiment from years ago rendering a colored glass effect using a similar technique, darkening the color based on the depth between layers:
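As a rough illustration of darkening by the depth between layers, the sketch below uses Beer-Lambert style exponential absorption. That formula is an assumption swapped in for illustration and may not match what the experiment's shader does; `glass_tint` and its parameters are hypothetical names.

```python
import math
from typing import Tuple


def glass_tint(
    entry_depth: float,
    exit_depth: float,
    absorption: Tuple[float, float, float],
) -> Tuple[float, float, float]:
    """Per-channel transmittance for light crossing one slab of glass.

    entry_depth and exit_depth are the depths of two consecutive peeled
    layers (front face and back face); absorption is a per-channel
    coefficient where larger values absorb more of that channel.
    """
    thickness = max(exit_depth - entry_depth, 0.0)
    return tuple(math.exp(-a * thickness) for a in absorption)


# Glass that absorbs red and green more than blue reads as blue,
# and gets darker as the slab gets thicker.
thin = glass_tint(1.0, 1.5, (2.0, 1.0, 0.1))
thick = glass_tint(1.0, 3.0, (2.0, 1.0, 0.1))
print(thin, thick)
```

The resulting tint would multiply whatever color is seen through that slab.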

You can check out the repository here if you want to see or use some of the depth peeling code.

Some other “order independent transparency” techniques that may be interesting to investigate in the future are Per-Pixel Linked List transparency (or k-buffer transparency) and “Multilayer Alpha Blending”. Both techniques keep some variation of a per-pixel list of transparent fragments during rendering, which is then sorted by depth and composited. New features in WebGPU may make some of these techniques more viable, as well.
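The "collect, then sort" idea behind the list-based techniques can be sketched for a single pixel as follows. This is a simplified CPU illustration under assumed names (`resolve_fragment_list`, a `(depth, rgb, alpha)` tuple per fragment), not an implementation of either technique, and it ignores the memory limits that make the GPU versions tricky.

```python
from typing import List, Tuple

# Hypothetical fragment layout: (depth, (r, g, b), alpha).
Fragment = Tuple[float, Tuple[float, float, float], float]


def resolve_fragment_list(
    fragments: List[Fragment],
    background: Tuple[float, float, float] = (0.0, 0.0, 0.0),
) -> Tuple[float, float, float]:
    """Sort a pixel's collected fragments and blend them back to front."""
    color = list(background)
    # Farthest fragment first, then the usual "over" blend for each one.
    for _depth, rgb, alpha in sorted(fragments, key=lambda f: f[0], reverse=True):
        color = [alpha * rgb[i] + (1.0 - alpha) * color[i] for i in range(3)]
    return tuple(color)


# Fragments arrive in draw order, which is arbitrary with respect to depth.
collected = [
    (2.0, (0.0, 0.0, 1.0), 0.5),  # blue, farther away
    (1.0, (1.0, 0.0, 0.0), 0.5),  # red, closer
]
print(resolve_fragment_list(collected, background=(1.0, 1.0, 1.0)))
```

Unlike depth peeling, this needs only one geometry pass, at the cost of storing every fragment for every pixel.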