Hey Guys, I’ve been playing with the virtual geometry idea for a couple of weeks now and wanted to share some results.

What is virtual geometry?

- it’s an approximation of real geometry, such that the viewer can’t tell the difference between the “real” geometry and the approximation.

It’s a lot like LOD (level of detail), but it’s continuous. Typical LOD systems have a fixed set of pre-defined levels, for example LOD0, LOD1 and LOD2, where each successive level has fewer triangles. Here’s a picture:

The idea is to simplify the mesh on the fly so that triangles smaller than, say, 1 pixel are removed.

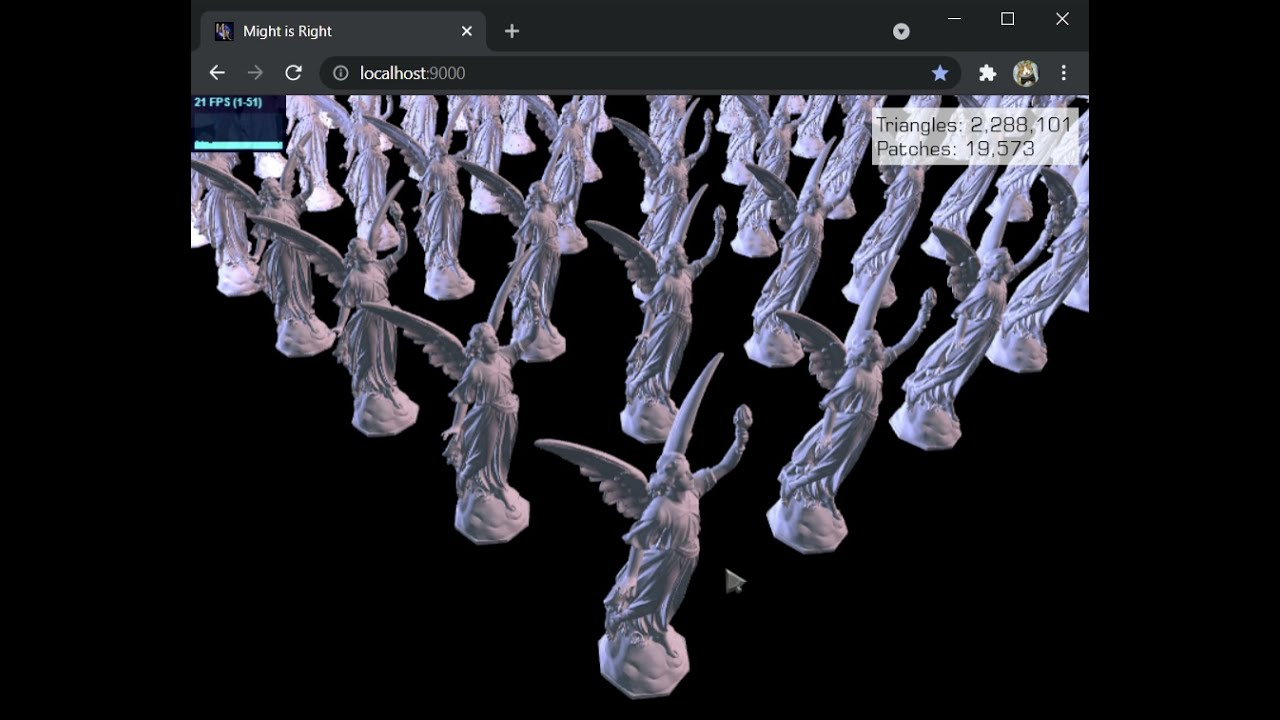

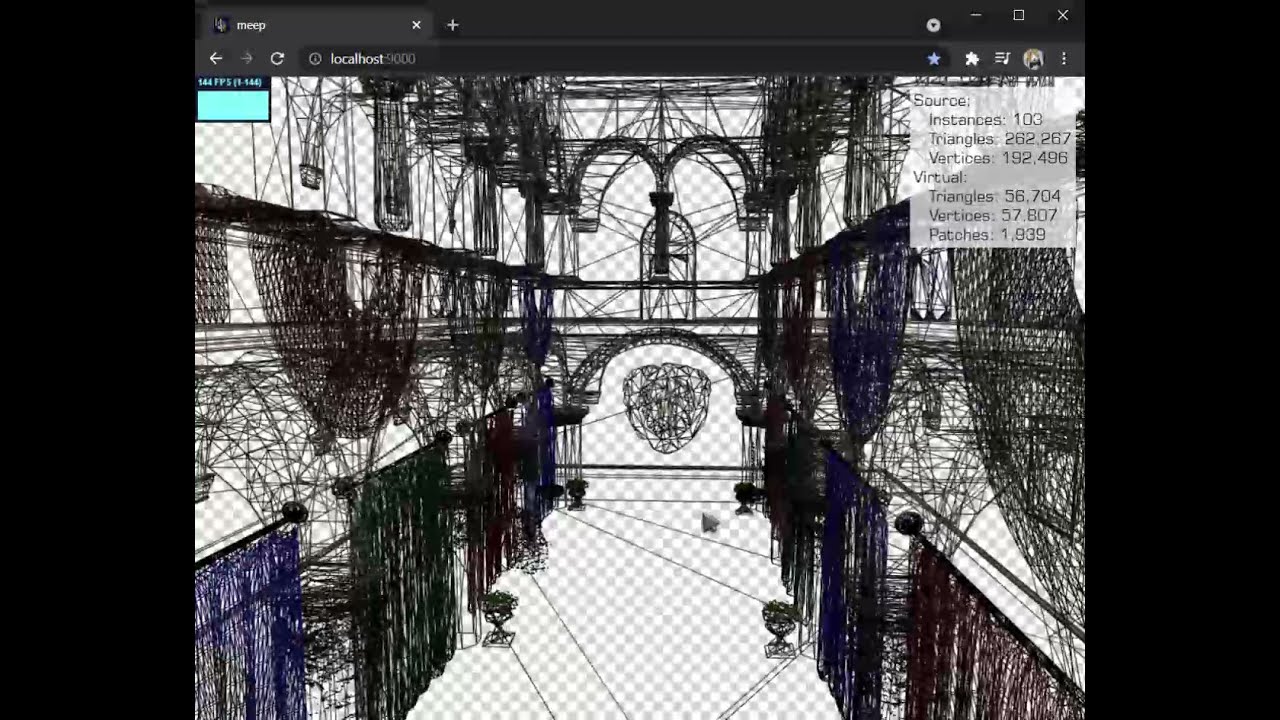
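To make the “smaller than 1 pixel” criterion a bit more concrete, here’s a minimal sketch of how on-screen size can be estimated for a perspective camera. It is an illustration rather than code from my system: the function names, the vertical-FOV camera model and the 1-pixel default threshold are all assumptions.

```python
import math


def projected_size_px(world_size, distance, fov_y_rad, viewport_height_px):
    """Approximate on-screen height, in pixels, of a feature that is
    `world_size` units tall and `distance` units in front of the camera.

    Assumes a simple perspective camera with vertical field of view
    `fov_y_rad` (hypothetical helper, for illustration only).
    """
    # Height of the visible slice of the world at this distance.
    visible_height = 2.0 * distance * math.tan(fov_y_rad / 2.0)
    return world_size / visible_height * viewport_height_px


def is_subpixel(world_size, distance, fov_y_rad, viewport_height_px,
                threshold_px=1.0):
    """True if the feature would cover less than `threshold_px` pixels."""
    size = projected_size_px(world_size, distance, fov_y_rad, viewport_height_px)
    return size < threshold_px


if __name__ == "__main__":
    fov = math.radians(60)
    # A 1 cm triangle edge on a 1080-pixel-tall viewport, near and far.
    print(is_subpixel(0.01, 1.0, fov, 1080))   # close to the camera
    print(is_subpixel(0.01, 20.0, fov, 1080))  # far from the camera
```

The same feature that covers several pixels up close drops below one pixel as the camera moves away, which is the point at which it becomes a candidate for removal.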

Here are a few screenshots from my work so far, for demonstration.

What you see here is the “real” source geometry on the left, and on the right is the “virtual” geometry with a debug shader. The source geometry is exactly 100,000 triangles. At this resolution and camera angle the virtual geometry also has 100,000 triangles. But as we move the camera further away, we start to drop triangles.

Here we have ~60,000 triangles

A bit further and we get ~10,000 triangles

1000 triangles

That is, at this distance it takes the GPU roughly the same amount of effort, in terms of triangle count, to draw 100 of these geometries using my Virtual Geometry system as it does to draw the original mesh once (1,000 triangles * 100 = 100,000, the same as the original geometry).
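The same arithmetic, applied to the triangle counts from the screenshots above, as a tiny sketch. The function name is made up for illustration, and it only counts triangles, so it ignores other costs such as per-draw-call overhead.

```python
def instances_within_budget(triangle_budget, triangles_per_instance):
    """How many copies of a mesh fit into a fixed triangle budget."""
    return triangle_budget // triangles_per_instance


if __name__ == "__main__":
    budget = 100_000  # triangle count of the original source mesh
    for simplified in (100_000, 60_000, 10_000, 1_000):
        copies = instances_within_budget(budget, simplified)
        print(f"{simplified} triangles each: {copies} instance(s)")
```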

To stress the earlier point: this LOD is continuous, so you won’t see popping of any kind. As far as the eye can tell, the mesh looks the same from any angle or distance, while the actual number of triangles will typically be only a fraction of the original.

The technique isn’t exactly new; it’s been around in various shapes and forms for a long time now. One of the most famous applications is the ROAM algorithm that’s typically used for terrain. Each approach has its pros and cons. What I’m doing is much more flexible than ROAM, since it works with generic static meshes, but that comes at an engineering cost. My approach, like many others, requires special pre-processing of the mesh to create a custom data structure for run-time optimization. As a result, run-time optimization is pretty much instant. The pre-processing takes fairly long right now, around 2s for this geometry.
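For anyone wondering why pre-processing can make the run-time part so cheap, here’s a generic sketch in the style of progressive meshes. It is an illustration only and not necessarily the data structure used here. It assumes pre-processing produced a list of edge collapses sorted by the geometric error each one introduces (`collapse_errors`, a hypothetical name), and that each collapse removes two triangles. Picking a detail level is then just a binary search.

```python
from bisect import bisect_right


def triangle_count_for_error(collapse_errors, base_triangles, allowed_error):
    """Number of triangles left after applying every precomputed collapse
    whose error does not exceed `allowed_error`.

    `collapse_errors` must be sorted in ascending order (assumed to be
    produced offline). Each collapse is assumed to remove two triangles,
    which holds for an interior edge of a manifold mesh.
    """
    # Binary search: how many collapses are cheap enough to apply?
    applicable = bisect_right(collapse_errors, allowed_error)
    return base_triangles - 2 * applicable


if __name__ == "__main__":
    # Made-up errors in world units for a tiny 10-triangle mesh.
    errors = [0.001, 0.002, 0.004, 0.008]
    for allowed in (0.0002, 0.003, 0.02):
        print(allowed, triangle_count_for_error(errors, 10, allowed))
```

Here `allowed_error` would be the world-space size of roughly one pixel at the current camera distance, so the further away the mesh is, the more collapses get applied.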

This is very much a work in progress, and there are still a lot of things to do before it’s ready to be used in production. Still, I think it’s pretty cool, so I wanted to share a bit of the progress.