TL;DR; demo

Why Virtual Textures?

The basic idea is to take a very large texture that would typically not fit into memory, say 16,000 by 16,000 pixels, and still be able to use it. Why would you want to? A typical example is ground textures in games. If you have played a game like Far Cry, or, heck, even World of Warcraft, you’ll have noticed that the ground doesn’t look the same everywhere in a seemingly vast world. Some people might have wondered “How did they fit such a big texture in memory?”. The answers vary, and traditionally we would have used Splat Mapping to achieve a lot of variation. But that’s a cheat, and it doesn’t scale beyond a certain level.

What do we do if we want a truly large texture with a lot of custom detail? This is what virtual textures try to address.

Probably the most famous example of Virtual Texture usage is Google Maps. The actual source textures are ~20 Petabytes, that’s 20,000 Terabytes. Yet you don’t have to wait for all of that to load when you use the service, and it runs pretty quickly.

The technique was, arguably, first popularized and properly defined by John Carmack when working on Rage at id Software. According to John, he wanted to enable a high-resolution world, where you could walk up to a rock and still see a ton of detail. On top of that, the team wanted to bake high-resolution light maps for the entire game. I’m sure the fact that it’s a really cool tech had nothing to do with it [cough].

At the time, Carmack called the tech “Mega Texture”. Later on, researchers settled on calling the tech “Virtual Texture” or “Sparse Virtual Texture”, although if you ask me - the “Sparse” part is pretty redundant.

What are Virtual Textures?

A Virtual Texture does for a Rendering Engine what Virtual Memory does for a typical Operating System.

Virtual Memory operates on “Pages”. A page is a fixed-size chunk of memory, something like 4 KB. Following the analogy, a Virtual Texture operates on “tiles”, which are fixed-size pieces of the original texture.
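To make the analogy concrete, here is a minimal sketch of a "page table" lookup for tiles. It is not the demo's code: the names (`PageTableEntry`, `pageTable`, `virtualToPhysical`) are made up for illustration, the 128-pixel tile size is borrowed from the demo described later, and mip levels are left out entirely to keep it short.

```typescript
// Hypothetical illustration of the virtual-memory analogy; not the demo's code.
interface PageTableEntry {
  // Where the tile currently lives in the physical texture, in tile units.
  slotX: number;
  slotY: number;
}

const TILE_SIZE = 128; // pixels per tile side (assumed, matches the demo's tiles)

// Sparse page table: only resident tiles have an entry, keyed by "tileX,tileY".
const pageTable = new Map<string, PageTableEntry>();

// Translates a pixel coordinate in the huge virtual texture into a pixel
// coordinate in the much smaller physical texture.
function virtualToPhysical(vx: number, vy: number): { x: number; y: number } | null {
  const tileX = Math.floor(vx / TILE_SIZE);
  const tileY = Math.floor(vy / TILE_SIZE);
  const entry = pageTable.get(`${tileX},${tileY}`);
  if (entry === undefined) {
    return null; // the equivalent of a page fault: the tile is not resident yet
  }
  return {
    x: entry.slotX * TILE_SIZE + (vx % TILE_SIZE),
    y: entry.slotY * TILE_SIZE + (vy % TILE_SIZE),
  };
}
```

Just like with virtual memory, a miss is not an error. It is a signal that something needs to be fetched, and until then the renderer has to make do with whatever it already has.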

Because of this, Virtual Texture tech is typically split into offline and online parts. The offline part refers to preparing a set of tiles out of the original texture and offering various authoring tools. The online part is the rendering, basically.

I’m not going to talk too much about the offline part here, except to say that it gives us an opportunity to create high-quality mip-maps. For this demo I’ve coded up a Kaiser filter (same as Unity) with source supersampling, that is, the filter takes the original image as input every time, instead of using a previously downsampled image.
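For the curious, here is a rough sketch of what "Kaiser filter with source supersampling" can look like, reduced to a single row of grayscale samples. This is not the demo's filter: the function names are hypothetical, and the `radius = 3` and `alpha = 4` defaults are assumptions I picked for illustration. A 2D version would apply the same thing along rows and then columns.

```typescript
// Zeroth-order modified Bessel function of the first kind, via its power series.
function besselI0(x: number): number {
  let sum = 1;
  let term = 1;
  const halfX = x / 2;
  for (let k = 1; k < 32; k++) {
    term *= (halfX / k) * (halfX / k);
    sum += term;
    if (term < 1e-12 * sum) break;
  }
  return sum;
}

function sinc(x: number): number {
  if (x === 0) return 1;
  const px = Math.PI * x;
  return Math.sin(px) / px;
}

// Kaiser-windowed sinc; zero outside [-radius, radius].
// `radius` and `alpha` are assumed values, not taken from the demo.
function kaiserSinc(x: number, radius: number, alpha: number): number {
  const t = x / radius;
  if (Math.abs(t) >= 1) return 0;
  return (sinc(x) * besselI0(alpha * Math.sqrt(1 - t * t))) / besselI0(alpha);
}

// Downsamples one row of the ORIGINAL image straight to `dstWidth` samples.
function downsampleRow(src: Float32Array, dstWidth: number, radius = 3, alpha = 4): Float32Array {
  const scale = src.length / dstWidth; // source pixels per destination pixel
  const dst = new Float32Array(dstWidth);
  for (let i = 0; i < dstWidth; i++) {
    const center = (i + 0.5) * scale; // destination pixel center, in source space
    const first = Math.floor(center - radius * scale);
    const last = Math.ceil(center + radius * scale);
    let sum = 0;
    let weightSum = 0;
    for (let j = first; j <= last; j++) {
      const clamped = Math.min(src.length - 1, Math.max(0, j)); // clamp at the edges
      const w = kaiserSinc((j + 0.5 - center) / scale, radius, alpha);
      sum += src[clamped] * w;
      weightSum += w;
    }
    dst[i] = sum / weightSum; // normalize so flat areas stay flat
  }
  return dst;
}
```

The "source supersampling" part is in how you call it: every mip level is produced with `downsampleRow(original, width)` for width 4096, 2048, 1024 and so on, rather than feeding the previous level's output into the next one.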

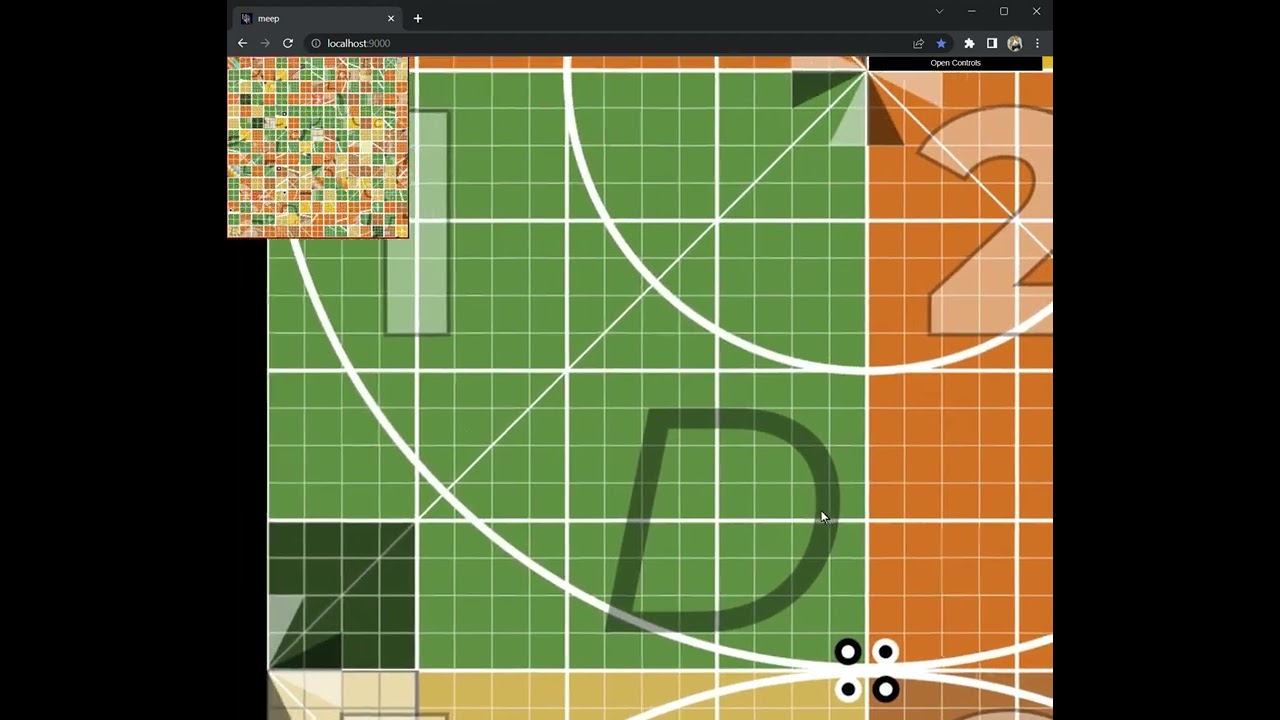

Here’s a 512 MIP of an 8k texture generated by WebGL:

And here’s one generated using kaiser filter:

Here’s a closeup for reference:

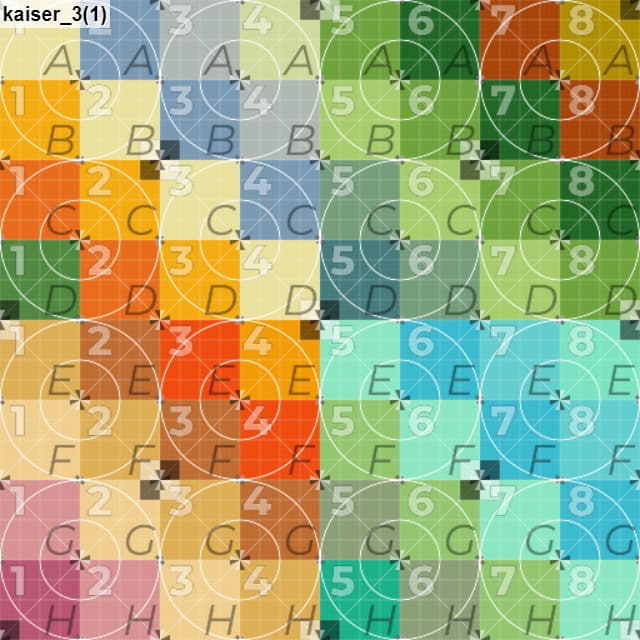

Here’s what the final breakdown of that 8k texture looks like:

Incidentally, there are exactly 5461 tiles, 128x128 pixels each. Why that many?

8192 / 128 = 64, that is, the texture needs to be cut into 64 tiles along each axis, which gives 64*64 = 4096 tiles in total. Then we scale the image down to half the size and repeat the process all the way down to a resolution of a single tile: 4096 / 128 = 32, 32*32 = 1024; 2048 / 128 = 16, 16*16 = 256; … Adding up all the levels gives 4096 + 1024 + 256 + 64 + 16 + 4 + 1 = 5461.
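The same count can be written as a few lines of code. This is only a sketch of the arithmetic, with a hypothetical `countTiles` helper, and it assumes a square texture whose size and tile size are both powers of two.

```typescript
// Counts tiles across every mip level, from full resolution down to the
// level that is exactly one tile in size.
// Assumes a square texture with power-of-two texture and tile sizes.
function countTiles(textureSize: number, tileSize: number): number {
  let total = 0;
  for (let size = textureSize; size >= tileSize; size /= 2) {
    const tilesPerSide = size / tileSize;
    total += tilesPerSide * tilesPerSide;
  }
  return total;
}

const tiles128 = countTiles(8192, 128); // 5461
```

Each level has a quarter of the tiles of the one above it, so with 7 levels the sum also works out to (4^7 - 1) / 3 = 5461.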

The first time I saw a virtual texture demo for WebGL was around 2014, I believe, and I was dissatisfied. I ran it on my GTX 660Ti and thought it was slow as sin. The runtime performance was poor and the FPS was super low. The loading times were bad too: tiles were being loaded, but not the right ones. There was no prioritization, so the piece of wall I was looking at a minute ago was still in the download queue, blocking the stuff I was looking at now. I kept thinking about it throughout the years, though, and I even wrote some simple prototypes testing out this idea and that. Recently I had a bit of motivation to put an end-to-end proof of concept together, and I’m pretty happy with how it turned out.

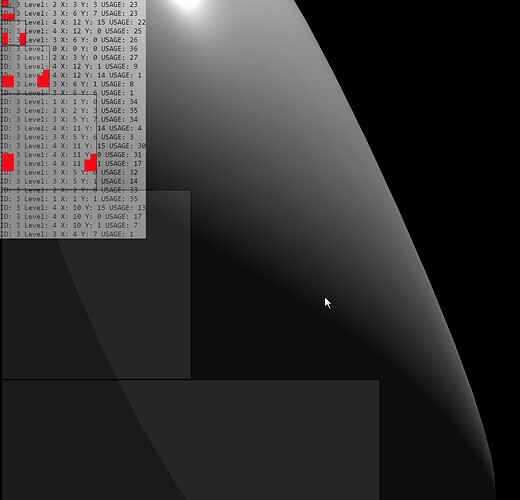

The prototype runs at a very good framerate, at least on my iPhone 8. I implemented a pretty sophisticated algorithm for prioritization of tiles, and I implemented a priority loader with its own queue that keeps the system responsive even in the face of relatively poor network performance. I added a cache in between the network layer and the physical memory layer that lets us reuse tiles without having to download them again. This may seem obvious, but this cache has a limited size, meaning that you as the user get to control how much RAM you’re willing to give up for tile caching versus having to go to the network.
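The actual prioritization in the demo is more involved than I want to get into here, but the core idea behind a loader queue that doesn't get clogged by stale requests can be sketched in a few lines. Everything below (`TileRequestQueue`, `update`, `next`) is a hypothetical simplification, not the demo's implementation: the pending set is simply replaced every frame, so a tile that is no longer wanted can never block one that is.

```typescript
// Hypothetical request queue: the set of pending tiles is replaced every frame.
class TileRequestQueue {
  // tile id -> priority (higher means more urgent)
  private pending = new Map<string, number>();

  // Called once per frame with the tiles the current view wants.
  // Anything missing from `wanted` is dropped from the queue.
  update(wanted: Map<string, number>): void {
    this.pending = new Map(wanted);
  }

  // Removes and returns the most urgent tile id, or undefined when idle.
  next(): string | undefined {
    let bestId: string | undefined;
    let bestPriority = -Infinity;
    for (const [id, priority] of this.pending) {
      if (priority > bestPriority) {
        bestPriority = priority;
        bestId = id;
      }
    }
    if (bestId !== undefined) {
      this.pending.delete(bestId);
    }
    return bestId;
  }

  get size(): number {
    return this.pending.size;
  }
}
```

A loader built on top of this would call `next()` whenever a download slot frees up. The linear scan is fine for a sketch; a heap would be the obvious upgrade if the pending set gets large.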

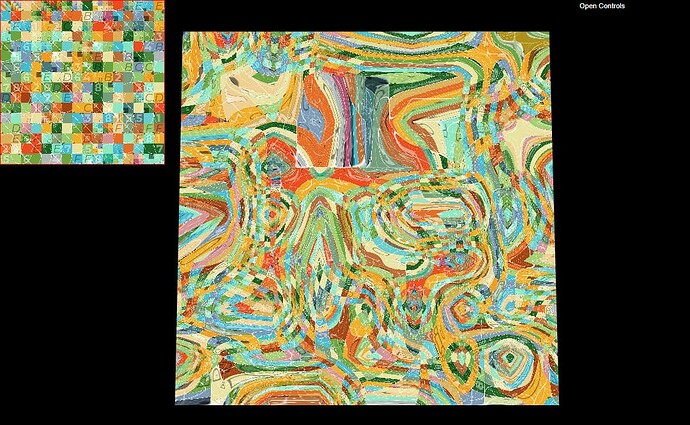

Anyway, I figured that screenshots and videos can only get you so far, and I’m a strong believer in actual demos, so here it is:

The demo uses the aforementioned 8192x8192 texture split into 128x128 tiles. The “Physical Texture” here is 2048x2048, which is just 1/16th of the size of the original texture. The cache is set to 128 MB, which is not enough to contain the entire tile set for this demo: the tile set takes up 128*128*4*5461 bytes = 357.9 MB.
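To put those numbers in perspective: one uncompressed 128x128 RGBA tile is 128*128*4 = 65,536 bytes, so a 128 MB budget holds roughly 2,000 of the 5,461 tiles. Below is a minimal sketch of a cache bounded by bytes rather than by entry count. The `TileCache` class is hypothetical and assumes tiles are stored as raw `Uint8Array` pixel data with least-recently-used eviction, which may or may not match what the demo does internally.

```typescript
// Hypothetical LRU tile cache, bounded by a byte budget.
class TileCache {
  // A Map iterates in insertion order, so the first entry is the least recently used.
  private entries = new Map<string, Uint8Array>();
  private usedBytes = 0;
  private budgetBytes: number;

  constructor(budgetBytes: number) {
    this.budgetBytes = budgetBytes;
  }

  get(id: string): Uint8Array | undefined {
    const tile = this.entries.get(id);
    if (tile !== undefined) {
      // Re-insert to mark the tile as most recently used.
      this.entries.delete(id);
      this.entries.set(id, tile);
    }
    return tile;
  }

  set(id: string, tile: Uint8Array): void {
    const old = this.entries.get(id);
    if (old !== undefined) {
      this.usedBytes -= old.byteLength;
      this.entries.delete(id);
    }
    this.entries.set(id, tile);
    this.usedBytes += tile.byteLength;
    // Evict least recently used tiles until we are back within budget.
    for (const [oldId, oldTile] of this.entries) {
      if (this.usedBytes <= this.budgetBytes) break;
      this.entries.delete(oldId);
      this.usedBytes -= oldTile.byteLength;
    }
  }

  get size(): number {
    return this.entries.size;
  }
}
```

With a budget of `128 * 1024 * 1024` bytes this would keep 2,048 tiles around, and anything evicted simply has to come from the network again the next time it is needed.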

As a stress test I also tried splitting the original texture into 32x32 tiles, which results in 87,381 tiles. It works well too, but the tech is not really intended for this. Typically people use 128x128 or 256x256 tiles, based on the literature that I have read. When you put this on the web, smaller tile sizes make even less sense, as network latency will kill your performance when downloading thousands of tiny files.