Hi, everybody.

I’m having some strange behaviour with colors in shaders.

I first noticed it while trying to implement a highlight effect in my shader.

I was mixing the texture color (using `mix`) with a constant color, `vec4(0, 0.5, 1, 1)`.

I expected this color to render as rgba(0, 127, 255, 1), but it rendered as rgba(0, 188, 255, 1).

For reference:

127 / 255 = 0.4980… ≈ 0.5

188 / 255 = 0.7372… ≈ 0.75
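One hypothesis worth checking against these numbers is sketched below in Python. It assumes (this is not stated in the post) that the renderer passes the shader output through the standard sRGB transfer function (IEC 61966-2-1) before it reaches the screen. Under that assumption, a channel value of 0.5 lands on 188 rather than 127. The helper names `to_8bit` and `linear_to_srgb` are hypothetical.

```python
def to_8bit(c: float) -> int:
    """Map a normalized channel value in [0, 1] to an 8-bit value (round to nearest)."""
    return round(c * 255)


def linear_to_srgb(c: float) -> float:
    """Standard sRGB encoding (IEC 61966-2-1) of a linear channel value in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


# The constant from the shader, vec4(0, 0.5, 1, 1), RGB channels only.
constant = (0.0, 0.5, 1.0)

# Written out unchanged, 0.5 becomes 127.5 (127 or 128 depending on rounding).
print([c * 255 for c in constant])  # [0.0, 127.5, 255.0]

# Assumption: the output is sRGB-encoded before display.
print([to_8bit(linear_to_srgb(c)) for c in constant])  # [0, 188, 255]
```

If the assumption holds, the "wrong" 188 is simply 0.5 after sRGB encoding.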

I also noticed that the behaviour is the same whether the color is read from a texture or defined as a constant in the code.

Things change when the texture is loaded with `SRGBColorSpace`.

In that case, the color loaded from the texture is correct.

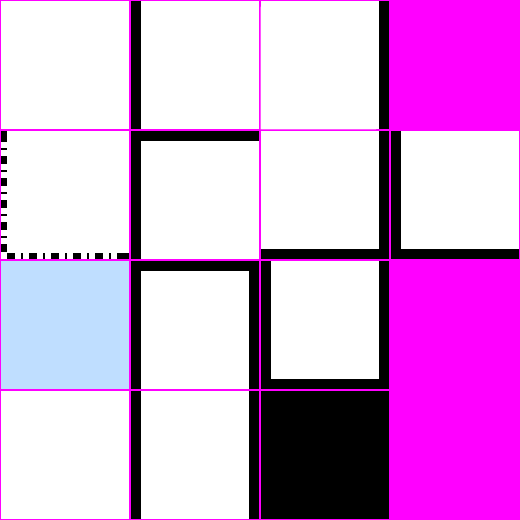

In this example, you can see the difference between the correct color, loaded from a texture with `SRGBColorSpace`, and the wrong color, defined as a constant in the code.

They should be the same color:

rgba(0, 127, 255, 1) = vec4(0, 0.5, 1, 1)

Last but not least…

Even if you load the correct color from the texture, processing it before returning it (for example, mixing it with another color using `mix`) again produces a color that differs from the expected one.

In this example, I’m mixing it with `vec4(0, 0, 0, 1)` using a `t` value of 0.5.

The starting color is correctly rgba(0, 127, 255, 1), so the expected result of the mix is rgba(0, 63, 127, 1).

`mix(x, y, a)` performs a linear interpolation, `x * (1 - a) + y * a`, so:

R: (0 * (1 - 0.5)) + (0 * 0.5) = 0

G: (0 * (1 - 0.5)) + (127 * 0.5) = 63.5 ≈ 63

B: (0 * (1 - 0.5)) + (255 * 0.5) = 127.5 ≈ 127
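The per-channel arithmetic above can be reproduced with a small Python stand-in for GLSL `mix`. This is only a sketch of the formula `x * (1 - a) + y * a` applied directly to the 8-bit values; the function and variable names are illustrative.

```python
def mix(x: float, y: float, a: float) -> float:
    """Python stand-in for GLSL mix(): linear interpolation x * (1 - a) + y * a."""
    return x * (1 - a) + y * a


black = (0, 0, 0)
start = (0, 127, 255)  # starting color as 8-bit values

# Interpolate each channel halfway between black and the starting color.
expected = [mix(b, s, 0.5) for b, s in zip(black, start)]
print(expected)  # [0.0, 63.5, 127.5]
```

Truncating the results gives the expected rgba(0, 63, 127, 1).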

But the actual rendered color is: rgba(0, 92, 188, 1).
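The same hypothesis can be tested against this second result. The Python sketch below assumes two things about the pipeline that the post does not confirm: that loading a texture with `SRGBColorSpace` decodes its texels to linear values, and that the shader output is sRGB-encoded again before display. Under those assumptions, `mix` operates on linear values, and the numbers come out as 92 and 188 rather than 63 and 127. All helper names are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding (IEC 61966-2-1) of a channel value in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c: float) -> float:
    """Standard sRGB encoding (IEC 61966-2-1) of a linear channel value in [0, 1]."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def mix(x: float, y: float, a: float) -> float:
    """Python stand-in for GLSL mix(): x * (1 - a) + y * a."""
    return x * (1 - a) + y * a


start_8bit = (0, 127, 255)
black = (0.0, 0.0, 0.0)

# 1. Assumed: the sRGB texel is decoded to linear when the texture is sampled.
linear = [srgb_to_linear(v / 255) for v in start_8bit]
# 2. mix() in the shader then runs on the linear values.
mixed = [mix(b, c, 0.5) for b, c in zip(black, linear)]
# 3. Assumed: the result is sRGB-encoded before display.
out = [round(linear_to_srgb(c) * 255) for c in mixed]
print(out)  # [0, 92, 188]
```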

I doubt this is a bug: it would be too serious for nobody to have noticed it before me.

So, what am I missing?

Why is this happening?

Thanks.