I want to share a (personal) discovery that allowed me to noticeably improve my convolution-based denoising filters.

Please don’t take the “sacred” thing seriously; it’s just for the story.

In essence, this is a sampling pattern that approximates an isotropic convolution by taking advantage of GPU bilinear interpolation, and that automatically balances out the sampling error introduced by the bilinear interpolation itself.

It gets rid of the checkered patterns that are inherent to square-kernel filters.

I haven’t found anything like this particular technique on the web, and at least for blur/denoising post-processing, this convolution offers a clear quality improvement.

Sacred Geometry and isotropic convolution filters

I was trying to improve the post-processing for some real-time volumetric clouds that are generated by raycasting in three.js (article).

Raycasting is necessarily done in discrete steps, which leads to noise.

There are different denoising techniques. TAA-like denoising is off the table because it’s too hard to compute motion vectors for clouds, so I was left with convolution-based techniques.

Convolution and filters

Everyone knows convolution: you pass a sliding window over a function and apply an operation on that window to compute each output value.

In 2D, when data is discretized into a grid like a bitmap, we use a square window with weights that compensate for the discretization bias, like the Gaussian kernel below.

Although the weights compensate for the discretization bias, this type of kernel carries a certain amount of approximation and aliasing at its core: the sampling is not isotropic.
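To make the anisotropy concrete, here is a minimal sketch assuming the common 3×3 binomial approximation of a Gaussian (1, 2, 4 over 16), which is not necessarily the exact kernel in the figure. The helper names are illustrative. Edge neighbours sit 1 pixel from the center while diagonal neighbours sit √2 pixels away, so the taps do not lie on a circle.

```python
import math

def binomial_kernel_3x3():
    """3x3 binomial approximation of a Gaussian: outer([1, 2, 1], [1, 2, 1]) / 16."""
    row = [1, 2, 1]
    return [[row[i] * row[j] / 16.0 for j in range(3)] for i in range(3)]

def sample_distances_3x3():
    """Distance of each tap of a 3x3 window from the center tap, in pixels."""
    return [[math.hypot(i - 1, j - 1) for j in range(3)] for i in range(3)]

kernel = binomial_kernel_3x3()
dist = sample_distances_3x3()
for krow, drow in zip(kernel, dist):
    # Each entry prints as "weight @ distance from center".
    print(["%.4f @ %.3f px" % (w, d) for w, d in zip(krow, drow)])
```

The corner taps print at 1.414 px and the edge taps at 1.000 px: the weights shrink the corners, but cannot move them onto the same circle.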

Neural nets rely heavily on these rectangular convolution operations. In image upscaling, for example, there are aliasing artefacts that are difficult to get rid of because the network has to learn to compensate for the error introduced by the discrete 2D convolution on top of its actual task.

This image comes from a paper that explains the artefacts caused by “de-convolution” in image upscaling and attempts to solve them. It’s not exactly the same problem, but it’s related.

Isotropic kernel and bilinear interpolation

GPU pipelines offer nearly free bilinear interpolation, so why not use it to sample at an equal distance from the center pixel and at evenly spaced angles? Well, it’s not that simple.

Bilinear interpolation itself is an approximation that linearly interpolates between 4 pixels, so it brings its own share of error whenever the sample isn’t exactly at the center of the 4 neighboring pixels. What’s worse, the error will not be the same for all samples, unless, perhaps, we find a perfect geometry that balances the samples out.
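A minimal sketch of what a bilinear fetch does, assuming pixel centers at integer coordinates (a real GPU sampler places them at half-texel offsets, which only shifts the origin). The hypothetical helper `bilinear_weights` returns the weights of the 4 surrounding pixels: only at the corner shared by the 4 pixels (fractional part 0.5 on both axes) are they all 1/4; anywhere else the fetch leans toward some pixels more than others.

```python
import math

def bilinear_weights(x, y):
    """Weights of the 4 pixels surrounding (x, y), keyed by (column, row).

    Assumes pixel centers at integer coordinates.
    """
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    return {
        (x0, y0): (1 - fx) * (1 - fy),
        (x0 + 1, y0): fx * (1 - fy),
        (x0, y0 + 1): (1 - fx) * fy,
        (x0 + 1, y0 + 1): fx * fy,
    }

def bilinear_sample(img, x, y):
    """Bilinear fetch from a 2D list img[row][col], clamped at the borders."""
    h, w = len(img), len(img[0])
    total = 0.0
    for (px, py), wgt in bilinear_weights(x, y).items():
        px = min(max(px, 0), w - 1)
        py = min(max(py, 0), h - 1)
        total += wgt * img[py][px]
    return total

print(bilinear_weights(0.5, 0.5))  # all four weights are 0.25
print(bilinear_weights(0.2, 0.5))  # column 0 gets 0.4 + 0.4, column 1 gets 0.1 + 0.1
```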

Sacred geometry

At that point I was trying out different geometries and attempting to balance out the error among samples with weights. I was afraid this was getting nowhere, and I felt like a schizophrenic drawing nonsense on bits of paper.

But then I found this subreddit, which at first glance might be dismissed as a bunch of kooks drawing mandalas. However, they manage to create “beautiful” things, and after all, isn’t that what we also strive for in graphics programming!?

Therefore, reinvigorated by the confidence that I am not alone in looking for beauty through geometry, I returned to the drawing board and found a sampling pattern that, if not perfect, greatly improved my filters.

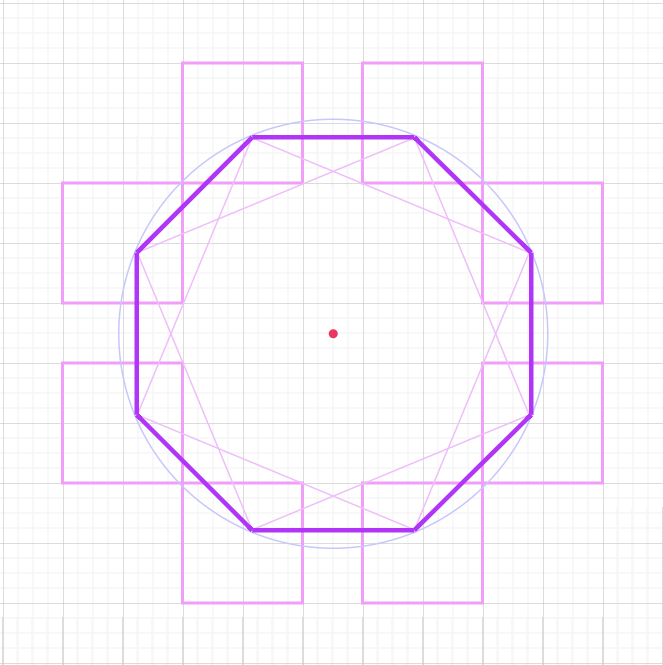

The Octagon

The idea is to sample in an octagon, at 22.5°, 67.5°, 112.5°, and so on, i.e. every 45° starting at 22.5°.
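The tap positions are easy to generate. Here is a minimal sketch (the helper name `octagon_offsets` is hypothetical) returning the 8 offsets for a radius given in pixels. Every tap sits at the same distance from the center, and the coordinates only ever take the values ±r·cos 22.5° and ±r·sin 22.5° (about 0.924r and 0.383r).

```python
import math

def octagon_offsets(radius):
    """Offsets (dx, dy) in pixels of the 8 taps at 22.5° + k·45°, k = 0..7."""
    offsets = []
    for k in range(8):
        angle = math.radians(22.5 + 45.0 * k)
        offsets.append((radius * math.cos(angle), radius * math.sin(angle)))
    return offsets

for dx, dy in octagon_offsets(1.0):
    print(f"({dx:+.4f}, {dy:+.4f})")
```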

This gives 8 samples plus the center pixel, the same number of taps as a 3×3 kernel, so it costs the same as a normal convolution once you set aside the bilinear interpolation handled by the GPU.

While this doesn’t guarantee that the samples land precisely at the center of 4 pixels, we can choose the kernel radius to place them close to an ideal position.
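One way to pick the radius, sketched under two assumptions: "ideal" means as close as possible to the corner shared by 4 pixels (where the bilinear weights are all 1/4), and pixel centers sit at integer offsets. The scan below keeps the candidate radius whose taps land nearest such a corner. The helpers and the brute-force search are illustrative, not the code used for the clouds.

```python
import math

def octagon_offsets(radius):
    """Offsets (dx, dy) in pixels of the 8 taps at 22.5° + k·45°."""
    return [(radius * math.cos(math.radians(22.5 + 45.0 * k)),
             radius * math.sin(math.radians(22.5 + 45.0 * k))) for k in range(8)]

def dist_to_half_integer(v):
    """Distance from v to the nearest k + 0.5, i.e. the line between two pixel centers."""
    return abs(v - (math.floor(v) + 0.5))

def corner_error(radius):
    """Largest distance (px) from any tap to its nearest 4-pixel corner."""
    return max(math.hypot(dist_to_half_integer(dx), dist_to_half_integer(dy))
               for dx, dy in octagon_offsets(radius))

def best_radius(r_min, r_max, steps=10_000):
    """Brute-force scan of [r_min, r_max] for the radius with the smallest corner error."""
    candidates = [r_min + (r_max - r_min) * i / steps for i in range(steps + 1)]
    return min(candidates, key=corner_error)

r = best_radius(1.0, 5.0)
print(f"radius {r:.3f} px, corner error {corner_error(r):.3f} px")
```

Over 1 to 5 px the scan settles near 3.81 px, where each tap is roughly 0.05 px from its nearest corner; by the octagon’s symmetry all 8 taps share that same error.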

Moreover, the error introduced by bilinear sampling is balanced among the samples, which removes the need to weight them individually.
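The balancing claim can be checked numerically. A minimal sketch, assuming pixel centers at integer coordinates: for each tap it lists the sorted bilinear weights of the 4 pixels it straddles. With the 22.5° offset, the 8 taps are mirror images of one another across the x, y and diagonal axes, so every tap gets the same set of weights at any radius. Starting the ring at 0° instead (a hypothetical comparison) breaks this, because axis taps and diagonal taps straddle pixels differently.

```python
import math

def ring_offsets(radius, start_deg=22.5):
    """8 taps every 45°, starting at start_deg (22.5° gives the octagon pattern)."""
    return [(radius * math.cos(math.radians(start_deg + 45.0 * k)),
             radius * math.sin(math.radians(start_deg + 45.0 * k))) for k in range(8)]

def bilinear_weight_set(dx, dy):
    """Sorted bilinear weights of the 4 pixels a tap at (dx, dy) straddles."""
    fx, fy = dx - math.floor(dx), dy - math.floor(dy)
    return sorted([(1 - fx) * (1 - fy), fx * (1 - fy), (1 - fx) * fy, fx * fy])

def is_balanced(radius, start_deg=22.5, tol=1e-9):
    """True if every tap sees the same bilinear weight distribution as the first one."""
    sets = [bilinear_weight_set(dx, dy) for dx, dy in ring_offsets(radius, start_deg)]
    return all(abs(a - b) < tol for s in sets for a, b in zip(s, sets[0]))

print(is_balanced(3.7), is_balanced(1.0, start_deg=0.0))
```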

Using it in a denoiser

To do an actual convolution with larger radii, I reused concepts from the “à trous” wavelet transform, adapted to approximate a convolution operation.

À trous (“with holes”) performs a convolution iteratively, with the same number of samples each iteration but a larger distance from the center point each time, so it approximates a convolution with a larger kernel.

In this isotropic approximation I do the same, but I pick the kernel radii so that the samples land close to a 4-pixel intersection.
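A minimal CPU sketch of the iterative scheme, assuming a single-channel image stored as a 2D list, clamp-to-edge addressing, pixel centers at integer coordinates, and hypothetical radii (the article does not list the ones it uses). It applies only the denoiser’s base weights, 1/4 for the center and 3/32 per tap; because these sum to 1, a flat image passes through unchanged. The luminance and depth weighting is left out. On the GPU, each `bilinear_sample` call would be a single hardware-filtered texture fetch.

```python
import math

BASE_CENTER = 1.0 / 4.0   # base weight of the center pixel
BASE_TAP = 3.0 / 32.0     # base weight of each of the 8 octagon taps

def bilinear_sample(img, x, y):
    """Bilinear fetch with clamp-to-edge; pixel centers at integer coordinates."""
    h, w = len(img), len(img[0])
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def fetch(ix, iy):
        return img[min(max(iy, 0), h - 1)][min(max(ix, 0), w - 1)]

    return ((1 - fx) * (1 - fy) * fetch(x0, y0) + fx * (1 - fy) * fetch(x0 + 1, y0)
            + (1 - fx) * fy * fetch(x0, y0 + 1) + fx * fy * fetch(x0 + 1, y0 + 1))

def octagon_pass(img, radius):
    """One pass: center pixel plus 8 bilinear taps at 22.5° + k·45° and distance `radius`."""
    offsets = [(radius * math.cos(math.radians(22.5 + 45.0 * k)),
                radius * math.sin(math.radians(22.5 + 45.0 * k))) for k in range(8)]
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = BASE_CENTER * img[y][x]
            for dx, dy in offsets:
                acc += BASE_TAP * bilinear_sample(img, x + dx, y + dy)
            out[y][x] = acc
    return out

def a_trous_octagon(img, radii):
    """Apply successive passes with growing radii, each reading the previous output."""
    for radius in radii:
        img = octagon_pass(img, radius)
    return img

# Hypothetical radii, chosen so the taps land near 4-pixel corners.
HYPOTHETICAL_RADII = (1.58, 3.81, 9.19)
```

A call such as `a_trous_octagon(noisy, HYPOTHETICAL_RADII)` runs three passes, each with 9 fetches per pixel, yet reaches taps more than 9 px away.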

To denoise an image of clouds, the samples are weighted based on luminance and depth differences. The base weights are 1/4 for the center pixel and 3/32 for each of the other 8 samples, so they sum to 1.
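The exact weighting function isn’t given, so here is a minimal sketch under assumptions: each sample’s base weight is multiplied by a Gaussian falloff on its luminance and depth difference from the center pixel, using hypothetical `sigma_lum` and `sigma_depth` parameters, and the result is renormalized. Samples across a brightness or depth edge then contribute little, which should help keep edges sharp while averaging out raymarching noise.

```python
import math

BASE_CENTER = 1.0 / 4.0   # base weight of the center pixel
BASE_TAP = 3.0 / 32.0     # base weight of each of the 8 octagon taps

def edge_aware_weights(center, taps, sigma_lum=0.1, sigma_depth=0.05):
    """Normalized weights [w_center, w_tap0, ..., w_tap7].

    `center` and each tap are (luminance, depth) pairs. The Gaussian falloff
    and both sigma values are hypothetical choices, not the article's.
    """
    lum_c, depth_c = center
    raw = [BASE_CENTER]
    for lum, depth in taps:
        dl = (lum - lum_c) / sigma_lum
        dd = (depth - depth_c) / sigma_depth
        raw.append(BASE_TAP * math.exp(-(dl * dl + dd * dd)))
    total = sum(raw)
    return [w / total for w in raw]

def denoise_pixel(center, taps, **kwargs):
    """Weighted average of the luminances of the center pixel and its 8 taps."""
    weights = edge_aware_weights(center, taps, **kwargs)
    lums = [center[0]] + [lum for lum, _ in taps]
    return sum(w * lum for w, lum in zip(weights, lums))
```

When all taps match the center, the weights reduce exactly to the base 1/4 and 3/32; a tap far behind in depth drops to essentially zero weight.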

Results

With extra contrast

The “sacred” sampling pattern removes the checkered aliasing artefacts that come with rectangular convolution kernels. It doesn’t seem to noticeably change the denoising capacity of the filter, nor the amount of blur it introduces.

Is it worth the extra cost of the bilinear interpolation done by the GPU pipeline?

In the denoising scenario, yes! These filters are relatively cheap, and we can reach the same “denoising” effect with a softer blur.

Other use-cases

I also use this when rendering Gaussian splats without sorting, using alpha-hash transparency instead. In that case, TAA is very useful but has to be strong, which produces ghosting. A pass of the sacred denoiser allows me to tone down the TAA and reduce ghosting.

I will also try to adapt the FXAA algorithm, although I’m not certain whether the use of bilinear interpolation will harm the edge-detection part.

Could this kind of convolution be used in convolutional neural networks? I don’t know enough about those GPU pipelines to say whether bilinear interpolation is fast in that case, nor how much it would harm the edge-detection capability of the kernels.

Does the error introduced by stacking square convolutions in multiple layers get diluted, or does it accumulate in subtle ways? It would be fun to implement such a layer in TensorFlow or something similar to see how it affects training.