Hello! How’s it going?

I’m working on an offscreen canvas model visualizer for the fine art prints that we make in our creative studio. It uses a PBR workflow and, so far, it looks really good. However, at certain angles, the aluminum material on the back of the object produces a lot of specular aliasing.

It was a lot worse before, because the front face of the aluminum object (just behind the artwork plane) was lit by the environment map, so I had to assign it a black material with roughness 1.0. Good workaround! Still, what’s left is the specular aliasing from the sides of the aluminum back.

Things that I’ve tried so far:

- FXAA. It only made everything a bit blurrier. The specular aliasing stayed the same.

- MSAA. It does improve things a bit, but not that much. Going above x4 did not improve specular aliasing at all, and it also became a resource hog. My implementation runs a small benchmark to determine whether MSAA should be enabled, and also whether the resolution should be lowered. MSAA ends up disabled on a lot of mobile devices, resulting in worse aliasing. Interestingly enough, geometry aliasing is not that bad compared to the specular aliasing, which is really distracting and prominent.

- SMAA. Compared to FXAA, it targets the edges a bit better, leaving the rest of the image looking sharper. Still, like FXAA, it didn’t help with the specular aliasing at all.

- Setting the HDRI map to work with PMREM. AFAIK, it should work out of the box without calling PMREM manually. It did nothing; it looked the same.

Things that will make it worse:

- Beveling and curving the edges of the glass and aluminum in Blender. While it does make the model more realistic at certain angles (really, it is an upgrade in realism) and I would love to have it, it caused soooo much specular aliasing, fireflies and sparkling at the silhouette that I can’t justify keeping it. I also made sure the normal map of the aluminum wasn’t applied to the bevels, but the problem still persisted. It even happened with the glass. The current demo on the website is the model without bevels because, honestly, it was that bad. Not even MSAA x8 could save it.

Here is where Geometric Specular Antialiasing comes in

I do believe this is a problem that should be mitigated in the shader pipeline. Valve explored this concept years ago when they began working on VR. I highly recommend watching their talk. They had to tackle aliasing as well as possible in both performance and visual terms, because aliasing plus a low framerate in VR degrades the experience so much.

They came up with some clever math which, to my understanding, increases the roughness of the pixels that are prone to very high specular values when viewed at specific camera and geometry angles. Correct me if I’m wrong about it.
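Written as a formula, this is how I read the Valve snippet that follows. The symbols are my own shorthand and not from the talk: N is the interpolated geometric normal (`vGeometricNormalWs`), the partial derivatives are the screen-space `ddx`/`ddy`, r is the roughness the material already has, and g is `flGeometricRoughnessFactor`.

```latex
g = \Big( \operatorname{saturate}\big( \max\big( \lVert \partial_x N \rVert^2,\; \lVert \partial_y N \rVert^2 \big) \big) \Big)^{0.333},
\qquad
r' = \max(r,\, g)
```

So the roughness only goes up where the normal changes quickly from one pixel to the next, and it is left untouched wherever g is smaller than the roughness that is already there.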

Here is their code:

    // Dense meshes without normal maps also alias, and roughness mips can’t help you!
    // We use partial derivatives of interpolated vertex normals to generate a geometric
    // roughness term that approximates curvature. Here is the hacky math:
    float3 vNormalWsDdx = ddx( vGeometricNormalWs.xyz );
    float3 vNormalWsDdy = ddy( vGeometricNormalWs.xyz );
    float flGeometricRoughnessFactor = pow( saturate( max( dot( vNormalWsDdx.xyz, vNormalWsDdx.xyz ), dot( vNormalWsDdy.xyz, vNormalWsDdy.xyz ) ) ), 0.333 );
    vRoughness.xy = max( vRoughness.xy, flGeometricRoughnessFactor.xx ); // Ensure we don’t double-count roughness if normal map encodes geometric roughness

    // MSAA center vs centroid interpolation: It’s not perfect
    // Normal interpolation can cause specular sparkling at silhouettes due to over-interpolated vertex normals
    // Here’s a trick we are using:
    float3 vNormalWs : TEXCOORD0;
    centroid float3 vCentroidNormalWs : TEXCOORD1;

    // In the pixel shader, choose the centroid normal if normal length squared is greater than 1.01
    if ( dot( i.vNormalWs.xyz, i.vNormalWs.xyz ) >= 1.01 ) {
        i.vNormalWs.xyz = i.vCentroidNormalWs.xyz;
    }

The talk slides where they mention this are here and the specific timing in the video presentation is here.

My (failed?) attempt to implement it

I’m just getting started in the 3D world, and I know pretty much nothing about shader code. All I could do was dump the compiled PBR shader code in three.js and try to inject Valve’s code where material.roughness is calculated. Although the shader loaded properly, it didn’t seem to antialias anything.

Valve’s code ported to three.js looked like this:

    // normal is in view space here; convert it to world space like Valve’s vGeometricNormalWs
    vec3 normalW = inverseTransformDirection( normal, viewMatrix );
    // Screen-space rate of change of the normal
    vec3 nDfdx = dFdx( normalW );
    vec3 nDfdy = dFdy( normalW );
    float slopeSquare = max( dot( nDfdx, nDfdx ), dot( nDfdy, nDfdy ) );
    float geometricRoughnessFactor = pow( saturate( slopeSquare ), 0.333 );
    material.roughness = max( roughnessFactor, 0.0525 );
    // Raise the roughness to at least the geometric term, capped at 1.0
    material.roughness = min( max( material.roughness, geometricRoughnessFactor ), 1.0 );
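For anyone who wants to try the same thing without hand-editing a dumped shader, here is a minimal sketch that patches the fragment shader string through `material.onBeforeCompile`. The helper name `addGeometricRoughness` and the `gsaa*` variables are made up for illustration. It assumes the fragment shader still contains the `#include <lights_physical_fragment>` line at that stage, that the struct field is called `material.roughness` as in the lines above, and that derivatives are available (WebGL2, or the derivatives extension on WebGL1). It differentiates `vNormal` instead of `normal`, on the assumption that `normal` may already include the normal map by that point. I have not checked it against a specific three.js release.

```javascript
// GLSL appended right after the physical-material setup chunk.
// Assumption: `material.roughness` and `vNormal` exist at this point
// (vNormal is not declared when the material uses flat shading).
const GSAA_CHUNK = `
{
  vec3 gsaaN = normalize( vNormal );
  vec3 gsaaDx = dFdx( gsaaN );
  vec3 gsaaDy = dFdy( gsaaN );
  float gsaaSlopeSq = max( dot( gsaaDx, gsaaDx ), dot( gsaaDy, gsaaDy ) );
  float gsaaRoughness = pow( clamp( gsaaSlopeSq, 0.0, 1.0 ), 0.333 );
  material.roughness = min( max( material.roughness, gsaaRoughness ), 1.0 );
}
`;

// Hypothetical helper: returns the shader source with the chunk inserted
// after `anchor`, or the unchanged source if the anchor is not found.
function addGeometricRoughness(fragmentShader, anchor = '#include <lights_physical_fragment>') {
  if (!fragmentShader.includes(anchor)) {
    return fragmentShader;
  }
  // A replacer function avoids any special handling of "$" in the chunk.
  return fragmentShader.replace(anchor, () => `${anchor}\n${GSAA_CHUNK}`);
}

// Usage sketch (assumes `material` is an existing MeshStandardMaterial):
// material.onBeforeCompile = (shader) => {
//   shader.fragmentShader = addGeometricRoughness(shader.fragmentShader);
// };
```

Since the length of a derivative does not change under rotation, the view-space `vNormal` should give the same factor as a world-space normal, so the `inverseTransformDirection` step is probably optional here.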

Note that I might be completely off in my assumptions about what vec3 normalW and the rest of the values are because, as mentioned, my knowledge of shaders is almost nonexistent. I also skipped the centroid part because I have no idea how and where to place that code.
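As for where the centroid part could go, here is a rough and untested sketch of one possible placement, again done by patching the shader strings. Everything in it is an assumption: the helper name `addCentroidNormal` and the varying `vCentroidNormal` are made up, it relies on the `#include <common>`, `#include <defaultnormal_vertex>` and `#include <normal_fragment_begin>` lines being present in the material's shaders, on `transformedNormal`, `vNormal` and `normal` being the variable names in use, and on WebGL2 so that the `centroid` qualifier is accepted. It also ignores double-sided and flat-shaded materials.

```javascript
// Hypothetical helper: takes the { vertexShader, fragmentShader } strings that
// three.js passes to material.onBeforeCompile and returns patched copies.
function addCentroidNormal({ vertexShader, fragmentShader }) {
  // Second copy of the normal, interpolated at the covered centroid.
  const declaration = 'centroid varying vec3 vCentroidNormal;';

  const patchedVertex = vertexShader
    .replace('#include <common>', (m) => `${m}\n${declaration}`)
    .replace(
      '#include <defaultnormal_vertex>',
      (m) => `${m}\nvCentroidNormal = normalize( transformedNormal );`
    );

  // Same test as Valve's snippet: fall back to the centroid normal when the
  // regular interpolated normal has been extrapolated past unit length.
  const fallback = [
    'if ( dot( vNormal, vNormal ) >= 1.01 ) {',
    '  normal = normalize( vCentroidNormal );',
    '}',
  ].join('\n');

  const patchedFragment = fragmentShader
    .replace('#include <common>', (m) => `${m}\n${declaration}`)
    .replace('#include <normal_fragment_begin>', (m) => `${m}\n${fallback}`);

  return { vertexShader: patchedVertex, fragmentShader: patchedFragment };
}

// Usage sketch (assumes `material` is an existing MeshStandardMaterial):
// material.onBeforeCompile = (shader) => {
//   Object.assign(shader, addCentroidNormal(shader));
// };
```

The fallback only matters when MSAA is on, because that is when a pixel center can fall outside the triangle and the interpolated normal gets extrapolated.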

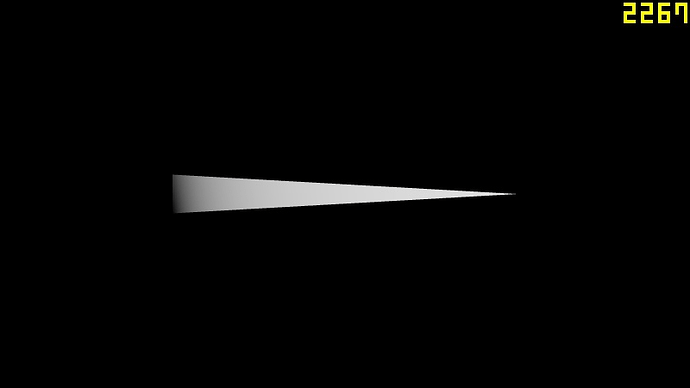

Here’s geometricRoughnessFactor dumped directly using gl_FragColor = vec4(geometricRoughnessFactor * 6.0, 0.0, 0.0, 1.0);

Keep in mind I’ve amplified the value x6 to make the lines at the edges easier to see in the screenshot.

Moving the camera reveals smooth edges with little to no aliasing. But for some reason this doesn’t carry over into the later specular calculations at all. It seems like the values are not large enough, although I’m not sure.
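One way to sanity-check whether the values are large enough is to run the same formula on the CPU with made-up derivative sizes. This is only a sketch: the step sizes and the base roughness of 0.3 are hypothetical numbers, not measurements from my scene.

```javascript
// CPU replica of the factor from the shader lines above.
const saturate = (x) => Math.min(Math.max(x, 0), 1);
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

// ddx / ddy: change of the normal between neighbouring pixels, as [x, y, z].
function geometricRoughnessFactor(ddx, ddy) {
  const slopeSquare = Math.max(dot(ddx, ddx), dot(ddy, ddy));
  return Math.pow(saturate(slopeSquare), 0.333);
}

// Same combination as in the shader: floor at 0.0525, then max with the factor.
function finalRoughness(baseRoughness, factor) {
  return Math.min(Math.max(Math.max(baseRoughness, 0.0525), factor), 1.0);
}

const baseRoughness = 0.3; // hypothetical material roughness
for (const step of [0.0, 0.001, 0.01, 0.1, 0.5]) {
  const factor = geometricRoughnessFactor([step, 0, 0], [0, 0, 0]);
  console.log(
    `step ${step}: factor ${factor.toFixed(3)}, roughness ${finalRoughness(baseRoughness, factor).toFixed(3)}`
  );
}
```

With these inputs, a normal that changes by 0.01 per pixel gives a factor of about 0.05, and a change of 0.1 per pixel gives about 0.22. Because of the `max`, any factor below the roughness the material already has changes nothing, and flat faces give a factor of zero. If the real values needed a x6 boost to show up in the screenshot, that might be why the specular result looks the same, but that is a guess.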

Thoughts? Can this be implemented in three.js?

I believe specular aliasing is one of those problems where people quickly point to TAA as the solution. I don’t think it is. If the problem lies in the shader pipeline, TAA would only be a rough patch on top of it and wouldn’t solve the problem completely. Furthermore, proper TAA with motion vectors (if I understand correctly) would complement a proper geometric specular antialiasing implementation, creating smooth, pleasing edges when objects and cameras are in motion.

I do think Valve’s approach is pretty clever when it comes to good performance plus lovely visuals. It is low-hanging fruit! Tackling this in the shader pipeline would be a good idea, or at least having it as a toggle.

And please, if someone knows how to properly modify the shader code to make this work, it would be incredible <3. Thank you!