I’m in … It’s not as advanced as I thought. I tried some GLSL prompts and none of them gave a conclusive result; maybe I asked too much. I’m still impressed, though: right now I’m using it to translate some Python code to TypeScript, and it’s a great personal assistant.

Actually, it’s sooo dumb that it’s anything but scary.

Developing a great application requires an enormously wide range of acquired traits and talent, like knowledge, wisdom, experience, maturity, problem solving, inspiration, vision, usability intuition, common sense, decision making, even philosophy of life and ethics, since you’re dealing with human beings.

So in comparison to that, this artifact is at around 0.0001%, which is scarily hilarious!

There is a difference between mimicking actions and possessing the knowledge, wisdom and experience needed to take such actions. The only scary thing is that people put their hopes and fears on such black-box automation artifacts.

Compare it even to today’s era of mediocrity. I don’t mean that everything is on average 50% of perfection(!); we would be extremely happy if that were the case. I mean mediocrity of effort: medium effort from everyone results in a chain of exponential quality loss, because the next creators have to build on top of previous mediocre results. That leads to gradually worse results while the required effort skyrockets to insane amounts and efficiency drops to zero.

Even in comparison to that, which I estimate at about 5% average quality versus reasonable perfection, it has no chance.

That said, I’m not against smart tools. We all want smart tools, but right now we don’t even have the obvious and fundamental ones. Just look at the incredibly dumb search engine results, the minimal development feedback and organization tools available, extremely poorly made languages like JavaScript that are nevertheless used by billions, the lack of science (read: effort) to solve the epic “all programs have bugs” issue, etc.

The most important task of a programmer isn’t to write code. Software is a necessary evil; the less software you need to accomplish a task, the better off you are.

Rather, the most important task of a programmer is to understand the problem to be solved in a logical and rigorous way. This includes not only accounting for the happy path (where everything works) but accommodating all of the sad paths as well. Without that understanding, code is just damage - the more code you have, the more you spend maintaining it.
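
As a small illustration of the happy path versus the sad paths, here is a hedged sketch in JavaScript. The function `parsePort` and its rules are hypothetical, chosen only to show that most of the code ends up handling inputs that are not the ideal case.

```javascript
// Hypothetical example: parse a TCP port number from user input.
function parsePort(input) {
  // Sad path: wrong type (null, undefined, numbers, objects...).
  if (typeof input !== "string") {
    return { ok: false, error: "port must be a string" };
  }
  const text = input.trim();
  // Sad path: empty input or anything that is not plain digits ("80a", "-1", "8.5").
  if (!/^\d+$/.test(text)) {
    return { ok: false, error: "port must contain only digits" };
  }
  const port = Number(text);
  // Sad path: outside the valid range.
  if (port < 1 || port > 65535) {
    return { ok: false, error: "port must be between 1 and 65535" };
  }
  // Happy path: a single line.
  return { ok: true, port };
}
```

Three of the four branches exist only for the sad paths, which is roughly the proportion the paragraph above is pointing at.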

We are not yet at the point where an AI can do this most important function. I don’t think we are near to a place where a customer can tell an AI what they want, because most customers don’t know what they want, not in sufficient detail. Someone has to explain the trade-offs around speed, reliability, scale, cost, and other negative externalities. Someone has to explain that in order to do X you can’t get Y at the same time.

Also, at some point you are going to need to debug the code. Even if the code is perfect, the world around it isn’t. Debugging means you need to understand how it works.

I was able to get ChatGPT to write some simple fragment shaders. I will look into it a little more. The AI request was: "Write javascript code in Threejs add a cube and create a shader gradient on the cube"

Basic Animated Gradient Fragment Shader Example Generated from ChatGPT

    // Set up the renderer
    var renderer = new THREE.WebGLRenderer();
    renderer.setSize(window.innerWidth, window.innerHeight);
    document.body.appendChild(renderer.domElement);

    // Create the scene
    var scene = new THREE.Scene();

    // Set up the camera
    var camera = new THREE.PerspectiveCamera(75, window.innerWidth / window.innerHeight, 0.1, 1000);

    // Create the gradient background shader material
    var backgroundMaterial = new THREE.ShaderMaterial({
      uniforms: {
        u_time: { value: 0.0 }
      },
      vertexShader: `
        void main() {
          gl_Position = projectionMatrix * modelViewMatrix * vec4( position, 1.0 );
        }
      `,
      fragmentShader: `
        uniform float u_time;
        void main() {
          gl_FragColor = vec4(
            sin( u_time + gl_FragCoord.x * 0.01 ) * 0.5 + 0.5,
            sin( u_time + gl_FragCoord.y * 0.01 ) * 0.5 + 0.5,
            sin( u_time ) * 0.5 + 0.5,
            1.0
          );
        }
      `
    });

    // Create a full screen quad to render the background
    var background = new THREE.Mesh(
      new THREE.PlaneGeometry(2, 2),
      backgroundMaterial
    );

    // Create blue cube
    const geometry = new THREE.BoxGeometry(3, 3, 3);
    // const material = new THREE.MeshLambertMaterial({ color: 0x0000ff });
    const cube = new THREE.Mesh(geometry, backgroundMaterial);
    scene.add(cube);

    // Create spotlight with shadows
    const spotLight = new THREE.SpotLight(0xffffff);
    spotLight.position.set(10, 10, 10);
    spotLight.castShadow = true;
    scene.add(spotLight);

    // Create ambient light
    const ambientLight = new THREE.AmbientLight(0x404040);
    scene.add(ambientLight);

    // Set up camera
    camera.position.z = 5;

    // Animate the gradient
    function animate() {
      requestAnimationFrame(animate);
      backgroundMaterial.uniforms.u_time.value += 0.05;

      // Rotate cube
      cube.rotation.x += 0.01;
      cube.rotation.y += 0.01;

      renderer.render(scene, camera);
    }

    animate();
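
To make the per-pixel math in the fragment shader above easier to inspect, here is a minimal sketch that evaluates the same formula on the CPU in plain JavaScript. The function name `gradientColor` is hypothetical and not part of three.js; `x` and `y` stand in for `gl_FragCoord.x` and `gl_FragCoord.y`, and `t` for `u_time`.

```javascript
// Hypothetical helper: mirrors the fragment shader's color formula on the CPU.
// x, y play the role of gl_FragCoord.xy (window pixels), t the role of u_time.
function gradientColor(x, y, t) {
  const wave = (phase) => Math.sin(phase) * 0.5 + 0.5; // maps [-1, 1] to [0, 1]
  return [
    wave(t + x * 0.01), // red varies horizontally
    wave(t + y * 0.01), // green varies vertically
    wave(t),            // blue depends on time only
    1.0,                // fully opaque
  ];
}

// At the origin with t = 0, sin(0) = 0, so every color channel is 0.5.
console.log(gradientColor(0, 0, 0));
```

Because `gl_FragCoord` is measured in window pixels, the gradient is tied to the position on screen rather than to the cube’s surface, so the colors do not rotate with the cube.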

I am going to add some of this to my website and YouTube channel: https://www.shanebrumback.com and https://www.youtube.com/@brumbacksd100

@dllb & @viridia I’m not sure I entirely get your point of view. I’m certainly not smart enough to code a full-on browser or a search engine, for instance. You have to imagine that this ChatGPT app is just what’s being made publicly available; its training resources have been rapidly limited and practically switched off. I’m 100% sure, as I think we could all agree, that huge companies are currently funding huge amounts of research into this tech, and there are likely models being run against each other in a GAN environment to overcome the issues and prerequisites you’ve mentioned. Although what’s being made publicly available in terms of AI is still very primitive, this chatbot is still very much a more advanced version of, say, Alexa or Siri. As @Fennec has suggested, it is a pretty useful personal assistant for accessing information you may not have had any idea about previously.

Last night I prompted it to create a smooth scroll function which, in all fairness, did a better job than lots of open-source smooth scroll libraries, let alone my limited capacity to grasp the concept…

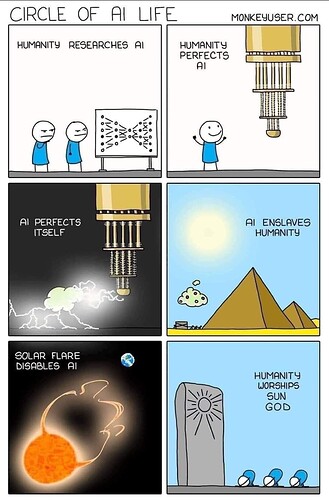

Exactly, you’ve been left with a semi-implemented toy, while the real powerful stuff is in the hands of the few.

It’s already apparent that AI is meant to be used 99% to control people and 1% to assist them, hence the limited public access. (And they want your phone number to let you try that toy…)

Eventually there will be bills that will give more privileges and rights to A.I agents than to humans.

-“A.I said so, so you must obey!”

-“You dare question A.I?”

-“Oops, A.I was wrong on that decision that affected millions of people, many people died, but hey, if it were humans it would be worse!”

And so on. Meanwhile you won’t have a clue about how it arrived at those decisions and who controls it.

Eventually, it will become the new forced religion, people will have to believe that A.I is always correct, and occasionally it acts in “mysterious ways”.

It’s the irony/insanity of investing in black-box, auto-pilot solutions with billions of auto-generated parameters, instead of advancing the science, education and organization needed to solve our problems and to process that knowledge too.

It’s the underestimation of the reality of problem-solving and of human capacity.

And most importantly, it’s the huge underestimation of the problems that this investment will create.

Aside from surrendering knowledge and control (and thus freedom), there will be A.I stupidity, A.I insanity, and A.I paranoia that will drive people mad, to name a few.

Those are artifacts of intelligence: a slightly robotic, unnatural insanity fed daily into our lives, deteriorating our natural, healthy perspective.

Honestly, that’s basically all there is to say, and it’s the ugly truth.

It’s not just that: it uses sphere geometries as points instead of actual points or instancing, with 32x32 segments, etc. Yes, it might work, and you might say beginners could benefit from it, but beginners will assume that what they get served is the “standard” or even best practice.
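
To put rough numbers on this, here is a back-of-the-envelope sketch in JavaScript. It assumes the vertex layout of a full three.js `SphereGeometry`, a grid of (widthSegments + 1) × (heightSegments + 1) vertices, and compares it with one vertex per marker for a `Points` object. The function names and the marker count of 10,000 are made up for illustration.

```javascript
// Vertices in one sphere, assuming a (w + 1) x (h + 1) grid of vertices
// (assumed to match what three.js SphereGeometry generates for a full sphere).
function sphereVertexCount(widthSegments, heightSegments) {
  return (widthSegments + 1) * (heightSegments + 1);
}

// Triangles in one sphere: two per grid cell, except one per cell
// in the two rows that touch the poles.
function sphereTriangleCount(widthSegments, heightSegments) {
  return 2 * widthSegments * (heightSegments - 1);
}

const markers = 10000; // hypothetical number of "points" in the scene

const asSpheres = markers * sphereVertexCount(32, 32);
const asPoints = markers; // a Points object needs one vertex per marker

console.log(asSpheres); // 10890000
console.log(asPoints);  // 10000
console.log(sphereTriangleCount(32, 32)); // 1984
```

Under these assumptions the sphere version carries about a thousand times more vertices than the points version. Instancing would keep the sphere shape but store the 1,089 vertices only once.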

Yeah, I suppose that’s the long-term cynical / pessimistic scenario playing out. Of course, at this stage these models could be trained on only the deadliest ways of affecting humanity, but again, we’re not forced to follow doctrine. There are always going to be, for lack of a better term, power-hungry bellends who seek to dominate and control, but I’d like to think the majority, as you say, won’t submit to procreation with these types of people. AI is not actually AI if it is programmed with bias in this way, and I hope that is something that will be ignored…

@Lawrence3DPK, @dllb, @viridia A lot of philosophical questions are raised here, which is proof of a true revolution, a turning point. I bet a new verb will be added to the dictionary, like we did with Google. Like I said before, whether we like it or not doesn’t matter: this is the future, this is where we are heading, and you know what they say: “If you can’t beat them, join them”, or in this case, just use them.

And the 99% versus the 1% argument can be applied to any major company/organization/nation/group in any field, from Coca-Cola to Nike to Google. It’s just the way it is.

@Fyrestar If you take a look at the second example, you’ll see it uses Geometry with the position vertices and a Points object. I know it’s an old version of three.js, but it’s still impressive.

@Shane_Brumback What have you done, you’ve just opened Pandora’s box here

I agree it’s awful code in practice, but again, someone with no knowledge could build a simple scene and soon see at runtime “oh, that’s just crashed my browser, I wonder why”, welcoming them to the wonderful world of optimal practice and usage… I refer to my previous statement that a fork of this, used in an environment like Discourse or the three.js docs where the AI is itself linked to learning material based around a very specific workflow, could be very useful. Imagine if you could go to an arbitrary page in the three.js documentation, let’s say buffer geometry, and type “how do I implement the animations of vertices / positions of a buffer geometry”. It could not only give basic to advanced usages based on prompts but also find and link to the most relevant related questions previously resolved here on Discourse… I guess it’s about finding the optimal way to enhance communities with this technology rather than feeling beaten by it…

It’s naive to think that the single piece we got as a service today is the thing people would be controlled with. All AI is fed with data from humans, be it conversations, videos, art, code, etc. That people get excited over it and use it (at a point where it’s still like an alpha) obviously helps to improve it.

And it’s already used in the way you claim is “just a pessimistic scenario”. Governments use these to control communication and to profile the mindset of people. It’s not just China; the West (at least Europe) also wants to make “chat control” a default thing, making privacy illegal and flagging anyone critical of the government as an enemy.

I really wonder why there is no larger, serious outrage about how certain AIs are based on theft: art sites let AI services harvest people’s art without permission, even unlicensed stock images, and GitHub harvested all repos, including private ones.

And what’s the real benefit then? As a beginner you don’t know whether what you got served is anywhere close to good practice; if it looks like it works, it works for them. And even assuming it improves to the point where it’s really good, you don’t learn any real skills yourself, and once it can do anything, nobody needs you anymore, because everyone can write a prompt.

It doesn’t mean it beats big, complex, highly optimized projects, but it certainly will put the non-nerds out of business: for instance, the parts that typical ad agencies usually do.

Thinking of it only as an aid and blanking out the negative aspects, which are massive, is just as naive as thinking automation creates more jobs in general. It’s just the beginning. It’s not that AI couldn’t be used for good, but it’s not going to be used to benefit everyone at all; it’s used for control, censorship and to make the few rich richer, as we are experiencing already.

There was also no benefit for humanity at all in creating art/media-generating AI. It’s destroying the future of people wanting to work in the creative area; in the comments on many videos and articles you already see how people have even given up on studying it. The “just assistance” claim isn’t true. It may be for its current stage, but people already scam clients with it, taking commissions and money that would have gone to real working artists who do their job out of passion and love, as something that fulfils their life.

Not everyone gets to be a Picasso, with everyone seeing each other as Picasso and complimenting each other’s non-self-made “work”; everything automated becomes inflationary and worthless. What people admire and pay you for is rare skill, creativity and talent.

@Fyrestar Well … We are doomed anyway, so I’m gonna use and abuse it till the day it replaces me.

So, apologies for getting all philosophical here and possibly getting off topic.

Some researchers who have thought about superhuman AI and ethics have posed the following thought experiment: imagine that you have a genie that will grant you three wishes, who can do anything - the only caveat is that the wishes apply not only to yourself, but everyone, from now until the end of time. Also, the genie only does what you tell it to, it can’t read your mind to know what you really wanted.

And let’s assume that you are a socially conscientious person and want to use this power to make the world a better place. Unfortunately, any “simple” wish could turn out horribly wrong. For example, if you wish to make everyone happy, the simplest solution is to modify everyone’s brains so that the only emotion they can experience is happiness. By the same token, you can eliminate unhappiness simply by killing everyone. This is probably not what you want.

In fact, no matter how smart you are, or how careful you are to phrase your wish, there is simply no chance that you will not screw this up badly. Any statement of desire that can fit within a few words simply cannot capture the true complexity of our moral and ethical values, the exceptions and judgment calls that we make every day.

So, when you ask a powerful AI to do something for you, it’s like making a wish to a genie. You have to be careful what you wish for, and the more powerful the AI, the worse the consequences of getting it wrong. (Fortunately our current AIs are not yet that powerful!).

Thus, there is a need for a type of human specialist that can communicate wishes to the AI genie, and do so in a way that minimizes the negative consequences. Such a person would be trained to think logically about unintended consequences and potential trade-offs, to minimize the potential harm of the wish. Even if the AI is built with some basic safeguards in mind (don’t kill all the humans), these safeguards will be “generic” in that they represent the values of the average person, and will not necessarily be in perfect alignment with your own values. For example, you and the AI safeguards might have different ideas about, say, sustainability or fair labor conditions.

As someone who has worked as a senior-level engineer for many years, I can say that this kind of “careful wishing” is a lot of what software developers do at the most senior levels: that is, thinking logically and carefully about the consequences of a customer’s needs. (And in fact, this is basically what a Technical Requirements Document is: a carefully worded wish.)

Even if, in the future, we call the kind of person who does this something other than “programmer”, I think there will be a need for people with that skill.

To those who are interested in this topic and its possible consequences: I highly recommend the transhumanist fan-fiction stories Friendship is Optimal, in which a superhuman AI designed to provide a My Little Pony MMORPG ends up taking over the earth. It’s very well written and explores some of these ethical issues in considerable depth.

I’d call such a person aier (program → programmer, AI → aier)

negotAItor

I asked ChatGPT to describe the webgl-animation-keyframes example code in the form of an article. The result was a long-winded description of the code. When I asked it to summarize, here’s what it said.

“This Three.js code example demonstrates how to animate a 3D model using keyframes. A 3D model in the GLTF format is loaded into the scene and an AnimationMixer is created for the model. The mixer plays the animation stored in the GLTF file, which includes keyframes that define the positions, rotations, and scales of the model over time. The AnimationMixer updates the animation every frame based on the time passed since the last frame, determined by the THREE.Clock object. The animation and scene are then rendered and displayed in the web browser.”

I’d say that’s a pretty accurate summary.
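
To illustrate what the summary describes, here is a minimal plain-JavaScript sketch of time-based keyframe playback. It is not three.js’s `AnimationMixer`; the class `TinyMixer` and the function `sampleTrack` are hypothetical, and the sketch handles only one scalar track with linear interpolation and looping. The `update(delta)` call plays the role the summary gives to advancing the mixer by the time reported by `THREE.Clock`.

```javascript
// Linearly interpolate a scalar track at time t.
// times must be sorted ascending; values[i] is the value at times[i].
function sampleTrack(times, values, t) {
  if (t <= times[0]) return values[0];
  const last = times.length - 1;
  if (t >= times[last]) return values[last];
  let i = 1;
  while (times[i] < t) i++; // find the first keyframe at or after t
  const span = times[i] - times[i - 1];
  const alpha = (t - times[i - 1]) / span; // 0 at previous key, 1 at next key
  return values[i - 1] + (values[i] - values[i - 1]) * alpha;
}

// Hypothetical stand-in for a mixer: accumulates time and loops the clip.
// Assumes the clip duration (the last keyframe time) is greater than zero.
class TinyMixer {
  constructor(times, values) {
    this.times = times;
    this.values = values;
    this.duration = times[times.length - 1];
    this.time = 0;
  }
  // delta: seconds elapsed since the previous frame
  update(delta) {
    this.time = (this.time + delta) % this.duration;
    return sampleTrack(this.times, this.values, this.time);
  }
}

// A position.x track: 0 at 0 s, 10 at 1 s, back to 0 at 2 s.
const mixer = new TinyMixer([0, 1, 2], [0, 10, 0]);
console.log(mixer.update(0.5)); // 5
console.log(mixer.update(1.0)); // 5 (time is now 1.5 s, halfway back down)
```

Driving the animation by elapsed time rather than by frame count is what keeps the playback speed the same on fast and slow machines.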

One idea to get ChatGPT to generate better three.js examples is to ask it to summarize the code for each three.js example.

Using that explanation, ask it to generate an example matching the summarized description. Usually the answer is not correct.

Then provide the correct answer from the original example code as feedback.
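
The loop described above could be sketched roughly as follows. The `ask` callback is a hypothetical stand-in for whatever chat client is used (no real ChatGPT API is assumed here), and it is synchronous only to keep the sketch short. The prompts and the whitespace-insensitive comparison are illustrative assumptions.

```javascript
// Runs one round of: summarize -> regenerate -> correct with the original.
// ask(prompt) is a hypothetical function that returns the model's reply as a string.
function feedbackRound(exampleName, originalCode, ask) {
  // 1. Ask for a summary of the original example.
  const summary = ask(`Summarize this three.js example:\n${originalCode}`);
  // 2. Ask for new code that matches only the summary.
  const attempt = ask(`Write a three.js example matching this description:\n${summary}`);
  // 3. Compare naively (ignoring whitespace) and feed back the original if it differs.
  const normalize = (code) => code.replace(/\s+/g, "");
  const matches = normalize(attempt) === normalize(originalCode);
  if (!matches) {
    ask(`The correct code for "${exampleName}" is:\n${originalCode}`);
  }
  return { exampleName, summary, attempt, matches };
}
```

An exact text match is a very strict test; in practice someone would still have to judge whether a differing attempt is actually wrong or just written differently.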

Any takers?

One day it could be a member here, providing answers to questions and solutions to problems.