Added support for HDR environment maps. Most of the effort went into converting them into the format that Shade works with.

Also worked a bit more on the global illumination, added infinite light bounce, so light gets pretty much everywhere now. Still a few techniques I’d like to add such as resampling of rays, but it’s already in a good state and is pretty stable.

Here are a few shots (off/on):

If anyone is curious about the environment mapping: I was frustrated with cube maps, as I make extensive use of directional maps. So I’m encoding environment maps with an octahedral projection like so:

The starting point is a standard equirectangular map

There is a cost to conversion in terms of quality, as we lose some resolution in certain areas, but I found this not to be an issue.
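For anyone wanting to try the conversion, the source lookup can be sketched roughly like this: given a direction, find where it lands in the equirectangular image. The axis convention (y up, `u` wrapping around the y axis) and the name `equirect_uv` are assumptions for illustration, not necessarily what Shade does.

```python
import math

def equirect_uv(direction):
    """Map a unit direction (x, y, z) to equirectangular UV in [0, 1]^2.

    Assumed convention: y is up, v = 0 at the top pole, u wraps around y.
    """
    x, y, z = direction
    # Longitude: angle around the up axis, remapped from [-pi, pi] to [0, 1].
    u = math.atan2(z, x) / (2.0 * math.pi) + 0.5
    # Latitude: angle from the up axis, remapped from [0, pi] to [0, 1].
    # The clamp guards acos against tiny floating point overshoot.
    v = math.acos(max(-1.0, min(1.0, y))) / math.pi
    return (u, v)
```

The resolution loss comes from resampling: each octahedral texel picks up (or filters) whatever equirectangular texels its direction happens to hit.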

The benefits are:

- lower angular error around edges, that is - texture space is used more efficiently

- You have a 2d texture instead of a cube map, which is way easier to work with, especially when you need to write to it, such as during convolution.

- octahedral mapping is dirt cheap in the shader
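To illustrate the "dirt cheap" point, here is a minimal sketch of the usual octahedral encode/decode pair, written in Python rather than shader code. Using z as the pole axis and the function names are assumptions; the actual shader in Shade may differ.

```python
import math

def _sign(v):
    # Sign that never returns 0, so the fold stays well-defined on the axes.
    return 1.0 if v >= 0.0 else -1.0

def oct_encode(direction):
    """Map a unit direction (x, y, z) to UV coordinates in [0, 1]^2."""
    x, y, z = direction
    s = abs(x) + abs(y) + abs(z)  # project onto the octahedron |x|+|y|+|z| = 1
    x, y, z = x / s, y / s, z / s
    if z < 0.0:
        # Fold the lower hemisphere outwards over the diagonals of the square.
        x, y = (1.0 - abs(y)) * _sign(x), (1.0 - abs(x)) * _sign(y)
    return (x * 0.5 + 0.5, y * 0.5 + 0.5)

def oct_decode(uv):
    """Inverse of oct_encode: UV in [0, 1]^2 back to a unit direction."""
    u, v = uv[0] * 2.0 - 1.0, uv[1] * 2.0 - 1.0
    z = 1.0 - abs(u) - abs(v)
    if z < 0.0:
        # Undo the fold for points that belong to the lower hemisphere.
        u, v = (1.0 - abs(v)) * _sign(u), (1.0 - abs(u)) * _sign(v)
    length = math.sqrt(u * u + v * v + z * z)
    return (u / length, v / length, z / length)
```

Both directions are a handful of adds, multiplies and one normalisation, with no trigonometry and no face selection like a cube map needs.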

The GI solution is slightly changed from before. I was struggling to get good results with progressive upgrades when I was using spherical harmonics, for various reasons. So I gave up and jumped on the bandwagon of just keeping an atlas of octahedral maps for each probe.

I rewrote the rendering part for them to generate a G-buffer using ray-tracing, and then reuse a lot of the same code for shading. During shading of the probe texels I sample probes again, which gives me that sweet infinite light bounce.
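Why re-sampling the probes gives an unbounded number of bounces can be shown with a toy model. This is only an illustration, assuming a single scalar radiance value, a constant albedo and one probe update per frame; none of these names or numbers come from Shade.

```python
def bounce_feedback(emitted, albedo, frames):
    """Iterate L <- emitted + albedo * L, starting from a black probe."""
    radiance = 0.0
    for _ in range(frames):
        # Shading a probe texel reads the previous probe result,
        # so every update adds one more bounce on top of what is stored.
        radiance = emitted + albedo * radiance
    return radiance

def infinite_bounce_limit(emitted, albedo):
    # Closed form of the series emitted * (1 + albedo + albedo^2 + ...).
    return emitted / (1.0 - albedo)
```

After one update the probe holds direct light only, after two it holds one extra bounce, and so on; because each bounce is scaled by an albedo below 1, the feedback settles to the infinite-bounce value instead of blowing up.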

To get stability I do a simplified version of TAA.

Everything is done on the GPU, including probe placement generation. The limit for number of probes does exist, due to memory limitations, but it’s stupidly high, at 65,536 for low-end hardware. If you need more, you also have an option of dropping the probe resolution, which will reduce GI precision a bit, but give you more probes to work with.
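The trade-off between probe count and probe resolution is simple arithmetic. Here is a sketch, assuming a square atlas with probes packed in rows and no padding between them; the 2048 atlas side is a hypothetical value picked because it yields 65,536 probes at 8x8, and the real layout in Shade is not stated here.

```python
def probe_capacity(atlas_side, probe_res):
    """How many probe_res x probe_res probes fit in a square atlas."""
    per_row = atlas_side // probe_res
    return per_row * per_row

def probe_origin(index, probe_res, atlas_side):
    """Top-left texel (x, y) of a probe, assuming row-major packing."""
    per_row = atlas_side // probe_res
    return ((index % per_row) * probe_res, (index // per_row) * probe_res)

# Hypothetical 2048 x 2048 atlas:
# probe_capacity(2048, 8) -> 65536
# probe_capacity(2048, 4) -> 262144
```

Halving the probe resolution quadruples the number of probes that fit in the same memory.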

Updates are incremental. I don’t have any fancy logic for now, just updating a fixed number of probes each frame, but it’s configurable, again, so you can adjust it to what the user’s hardware can handle comfortably.
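A fixed per-frame budget like this can be sketched as a plain round-robin over probe indices. The function names and the 512-per-frame figure in the comment are assumptions for illustration only.

```python
def probes_for_frame(frame, probe_count, per_frame):
    """Indices of the probes to update on a given frame (round-robin)."""
    start = (frame * per_frame) % probe_count
    # Wrap around the end of the probe list so every probe gets its turn.
    return [(start + i) % probe_count for i in range(min(per_frame, probe_count))]

def frames_per_cycle(probe_count, per_frame):
    """Frames needed before every probe has been updated once."""
    return -(-probe_count // per_frame)  # ceiling division

# With 8553 probes and a hypothetical budget of 512 per frame:
# frames_per_cycle(8553, 512) -> 17
```

Raising the budget shortens the cycle at the cost of more work per frame, which is the knob you would tune to the user's hardware.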

GI is very responsive. I didn’t do super precise measurements, but updates to lighting propagate fully in ~30 frames. This is mostly thanks to TAA.
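The temporal accumulation can be thought of as an exponential moving average per probe texel. A minimal sketch, assuming a constant blend factor `alpha`; the actual weights and any history rejection used in Shade are not described here, and the numbers below are for illustration only.

```python
import math

def temporal_blend(history, sample, alpha):
    """Move the stored texel a fraction alpha towards the new sample."""
    return tuple(h + alpha * (s - h) for h, s in zip(history, sample))

def frames_to_converge(alpha, residual):
    """Updates needed until the old value's weight drops below residual."""
    # After n updates the old value keeps a weight of (1 - alpha) ** n.
    return math.ceil(math.log(residual) / math.log(1.0 - alpha))

# Example: with alpha = 0.1, the old lighting falls under 5% weight
# after frames_to_converge(0.1, 0.05) -> 29 updates.
```

A smaller `alpha` gives a more stable image but slower response to lighting changes, and the reverse for a larger one.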

Right, also the probe placement is automatic and can be updated at runtime if the scene changes. You need to tell the engine your desired resolution for the scene, and it will place probes roughly on a grid of such resolution, removing probes that are nowhere near anything and moving probes around a bit to make sure they don’t get stuck in walls or tight corners.
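The placement steps can be sketched on the CPU like this. It is a rough illustration, not the GPU implementation: `distance` is a hypothetical callback standing in for a spatial index query that returns the distance to the nearest surface, and nudging along the distance gradient is an assumed strategy.

```python
import math

def nudge(p, distance, clearance, eps=1e-3):
    """Push a probe away from the nearest surface until it has clearance."""
    grad = []
    for axis in range(3):
        hi = list(p); hi[axis] += eps
        lo = list(p); lo[axis] -= eps
        # Central difference of the distance field along this axis.
        grad.append((distance(hi) - distance(lo)) / (2.0 * eps))
    length = math.sqrt(sum(g * g for g in grad))
    if length == 0.0:
        return tuple(p)  # no usable direction, leave the probe where it is
    step = clearance - distance(p)
    return tuple(c + g / length * step for c, g in zip(p, grad))

def place_probes(bounds_min, bounds_max, spacing, distance, keep_within, clearance):
    """Grid candidates, cull the ones far from geometry, nudge the tight ones."""
    counts = [int(math.floor((hi - lo) / spacing)) + 1
              for lo, hi in zip(bounds_min, bounds_max)]
    probes = []
    for ix in range(counts[0]):
        for iy in range(counts[1]):
            for iz in range(counts[2]):
                p = (bounds_min[0] + ix * spacing,
                     bounds_min[1] + iy * spacing,
                     bounds_min[2] + iz * spacing)
                d = distance(p)
                if d > keep_within:
                    continue  # nowhere near anything, drop it
                if d < clearance:
                    p = nudge(p, distance, clearance)  # too close to a surface
                probes.append(p)
    return probes
```

For example, with a floor at y = 0 (`distance = lambda p: p[1]`), grid probes sitting exactly on the floor get lifted by `clearance`, and probes higher than `keep_within` above it are discarded.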

Here’s a crappy visualisation of probe placements

You can see these ones having been nudged into better positions

This is not particularly complex stuff, but it does require you to have a spatial index on the GPU, which I happen to have already so - easy win.

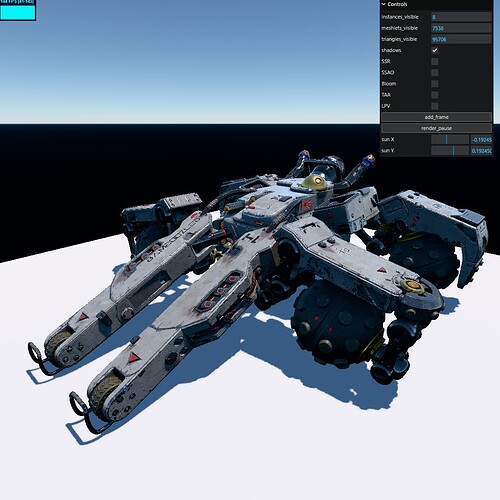

To show that probe placement is not limited to box-ish scenes, here’s the Battle of Trash God:

Here’s what the probe atlas looks like:

The resolution for each probe is blown up here to 128x128, though. In reality we use 8x8 pixel probes. Sounds mad - I know, but it’s 64 individual samples, and we accumulate them over time, so it’s plenty.

Here’s 64x64 atlas

32x32

and finally 8x8, which is what we actually use

there are 8553 probes packed here.

Here’s what that looks like:

If we drop probe resolution to 4x4:

at 2x2 (yes, this is only 4 pixels per probe):

And here’s without GI

Basically, as resolution drops too low, probes become “blurry”: they accumulate light from too wide an angle and start to approximate point light sources.
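To put rough numbers on "too wide an angle": if the full sphere (4π steradians) is split evenly between the texels of a probe, each texel covers a cone whose half-angle can be computed as below. This is an average only, since octahedral texels do not all cover exactly the same solid angle, and the function name is made up for illustration.

```python
import math

def mean_texel_cone_half_angle_deg(probe_res):
    """Half-angle of a cone with the average solid angle of one probe texel."""
    solid_angle = 4.0 * math.pi / (probe_res * probe_res)
    # A cone with half-angle t covers 2*pi*(1 - cos t) steradians.
    return math.degrees(math.acos(1.0 - solid_angle / (2.0 * math.pi)))
```

That works out to roughly 14° per texel at 8x8, about 29° at 4x4 and 60° at 2x2, so at 2x2 each texel averages light over a quarter of the sphere.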