Hello people,

Quite a speculative, almost conspiratorial subject for the time being, but…

as I’ve seen a few specific companies attracting huge amounts of capital for the development of holographic technologies, I think it’s likely that at some point in the future efforts will be made to bridge the browser to what I can only conceptually call a “holographicRenderer”.

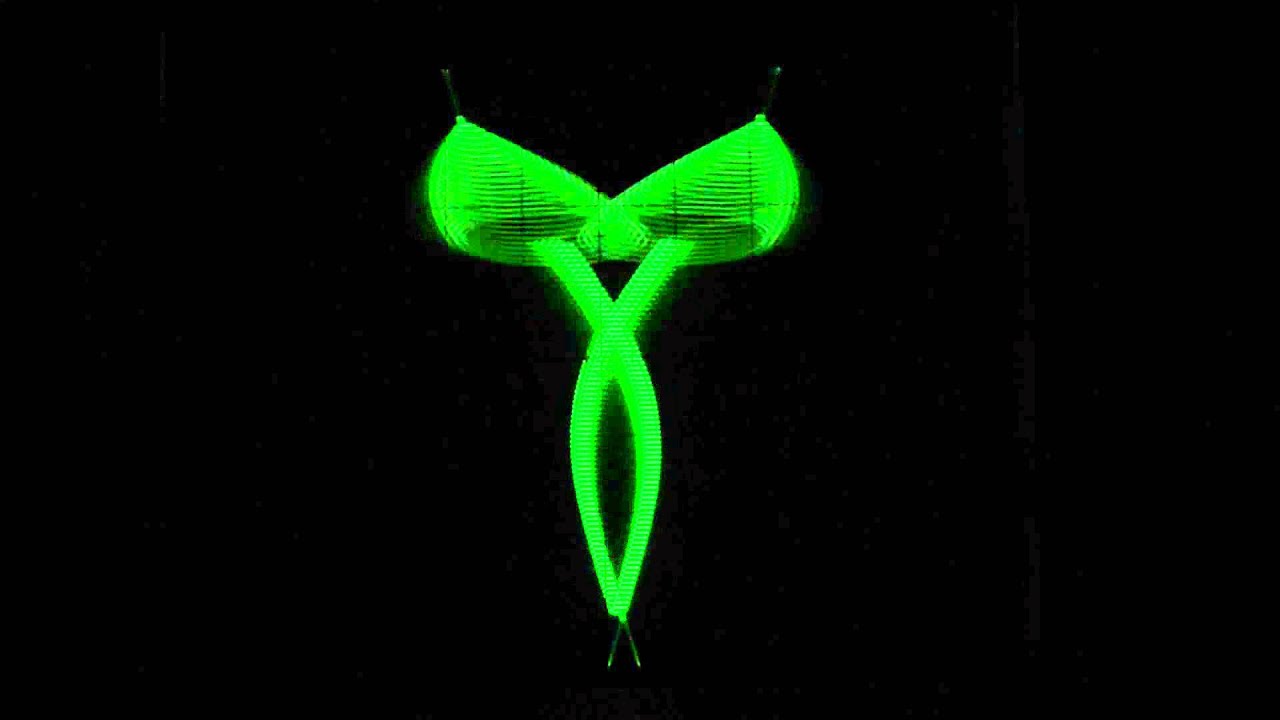

Coming from a background in audio and 3D, and having seen what can be achieved with a pure audio stream on an oscilloscope, with examples such as…

There are also examples of using sound for particle suspension and for separating liquids of different densities, such as…

As well as examples of MIDI audio being (very primitively) projected through Tesla coils as square-wave electrical arcs.

The next statement may seem way out there, but would it be wrong to think that any such “holographicRenderer” would need to calculate pixel projection for an environment that is not screen based, but rather a canvas derived from the subharmonic relationships between light (to display the visual information) and sound (to “suspend”, “distribute” or “project” that visual information onto the given “3D” canvas)?

I know this may be way off topic and not relevant to anything right now, and the mods can feel free to remove the topic, but I find thoughts about future concepts for evolving technologies such as threejs very interesting, and I feel they can evoke a real sense of inspiration.