That’s awesome

Probably not Portals (forked) - CodeSandbox, or at least I’m not good enough to sculpt a twirling crease. Maybe with a bit of twirl around the edges it could be OK.

This immediately makes me think of a depth texture. I use one to reconstruct the 3D world when ray tracing my atmosphere or my clouds in the shader, so that every ray knows exactly whether it hits an object. Or am I misunderstanding something?

The rays hit the sphere geometry, so the clouds behind it are not ray traced. Every ray knows exactly whether it hits the sphere or not.

The clouds cover the sphere, since they are in front of it.

You can download my repo here:

I can still improve performance a lot, and I know exactly what I have to change in the ray tracing to do it. My weakness is that once I consider something basically solved, I move on to the next unsolved challenge, because I still have a lot to do in my entire project.

The noise in the clouds is because I do the ray tracing very roughly. Too few numerical integration steps!

Improvement: first march a coarse tracer ray. If the cloud density is detected as greater than zero, take one tracer step back and then advance again with finer ray steps.
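For anyone who wants to play with the coarse/fine idea outside a shader, here is a minimal sketch in TypeScript. It is not the code from my repo. `cloudDensity`, `pointOnRay` and `marchCoarseToFine` are made-up names, and the density field is just a soft ball so the result can be checked by hand. The ray crosses empty space in coarse steps, and any coarse interval that touches the cloud is marched again from its start with fine steps.

```typescript
type Vec3 = [number, number, number];

// Hypothetical stand-in for a cloud density lookup: a soft ball of radius 1 at the origin.
function cloudDensity(p: Vec3): number {
  return Math.max(0, 1 - Math.hypot(p[0], p[1], p[2]));
}

function pointOnRay(origin: Vec3, dir: Vec3, t: number): Vec3 {
  return [origin[0] + dir[0] * t, origin[1] + dir[1] * t, origin[2] + dir[2] * t];
}

// Integrates density along the ray over [0, tMax].
// Empty space is crossed with coarseStep. Any coarse interval whose start or end
// has density > 0 is marched again from its start with fineStep (the "step back").
function marchCoarseToFine(
  origin: Vec3,
  dir: Vec3,
  tMax: number,
  coarseStep: number,
  fineStep: number,
  density: (p: Vec3) => number,
): number {
  let opticalDepth = 0;
  let t = 0;
  let densityAtStart = density(pointOnRay(origin, dir, t));
  while (t < tMax) {
    const tNext = Math.min(t + coarseStep, tMax);
    const densityAtEnd = density(pointOnRay(origin, dir, tNext));
    if (densityAtStart > 0 || densityAtEnd > 0) {
      // Fine pass over [t, tNext] using the midpoint rule.
      for (let s = t; s < tNext; s += fineStep) {
        const h = Math.min(fineStep, tNext - s);
        opticalDepth += density(pointOnRay(origin, dir, s + 0.5 * h)) * h;
      }
    }
    densityAtStart = densityAtEnd;
    t = tNext;
  }
  return opticalDepth;
}

// Ray along +x through the middle of the ball.
const result = marchCoarseToFine([-3, 0, 0], [1, 0, 0], 6, 0.5, 0.01, cloudDensity);
console.log(result); // close to 1, the exact integral for this test ray
```

One caveat: cloud features thinner than the coarse step can be skipped entirely, so the coarse step has to stay below the smallest detail you care about.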

I’ve tried to keep this cloud demonstrator repo as simple as possible, but making realistic-looking clouds takes some formula magic. You can add any objects to the scene. 3js renders a depth texture in each frame and passes it to the cloud shader. The 3D world is then reconstructed in the shader, and the clouds are generated from that.
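To make the depth idea a bit more concrete, here is a small sketch of the math, written in TypeScript instead of GLSL. It assumes a standard perspective projection with depth values in [0, 1] (no logarithmic or reversed depth buffer). As far as I know, the first function matches the usual perspective depth to view-z conversion. `rayLengthToSurface` and `marchLimit` are hypothetical helper names for illustration, not something from the repo.

```typescript
// Converts a depth-buffer value in [0, 1] to view-space z.
// Assumes a standard perspective projection (no logarithmic or reversed depth).
// View space looks down -z, so the result is negative in front of the camera.
function perspectiveDepthToViewZ(depth: number, near: number, far: number): number {
  return (near * far) / ((far - near) * depth - far);
}

// Length along a view ray at which scene geometry is reached.
// rayDirViewZ is the z component of the normalized ray direction in view space
// (negative for rays pointing away from the camera).
function rayLengthToSurface(
  depth: number,
  near: number,
  far: number,
  rayDirViewZ: number,
): number {
  return perspectiveDepthToViewZ(depth, near, far) / rayDirViewZ;
}

// Hypothetical per-ray limit: stop marching at the surface or at the
// far end of the cloud volume, whichever comes first.
function marchLimit(
  depth: number,
  near: number,
  far: number,
  rayDirViewZ: number,
  volumeExit: number,
): number {
  return Math.min(rayLengthToSurface(depth, near, far, rayDirViewZ), volumeExit);
}
```

With that limit per pixel, a ray simply stops integrating cloud density once it reaches whatever object the depth texture recorded.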

@abernier: Maybe my clouds repo will help you. Anyway, if you need to know exactly when a ray hits an object in the shader to stop or start ray tracing depending on what you want to do, then that’s the solution.

A depth map can only be reliably used for rays coming from one side, can it not? With relativistic effects we often see the sides of objects that are not normally visible, like the back side. So again, maybe if you have some sort of vertex shader that unwraps your objects in a way that you see all (or most) of their sides and make a depth map of that… which is another can of worms, I guess

I am saying all of this assuming we are talking about rendering 3D objects that are nearby or crossing the wormhole - it’s irrelevant for distant things

Yes, you’re right. This is only a solution for the side visible in non-curved spaces, or for ray tracing at close range when something interrupts the ray. If you want to bring light from a geometry object to the other side, things get complicated. I had also read about this hurdle in the paper while skimming. I’ll read it more carefully because now I’m curious

From paper: “After specifying an observer location and a looking direction through the camera, the beam generator of the respective space-time takes on the task of calculating the associated light ray by integrating the spatial geodesic equation and creates a polygon from this.”

Explanation: So he doesn’t do this in a shader. Instead he creates a polygon curve for each light beam that follows the path of the beam, which he calculates using the relativistic equation.

From paper: This (the ray polygon) consists of an array of four-dimensional points.

Since the light rays are now described as polygons, all scene objects can be extended with an intersection calculation, which tests each segment of the polyline for intersection with the object.

It is precisely these complex intersection calculations that make it necessary to parallelize the image calculation.

Explanation: So the whole thing is done in the geometry world and not in shaders. That would then correspond to a section-by-section (polygon section of the light) 3js object raytracing.
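To illustrate what such a per-segment intersection test could look like, here is a minimal sketch for a sphere. This is my own illustration, not code from the dissertation. It only uses the three spatial components of each point (the paper stores four-dimensional points), and `segmentSphereHit`, `firstHitOnPolyline` and `PolylineHit` are made-up names.

```typescript
type Point3 = [number, number, number];

interface PolylineHit {
  segment: number; // index of the segment that was hit (0 = first segment)
  point: Point3;   // first intersection point on that segment
}

function dot(a: Point3, b: Point3): number {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Returns the smallest u in [0, 1] at which the segment a + u * (b - a)
// crosses the sphere surface, or null if it does not cross it.
function segmentSphereHit(a: Point3, b: Point3, center: Point3, radius: number): number | null {
  const d: Point3 = [b[0] - a[0], b[1] - a[1], b[2] - a[2]];
  const f: Point3 = [a[0] - center[0], a[1] - center[1], a[2] - center[2]];
  const qa = dot(d, d);
  const qb = 2 * dot(f, d);
  const qc = dot(f, f) - radius * radius;
  if (qa === 0) return null; // degenerate segment, nothing to cross
  const disc = qb * qb - 4 * qa * qc;
  if (disc < 0) return null; // the infinite line misses the sphere
  const root = Math.sqrt(disc);
  const u1 = (-qb - root) / (2 * qa);
  if (u1 >= 0 && u1 <= 1) return u1; // entering the sphere
  const u2 = (-qb + root) / (2 * qa);
  if (u2 >= 0 && u2 <= 1) return u2; // segment started inside and exits
  return null;
}

// Walks the ray polyline segment by segment and returns the first hit.
function firstHitOnPolyline(points: Point3[], center: Point3, radius: number): PolylineHit | null {
  let prev: Point3 | undefined;
  let segment = 0;
  for (const p of points) {
    if (prev !== undefined) {
      const u = segmentSphereHit(prev, p, center, radius);
      if (u !== null) {
        return {
          segment,
          point: [
            prev[0] + u * (p[0] - prev[0]),
            prev[1] + u * (p[1] - prev[1]),
            prev[2] + u * (p[2] - prev[2]),
          ],
        };
      }
      segment += 1;
    }
    prev = p;
  }
  return null;
}
```

Every pixel needs one such polyline and every object one such loop over its segments, which gives a feeling for why the cost explodes on the CPU.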

Even though the dissertation is a few years old and computing power has increased since then, I don’t think this can be implemented that way, as it would still require far too much CPU effort today. We don’t work with scientific mainframes with hundreds of CPU cores for multithreading.

Even if that is not a practicable solution, I hope that with the explanation of the paper I have at least brought some clarity to the matter. At least we now know how it was done. It’s important to me to understand things

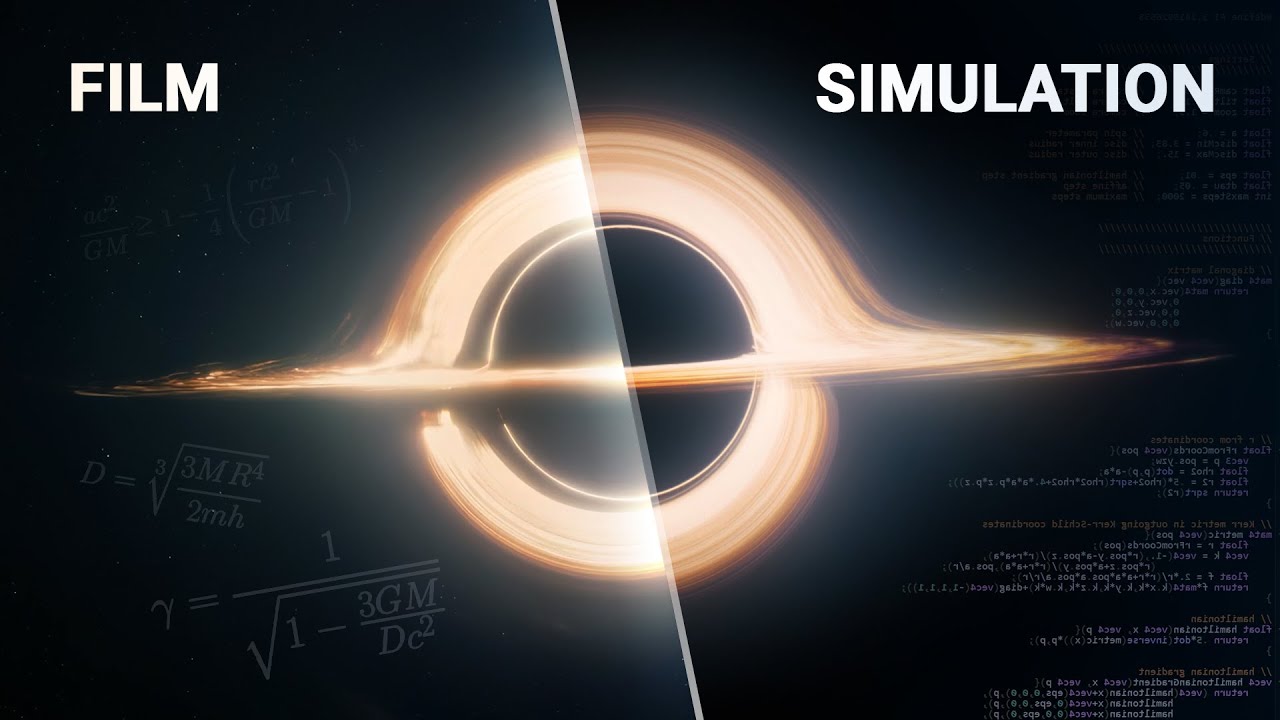

sounds pretty much the same as the Interstellar wormhole method:

I mean, we can do all of that and pretty much anything else in CPU land, as long as we have a couple of minutes per frame budget to create the video, but for realtime shit one has to massively cut the corners.

This made me think of the WebGPU - Compute example. It uses the GPU to calculate geometry (the positions of 300000 moving particles). GPUs have so many cores these days.

How far one could get with implementing something like a wormhole simulation that way, I don’t know. I know way too little about WebGPU for that.

It sounds like a very interesting option though. I hope to learn more about the WebGPURenderer as soon as possible because I need it for the ocean project.

But we can easily make performance estimates with the WebGPU - Compute example by increasing the number of particles until it starts to stutter. Or use a GPU meter to measure how much the GPU is being used. The GPU of my tablet is already pretty much maxed out by the example. My Radeon 7900XTX hardly registers it.

Being able to shift computationally intensive tasks to the GPU was a very good idea by 3js

Hi abernier,

it’s been a while. This reminds me of my old homepage

I’m a physicist and curved space-time was one of the main reasons for my studies. I had already toyed with the idea of starting it, but I just don’t have the time. I’m working on three different projects besides my job.

I see in the screenshot that this is done in the shader. That’s what we had at the time. The problem was that curved light rays had to be calculated back to the source. That would really appeal to me with WebGPU.

Alessandro Roussel, the author of the last video, has shared the Shadertoy implementation of his wormhole simulation: Shader - Shadertoy BETA

This is a nice Shadertoy example. However, only light that is emitted from the front of the objects is bent. For objects that would be closer, like the rods that go through the wormhole in the video above, this doesn’t work.

But makc3d and I may have been overthinking the details.

The Shadertoy example uses two cube textures for this. You want to use this in a 3D world.

You can render cube textures with a cube render target, which would be quite easy. This means you have two different locations in the scene and you set an entry position of the wormhole at both locations. There must then be a cube camera at each of these wormhole entry positions, which renders the environment into a cube render target in each frame. Then you could implement it the same way with postprocessing and have it dynamically in 3D space.

I’m just wondering whether in post-processing you only need the cube texture of the target location on the other side of the wormhole, because on your own side it’s the observer’s perspective that counts, i.e. the view camera.

Fortunately, rendering cube textures is a simple matter.

I did what I described. I mean two cube geometries in different locations with different cubemaps. Then I placed the scene camera in one of the box geometries and the cube camera in the other. The cube camera records everything that is within the other box geometry. I assign what the cube camera now renders to a sphere geometry which is in the box in which the scene camera is. So you can see everything on the sphere that the cube camera sees in the other box. And when you move around the sphere, the perspective changes accordingly. So exactly as it should be.

If I were to place other geometries in the box with the cube camera, then the cube camera would also capture them. So you could see through the wormhole what is happening on the other side.

The spherical geometry itself wouldn’t even be necessary.

Because the essential thing for the effect happens in postprocessing. This means that I have to pass what the cube camera renders to the postprocessing shader as well as the position of the scene camera and the rendered scene without the sphere geometry. Then I could reproduce the effect you showed from Shadertoy in the postprocessing shader.

The only problem now is that I’m not very familiar with postprocessing in webgpu. I can set up postprocessing and it works. Now I just need the option of using my own shader for postprocessing and unfortunately I don’t know how to do that yet, since the two webgpu postprocessing examples only use ready-made postprocessing functions but no custom shader.

Maybe an extension for webgpu would be necessary here, I don’t know.

However, with webgl2 this should be completely feasible.