I have a quick question, since my understanding of instancing doesn't match what I'm seeing.

Some background: I have been using canvases as the underlying source for sprite text labels. This works really well (very fast, 0.01 ms draw time per sprite), but each sprite costs one draw call plus an additional megabyte of THREE texture memory. The memory cost is constant: regardless of the underlying canvas size, I always see an additional megabyte used. I don't understand why, but that is not my main question.

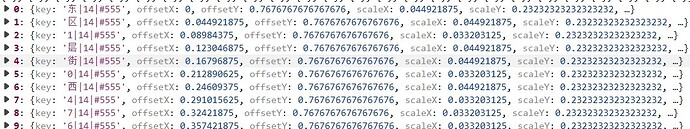

To reduce memory consumption and the number of draw calls, I figured I'd use an InstancedMesh (some code I found explained it well). Now that I have a single canvas containing all the labels, I set the UV coordinates for each vertex. This works, except that the UV mapping of the last 4 sprite vertices seems to be applied to all instanced sprites, which is not what I'd expect. Nothing in the code below produces an error, but I don't understand how UV mapping works in this scenario.

My question is: why do the UV mappings of my last 4 vertices apply to all instances in the code below?

    let geometry = new THREE.InstancedBufferGeometry();

    // 4 vertices per sprite (figured to just do the raw UV mapping directly on each point below)
    let positions = new Float32Array(10 * 3 * 4);
    let uvs = new Float32Array(10 * 2 * 4);

    // this is copied from the sprite code in three, and it positions on the screen fine
    // to simplify this, imagine a texture that is 100x100
    // and each new label starts at x = 0, y = (i * 10) (so a column of 10 rows)
    for (let i = 0, j = 0, k = 0; i < 10; ++i) {
        // x1, y1, x2, y2 are the texture cutouts for each sprite
        let x1, x2, y1, y2;

        // to keep it really explicit, only the first sprite has a unique UV cutout
        // the other 9 would be the same in this example
        if (i === 0) {
            x1 = 0;
            x2 = 1;
            y1 = 0;
            y2 = 0.1;
        // i = 1 to 9 are all what should be the second text label
        } else {
            x1 = 0;
            x2 = 1;
            y1 = 0.1;
            y2 = 0.2;
        }

        // so this is the issue above
        // expected the first sprite to be the first label
        // and all the rest would be the second label
        // except the first sprite is affected by the else block above
        // so all ten labels have the label of sprite 2
        // or whichever x1, y1, x2, y2 is last set

        // BL
        positions[j++] = -0.5;
        positions[j++] = -0.5;
        positions[j++] = 0;
        uvs[k++] = x1;
        uvs[k++] = y1;

        // BR
        positions[j++] = 0.5;
        positions[j++] = -0.5;
        positions[j++] = 0;
        uvs[k++] = x2;
        uvs[k++] = y1;

        // TR
        positions[j++] = 0.5;
        positions[j++] = 0.5;
        positions[j++] = 0;
        uvs[k++] = x2;
        uvs[k++] = y2;

        // TL
        positions[j++] = -0.5;
        positions[j++] = 0.5;
        positions[j++] = 0;
        uvs[k++] = x1;
        uvs[k++] = y2;
    }

    geometry.setAttribute('position', new THREE.BufferAttribute(positions, 3));
    geometry.setAttribute('uv', new THREE.BufferAttribute(uvs, 2));

    // position and scale set on an Object3D here, then setMatrixAt() is called
    // this all works fine
    for (let i = 0; i < text3s.length; ++i) {
        ...
    }

    // mesh is created with a custom shader material that uses a custom vertex and fragment shader
    // these shaders are basically the same as the ones for a sprite
    // only modification is to use modelViewMatrix * instanceMatrix in the vertex shader
    // which is properly setting the position of each sprite
    // no issues in these shaders, everything looks as expected
    let mesh = new THREE.InstancedMesh(...)

My guess is that because every sprite's vertices have the same positions in the array buffer, the UV mapping is somehow affected. But since each sprite is positioned correctly, I don't understand why the texture offsets seem to be stepping on each other.
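One possible reading, offered as a sketch rather than a confirmed diagnosis: with instanced rendering, every instance draws the entire base geometry. A geometry holding ten quads at the same position would therefore draw all ten quads for each instance, and the last one drawn could end up on top. Under that assumption, the base geometry would hold a single quad, and the per-label cutout would live in a per-instance attribute instead. The snippet below builds such buffers as plain typed arrays so it runs without three.js. The names `buildLabelBuffers`, `instanceUv`, and the attribute name `uvRect` are hypothetical and not part of three.js.

```javascript
// Sketch only: builds the raw buffers, no three.js import required.
// buildLabelBuffers, instanceUv and 'uvRect' are hypothetical names.
function buildLabelBuffers(rects) {
  // One shared quad (4 vertices) reused by every instance.
  const positions = new Float32Array([
    -0.5, -0.5, 0, // BL
     0.5, -0.5, 0, // BR
     0.5,  0.5, 0, // TR
    -0.5,  0.5, 0, // TL
  ]);
  // Unit UVs for the shared quad; each instance remaps them to its cutout.
  const uvs = new Float32Array([0, 0, 1, 0, 1, 1, 0, 1]);
  // Two triangles: BL-BR-TR and BL-TR-TL.
  const indices = new Uint16Array([0, 1, 2, 0, 2, 3]);
  // One (x1, y1, x2, y2) cutout per instance.
  const uvRects = new Float32Array(rects.length * 4);
  rects.forEach((r, i) => uvRects.set([r.x1, r.y1, r.x2, r.y2], i * 4));
  return { positions, uvs, indices, uvRects };
}

// CPU-side equivalent of what the vertex shader would compute,
// roughly: vUv = mix(uvRect.xy, uvRect.zw, uv);
function instanceUv(buffers, instance, vertex) {
  const [x1, y1, x2, y2] = buffers.uvRects.subarray(instance * 4, instance * 4 + 4);
  const u = buffers.uvs[vertex * 2];
  const v = buffers.uvs[vertex * 2 + 1];
  return [x1 + (x2 - x1) * u, y1 + (y2 - y1) * v];
}

// Same cutouts as in the question: sprite 0 uses row 0, sprites 1 to 9 use row 1.
const rects = [];
for (let i = 0; i < 10; ++i) {
  rects.push(i === 0
    ? { x1: 0, x2: 1, y1: 0, y2: 0.1 }
    : { x1: 0, x2: 1, y1: 0.1, y2: 0.2 });
}
const buffers = buildLabelBuffers(rects);

// Attaching the buffers in three.js would then look roughly like this
// (left as comments so the sketch runs without the library):
// geometry.setIndex(new THREE.BufferAttribute(buffers.indices, 1));
// geometry.setAttribute('position', new THREE.BufferAttribute(buffers.positions, 3));
// geometry.setAttribute('uv', new THREE.BufferAttribute(buffers.uvs, 2));
// geometry.setAttribute('uvRect', new THREE.InstancedBufferAttribute(buffers.uvRects, 4));
```

With this layout the custom vertex shader would also need to declare `attribute vec4 uvRect;` and remap `uv` before passing it to the fragment shader. The values are stored as 32-bit floats, so a cutout edge such as 0.1 is only approximately 0.1 when read back.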