I was messing about with NVIDIA’s SHaRC in Shade and thought I’d share my thoughts.

SHaRC is a very catchy name; it stands for Spatially Hashed Radiance Cache. The idea is to use a fixed amount of memory, as with just about every cache, to store radiance information. In SHaRC’s case, each entry is just a single color value with some metadata, and the cache is view-dependent.
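To make the "spatially hashed" part concrete, here is a minimal CPU-side sketch of turning a world-space position into a slot in a fixed-size table. Everything in it is an assumption for illustration: the names `cellSlot` and `mix32`, the uniform `cellSize`, and the choice of hash are mine, not SHaRC's actual code.

```typescript
// Sketch only: names, constants and the hash function are assumptions, not SHaRC's actual code.
const TABLE_SIZE = 1 << 22; // fixed capacity; a power of two lets us mask instead of modulo

type Vec3 = [number, number, number];

// 32-bit integer mixer (the MurmurHash3 finalizer). Math.imul keeps the multiply in 32 bits.
function mix32(x: number): number {
  x = Math.imul(x ^ (x >>> 16), 0x85ebca6b);
  x = Math.imul(x ^ (x >>> 13), 0xc2b2ae35);
  return (x ^ (x >>> 16)) >>> 0;
}

// Quantize a world-space position to an integer grid cell, then hash the cell to a table slot.
function cellSlot(p: Vec3, cellSize: number): number {
  const ix = Math.floor(p[0] / cellSize);
  const iy = Math.floor(p[1] / cellSize);
  const iz = Math.floor(p[2] / cellSize);
  let h = mix32(ix);
  h = mix32(h ^ iy);
  h = mix32(h ^ iz);
  return h & (TABLE_SIZE - 1);
}
```

Any two points that fall inside the same grid cell map to the same slot, which is what lets many samples share one cache entry. Different cells can still collide, so a real table also needs to store the key and compare it.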

I liked the idea of spatial hashing, but wasn’t thrilled about the view-dependent part. So my implementation records irradiance in SH2 form instead.
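As a rough illustration of why SH storage removes the view dependence, here is a single-channel sketch that accumulates radiance samples into spherical harmonics and then evaluates irradiance for any normal. It assumes 4 coefficients (bands 0 and 1); the post does not say exactly what "SH2" means in terms of coefficient count, and the function names are hypothetical.

```typescript
// Sketch only: single color channel, bands 0 and 1 (4 coefficients). The layout is an assumption.
type Vec3 = [number, number, number];
type SH4 = [number, number, number, number];

const Y0 = 0.282095; // band-0 basis constant, sqrt(1 / (4*pi))
const Y1 = 0.488603; // band-1 basis constant, sqrt(3 / (4*pi))

// Real SH basis for a unit direction, in the usual (1, y, z, x) order.
function shBasis(d: Vec3): SH4 {
  return [Y0, Y1 * d[1], Y1 * d[2], Y1 * d[0]];
}

// Add one radiance sample arriving from direction d. `weight` is the solid angle the
// sample stands for (4*pi / N for N uniformly distributed directions).
function shAddSample(sh: SH4, d: Vec3, radiance: number, weight: number): void {
  const b = shBasis(d);
  for (let i = 0; i < 4; i++) sh[i] += radiance * b[i] * weight;
}

// Irradiance for surface normal n: convolve with the clamped cosine lobe,
// which scales band 0 by pi and band 1 by 2*pi/3.
function shIrradiance(sh: SH4, n: Vec3): number {
  const b = shBasis(n);
  const band1 = sh[1] * b[1] + sh[2] * b[2] + sh[3] * b[3];
  return Math.PI * sh[0] * b[0] + ((2 * Math.PI) / 3) * band1;
}
```

With uniform radiance of 1 from every direction, `shIrradiance` comes out at roughly π for any normal, which is the expected irradiance of a uniform environment.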

I didn’t like the “resolve + compact” steps, so I replaced them with a fairly traditional eviction mechanism instead. When we write to the cache and no cell can be found to write to, we add the hash key to an eviction request stream. Eviction is then performed in a separate indirect dispatch.

SHaRC’s implementation splits the hash table into “buckets” of 32 elements, which makes the whole eviction mechanism easier, as we only need to consider at most 32 candidates. Anyway, it works well for me and allows data to be retained pretty much indefinitely, unlike NVIDIA’s version.
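The write-then-evict flow can be sketched on the CPU like this. It is a simplified, single-threaded stand-in: the least-recently-used policy, the `lastUsed` frame stamp, the zero "empty" sentinel and all names are assumptions, and a GPU version would need atomics for the key writes and for appending to the request stream.

```typescript
// Sketch only: single-threaded stand-in for a GPU hash table with 32-slot buckets.
const BUCKET_SIZE = 32;
const EMPTY_KEY = 0; // assumed sentinel, so real keys must be non-zero

interface HashCache {
  keys: Uint32Array;          // one key per slot
  lastUsed: Uint32Array;      // frame index of the last write to each slot
  bucketCount: number;
  evictionRequests: number[]; // keys that found no free slot this frame
}

function createCache(bucketCount: number): HashCache {
  const slots = bucketCount * BUCKET_SIZE;
  return { keys: new Uint32Array(slots), lastUsed: new Uint32Array(slots), bucketCount, evictionRequests: [] };
}

function bucketStart(cache: HashCache, key: number): number {
  return (key % cache.bucketCount) * BUCKET_SIZE;
}

// Find or claim a slot for `key`. Returns -1 and queues an eviction request if the bucket is full.
function touch(cache: HashCache, key: number, frame: number): number {
  const start = bucketStart(cache, key);
  for (let i = start; i < start + BUCKET_SIZE; i++) {
    if (cache.keys[i] === key || cache.keys[i] === EMPTY_KEY) {
      cache.keys[i] = key;
      cache.lastUsed[i] = frame;
      return i;
    }
  }
  cache.evictionRequests.push(key);
  return -1;
}

// Separate pass: for each request, replace the least recently used slot in its bucket.
function runEviction(cache: HashCache, frame: number): void {
  for (const key of cache.evictionRequests) {
    const start = bucketStart(cache, key);
    let victim = start;
    for (let i = start; i < start + BUCKET_SIZE; i++) {
      if (cache.keys[i] === key) { victim = -1; break; } // an earlier request already inserted it
      if (cache.lastUsed[i] < cache.lastUsed[victim]) victim = i;
    }
    if (victim >= 0) {
      cache.keys[victim] = key;
      cache.lastUsed[victim] = frame;
    }
  }
  cache.evictionRequests.length = 0;
}
```

Because a key can only ever live in its own bucket, the eviction pass never looks at more than 32 slots per request. A real implementation would also reset the evicted slot's payload.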

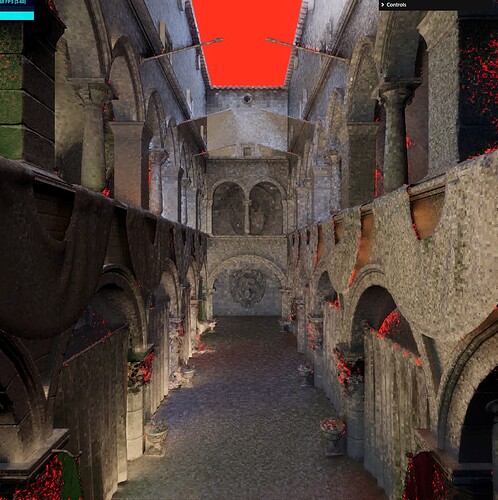

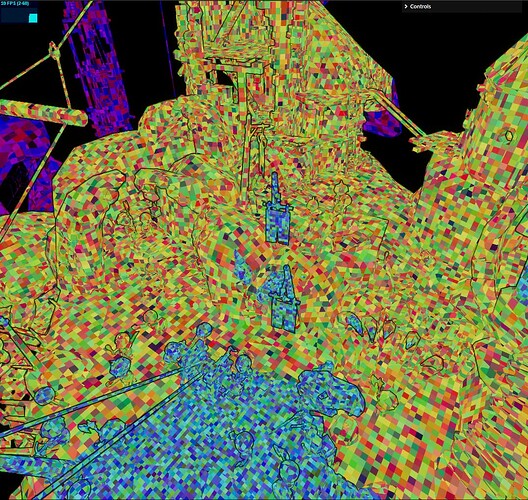

Here are some pictures of my implementation, showing a debug view of the recorded irradiance and the hash cells.

The irradiance is obtained from the SSR pass, and we perform temporal accumulation for noise reduction.
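One common way to do this kind of temporal accumulation is a running average with a capped sample count, so that a cell converges quickly at first and then behaves like an exponential moving average. The sketch below assumes that scheme; the post does not say which blend is actually used, and `accumulate` and `maxCount` are hypothetical names.

```typescript
// Sketch only: per-cell running average with a capped history length.
interface Accum {
  value: number; // accumulated value (one channel, for brevity)
  count: number; // number of samples blended so far, capped at maxCount
}

function accumulate(prev: Accum, sample: number, maxCount: number): Accum {
  // While count < maxCount this is an exact running mean; afterwards the blend
  // weight stays at 1 / maxCount, so old samples fade out exponentially.
  const count = Math.min(prev.count + 1, maxCount);
  return { value: prev.value + (sample - prev.value) / count, count };
}
```

A larger `maxCount` gives a smoother result but reacts more slowly when the lighting changes.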

For comparison, here’s a version without temporal accumulation:

Take my word for it - it does a lot.

I don’t have anything specific to share here really, except for the fact that a SHaRC-like technique can be done in WebGPU and it works very well.

It’s way more useful for an actual full path-tracer than just as an addition to a screen-space technique.

The cool thing is that it strikes a really nice balance between resolution, memory usage, performance and ease of implementation. My implementation uses the recommended 2^22 (4,194,304) cells, which is enough for about 2 full views of moderate complexity in my tests. You can mess around with the resolution scale to get more or less out of it.

Here are some more screenshots, in no particular order

The red is used to represent cache misses - i.e. there is no data in the cache for those areas.

For reference, here are color renders of the scenes above

Possible Applications

- Pre-build a cache with a path tracer and ship it along with the scene, then use an SSR-like shader that actually samples from the cache to get global illumination.

- Use the cache to aid SSR with high-roughness surfaces, since we can sample the cache at different cell resolutions, allowing something akin to cone tracing.

- Run a simplified scene representation with full ray tracing to populate the cache at run-time, and use it just for diffuse global illumination, where detail is less visible anyway.
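For the cone-tracing-like idea, one way to pick which cell resolution to sample is to match the cell size to the cone's footprint at the hit distance. This is only a sketch of that relation, assuming cell size doubles with each level; `coneLevel`, `cellSizeAtLevel` and their parameters are hypothetical, not part of SHaRC or of my implementation.

```typescript
// Sketch only: assumes cell size doubles per level, i.e. size = baseCellSize * 2^level.
function cellSizeAtLevel(baseCellSize: number, level: number): number {
  return baseCellSize * Math.pow(2, level);
}

// Pick the coarsest level whose cells are still no larger than the cone footprint.
// tanHalfAngle grows with roughness: a wider cone means a coarser level at the same distance.
function coneLevel(distance: number, tanHalfAngle: number, baseCellSize: number, maxLevel: number): number {
  const footprint = 2 * distance * tanHalfAngle; // cone diameter at the hit point
  const level = Math.floor(Math.log2(footprint / baseCellSize));
  return Math.min(Math.max(level, 0), maxLevel);
}
```

For example, a cone with `tanHalfAngle = 0.05` hitting something 10 units away has a footprint of about 1 unit, so with a base cell size of 0.1 it would sample level 3 (cells of 0.8 units).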

I love the results, but yeah - I’m not sure what to use this for

Why do this?

Multiple reasons. I read about SHaRC’s existence a while ago, just after it was released. Before that, close to a decade ago, I messed around with spatial hashes.

A couple of years back I studied AMD’s GI solution called Brixelizer, which is a pretty cool piece of tech that does something similar. It doesn’t use a spatial hash, but it records irradiance from screen-space data for later ray tracing. Thinking about SHaRC reminded me of Brixelizer, and I thought to myself “why not give it a try?”

Recently MachineGames released their Indiana Jones game, and in a few of their tech presentations they mentioned integrating NVIDIA tech. It was later integrated into idTech’s “Doom: The Dark Ages” as well, and according to the guys from idTech it was one of the biggest improvements to overall visual fidelity, which made me extra curious about the technique.

A few weeks back I was working on an unrelated problem that could be traditionally solved using a hash map, but the problem was GPU-related. I thought to myself:

“well, I don’t see another way, but it seems like a dumb idea, since hash maps have random access patterns, surely it’s not suited to the GPU?..”

Did some research, implemented the hash map - perf was excellent. Research said that it’s a pretty good technique on the GPU as well, so it got me thinking about SHaRC again.