I tried to convert the Marine model, saved in the legacy JSON format from earlier three.js revisions, with the legacythree2gltf converter:

marine.zip (2.1 MB)

The first issue is that the JSON file is read with `readFileSync`, but the texture is loaded with a regular Three.js `TextureLoader`. I think that is why running `...three.js\utils\converters>node .\legacythree2gltf.js .\marine.json` simply finished without any output. I added some `console.log` calls and traced execution to this point, where it stopped:

LegacyJSONLoader: `THREE.Loader.prototype.initMaterials` -> Loader: `this.createMaterial( materials[ i ] ... )` -> `json.map = loadTexture(...)` -> `texture = _textureLoader.load( fullPath )` -> TextureLoader: `loader.load( url ...)`

I would expect an error there, but nothing showed up in my console.

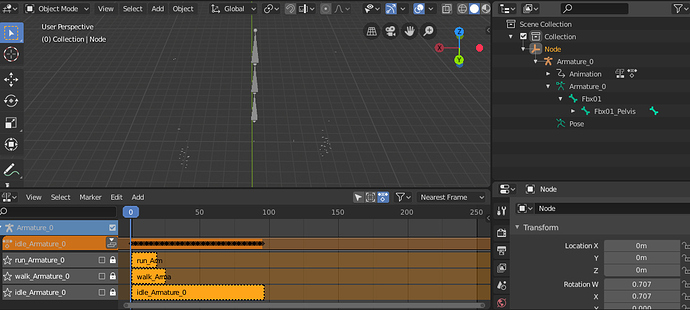
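Node prints uncaught exceptions by default, so a completely silent exit suggests the load never called back rather than threw (presumably because there is no DOM image element to decode into under Node). Under that assumption, a minimal diagnostic sketch using Node's `beforeExit` event could confirm it. `createTracker`, `installExitDiagnostics`, and the step names are hypothetical helpers, not part of the converter:

```javascript
// Hypothetical diagnostic helper (not part of legacythree2gltf.js): it reports
// which expected steps never finished when Node runs out of work and exits.

// Tracks named steps that are expected to complete asynchronously.
function createTracker(steps) {
  const pending = new Set(steps);
  return {
    markDone(step) {
      pending.delete(step);
    },
    describe() {
      if (pending.size === 0) {
        return "All tracked steps completed.";
      }
      return "Event loop drained before these steps finished: " + [...pending].join(", ");
    },
  };
}

// 'beforeExit' is emitted when the event loop has no more work. It is NOT emitted
// on an explicit process.exit() or an uncaught exception, so if it fires with steps
// still pending, those callbacks were simply never scheduled.
function installExitDiagnostics(tracker) {
  process.once("beforeExit", () => {
    console.error(tracker.describe());
  });
}

module.exports = { createTracker, installExitDiagnostics };
```

For example, create a tracker for `"texture load"` and `"glTF export"` at the top of the converter script, pass it to `installExitDiagnostics`, and call `markDone` from the export callback (and from a texture `onLoad` callback, if you add one). If the message lists `texture load`, the texture request never completed.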

Next, I commented out the material loading in LegacyJSONLoader, and the process completed and produced a GLTF file. Unfortunately, when I import it into Blender, I only get the skeleton and the animations, not the mesh (the materials are missing too, but I expected that).

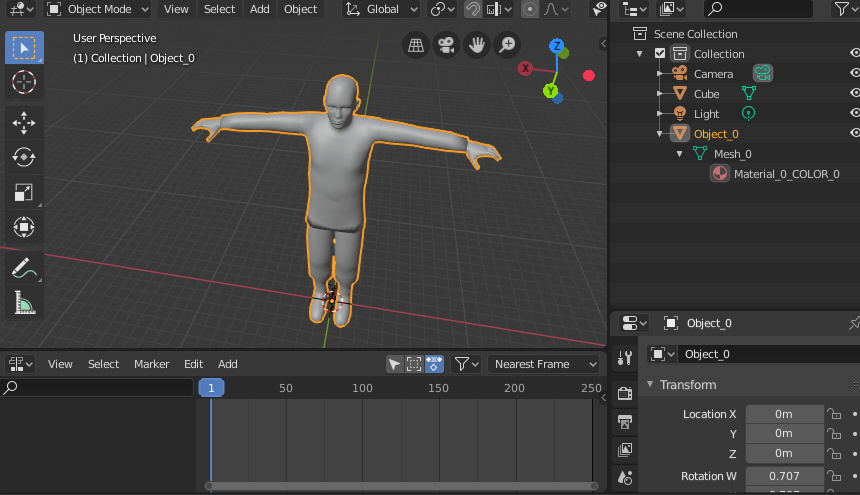

With the online GLTF converter I get the mesh, but no animations, skeleton, or materials.

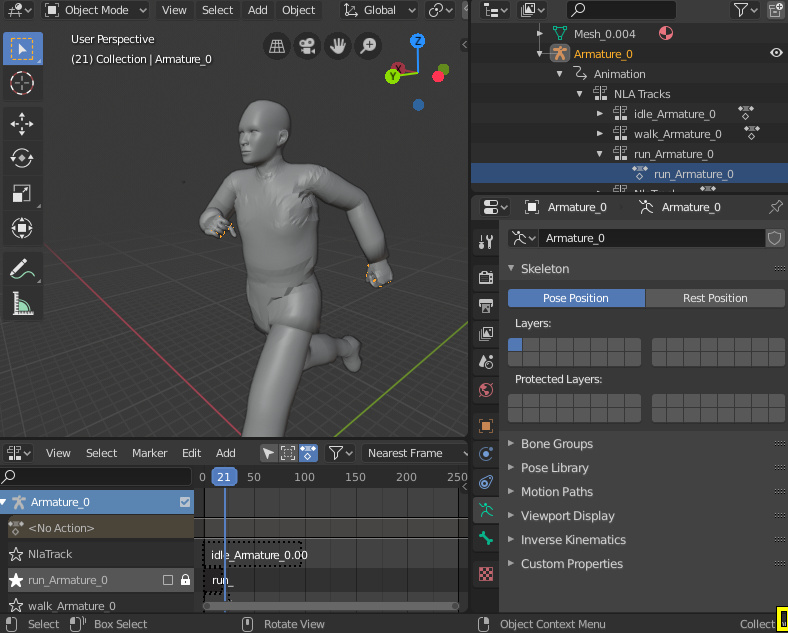

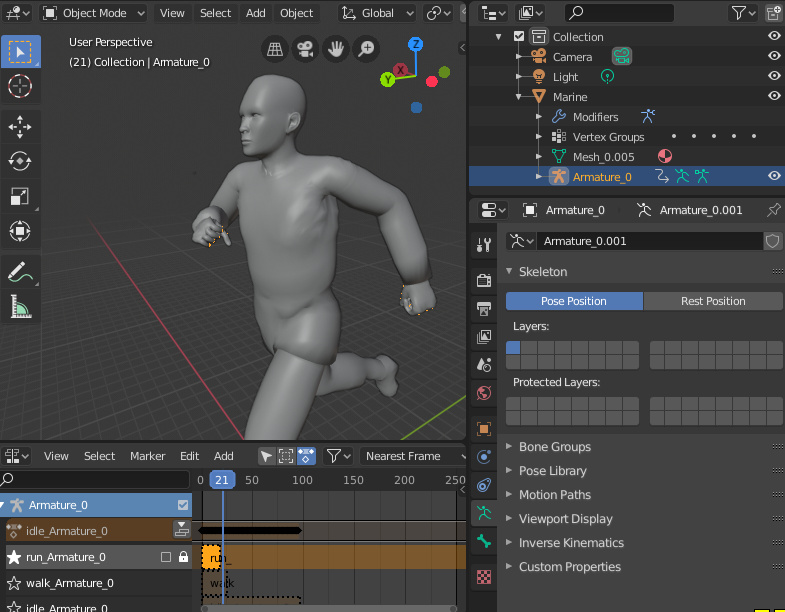

How can I convert the Marine to the new GLTF format so that it keeps the mesh, the textured material, the skeleton, and the animations (idle, walk, and run)?