Wow. How does that work? You simply give DeepSeek a prompt: “write a cool shader for me”?

Yeah, basically, but I have to provide a bunch of instructions and context to help keep it from going off the rails. It fails to produce working code about 50% of the time, but… when the shaders work, they’re usually pretty cool. I tried having it debug its own code when a shader is broken, but it’s often faster to just regenerate. It takes about 10 seconds.

I give it access to some functions like hsv2rgb and noise, and it uses them.

Simple prompts are pretty reliable like:

“all red”

or “green circle”

but even with “checkerboard” it starts to get creative…

and soon the prompts don’t resemble the shader except in abstract ways.

It’s really fascinating…

Awesome. Would love to have a go as well. I need more time!

I’m using Ollama and deepseek-r1:32b.

deepseek-r1:14b also works but only about 30% of the time.
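
For anyone who wants to try the same setup, a minimal sketch of one round trip to the model might look like the following. It assumes Ollama is serving its default local endpoint and that deepseek-r1 puts its reasoning inline between `<think>` tags. `stripThinking` and `askModel` are hypothetical names, not the code behind the gallery.

```javascript
// Assumption: Ollama is running locally on its default port.
const OLLAMA_URL = "http://localhost:11434/api/generate";

// Reasoning models may emit their chain of thought inline; drop it before use.
function stripThinking(reply) {
  return reply.replace(/<think>[\s\S]*?<\/think>/g, "").trim();
}

// Send one prompt and resolve with the model's text, reasoning removed.
async function askModel(prompt, model = "deepseek-r1:32b") {
  const res = await fetch(OLLAMA_URL, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // stream: false asks for a single JSON object instead of a token stream.
    body: JSON.stringify({ model, prompt, stream: false }),
  });
  if (!res.ok) throw new Error("Ollama returned HTTP " + res.status);
  const data = await res.json();
  return stripThinking(data.response);
}
```

Swapping the `model` argument to `"deepseek-r1:14b"` would be enough to compare the two success rates mentioned above.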

Wow, there’s no latency, even after you added 50 blocks. The fractals are most interesting… I thought they require iterations (raster), but these have fidelity to a subpixel “thread” (vector?)! There is banding, but as far as I zoom the fractal quality is lossless. I suppose I could Google Mandelbrots in general… but your radial bursts have a pixel seam. I’m struggling to appreciate the quality and performance.

yeah… the template I’m giving it is just a list of available functions / constants,

and:

vec4 Effect2( vec2 uv ){

}

When it creates a shader that crashes… I just have it generate a new one.
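
The "template plus regenerate on failure" loop described above could be sketched roughly like this. The instruction wording in `buildPrompt`, the helper names, and the injected `generate` and `isValid` callbacks are all assumptions for illustration. In a browser, `isValid` might compile the source and check `gl.getShaderParameter(shader, gl.COMPILE_STATUS)`.

```javascript
// The fixed part of every request: helper names plus the empty entry point.
// The surrounding instruction text is illustrative, not the author's prompt.
function buildPrompt(idea, helperNames) {
  return [
    "Fill in the body of this GLSL function. Reply with the function only.",
    "Available functions and constants: " + helperNames.join(", "),
    "vec4 Effect2( vec2 uv ){",
    "}",
    "Effect to create: " + idea,
  ].join("\n");
}

// Pull the Effect2 function out of a chatty reply by matching braces.
// The last occurrence is used because a model may draft code before its final answer.
function extractShader(reply) {
  const start = reply.lastIndexOf("vec4 Effect2");
  if (start === -1) return null;
  let depth = 0;
  for (let i = reply.indexOf("{", start); i !== -1 && i < reply.length; i++) {
    if (reply[i] === "{") depth++;
    else if (reply[i] === "}" && --depth === 0) return reply.slice(start, i + 1);
  }
  return null; // unbalanced braces: treat as a failed generation
}

// Keep asking until a reply contains a function that passes the validity check.
async function generateUntilValid(generate, isValid, maxTries = 5) {
  for (let attempt = 1; attempt <= maxTries; attempt++) {
    const source = extractShader(await generate());
    if (source !== null && isValid(source)) return { source, attempt };
  }
  return null; // give up after maxTries failures
}
```

With a failure rate near 50%, five tries would fail all at once only about 3% of the time, so a small `maxTries` is probably enough.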

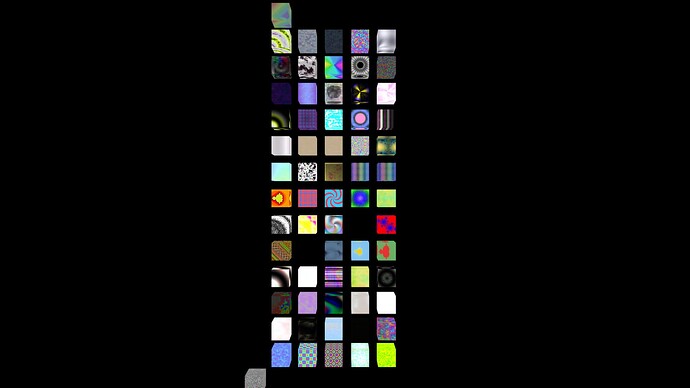

It’s getting unwieldy tho. I’m up to about 500 different shaders and the gallery is getting really chuggy.

I have to do some visibility culling or smth.
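
One possible shape for that culling, as a sketch only: three.js already skips meshes outside the camera frustum by default, so this assumes the cost comes from tiles that are technically in view but too far away to matter. Tiles are plain objects with hypothetical `x`, `y` and `visible` fields standing in for the gallery meshes.

```javascript
// Mark tiles within `radius` of the camera's focus point as visible; hide the rest.
// Returns how many tiles remain visible.
function cullByDistance(tiles, focus, radius) {
  const r2 = radius * radius; // compare squared distances to avoid a sqrt per tile
  let shown = 0;
  for (const tile of tiles) {
    const dx = tile.x - focus.x;
    const dy = tile.y - focus.y;
    tile.visible = dx * dx + dy * dy <= r2;
    if (tile.visible) shown++;
  }
  return shown;
}

// Example: a 25 x 20 wall of 500 tiles, one unit apart.
const wall = [];
for (let i = 0; i < 500; i++) {
  wall.push({ x: i % 25, y: Math.floor(i / 25), visible: true });
}
const stillDrawn = cullByDistance(wall, { x: 12, y: 10 }, 4);
```

Running this whenever the camera moves, rather than every frame, would keep the check itself cheap.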

Looks great, I like the concept and some of these are pretty funky!

It’s however a pain navigating around, OrbitControls aren’t ideal for this kind of scene - it would be much nicer with a camera with a controllable position and fixed rotation.
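
A camera with a controllable position and fixed rotation could be sketched as below. This assumes the gallery sits on the z = 0 plane with a perspective camera looking straight at it. The function names and the plain `{x, y, z}` camera object are hypothetical stand-ins for whatever the scene actually uses.

```javascript
// World units covered by one screen pixel at `distance` from a perspective
// camera with vertical field of view `fovDeg`.
function unitsPerPixel(distance, fovDeg, viewportHeightPx) {
  const visibleHeight = 2 * distance * Math.tan((fovDeg * Math.PI) / 360);
  return visibleHeight / viewportHeightPx;
}

// Apply a mouse drag (in pixels) to the camera position; rotation is never touched.
// Dragging right moves the camera left so the wall follows the cursor.
function panCamera(camera, dxPx, dyPx, fovDeg, viewportHeightPx) {
  const scale = unitsPerPixel(camera.z, fovDeg, viewportHeightPx);
  return { x: camera.x - dxPx * scale, y: camera.y + dyPx * scale, z: camera.z };
}

// Zoom by moving along the view axis, clamped so the camera stays in front of the wall.
function zoomCamera(camera, wheelDelta, minZ = 0.5, maxZ = 50) {
  const z = Math.min(maxZ, Math.max(minZ, camera.z * Math.exp(wheelDelta * 0.001)));
  return { x: camera.x, y: camera.y, z };
}
```

Setting `enableRotate = false` on the existing OrbitControls may get part of the way there with less code.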

on it boss.

It’s fantastic - really very promising stuff !

This is the best. It reminds me of Miró artworks. How was it produced?

I congratulate @manthrax for having triggered a valuable discussion on this topic. I also don’t begrudge him the attention this thread enjoys.

That said, I do think that this is a typical case of “a solution in search of a problem”. In other words: nothing more than a random noise generator. From the various answers this thread has generated, I acknowledge that certain results seem interesting to some. But so does every other thread on this forum.

To discern “interesting” from “dull” shader proposals still requires human intelligence, imo.

A well-designed and precisely controlled noise generator is always in demand.

For example, I like the powerful noise system of Cinema 4D and use it to generate the following planets for my future game, “Entropy”:

It would be nice to have a good set of similar shaders to choose from.

Also, sometimes random patterns can help wake up an artist’s imagination.

I changed how the camera controls work. Dunno if it’s an improvement, but at least you can see the effect source and download the ones you like now.

The red/blue fractal code is here:

    //prompt: a fractal
    vec4 Effect2(vec2 uv) {
        const float max_iter = 100.0;

        // Initialize complex number z and constant c
        vec2 z = (2.0 * uv - 1.0);
        vec2 c = vec2(-0.4, 0.6); // Julia set constant

        float iter = 0.0;
        while(iter < max_iter) {
            // Complex multiplication and addition: z^2 + c
            z = vec2(z.x*z.x - z.y*z.y, 2.0 * z.x*z.y) + c;
            if(length(z) > 2.0) break;
            iter += 1.0;
        }

        // Color based on iteration count
        float t = iter / max_iter;
        vec3 color = mix(vec3(1.0, 0.0, 0.0), vec3(0.0, 0.0, 1.0), t);
        return vec4(color, 1.0);
    }
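
On the earlier question about iterations: the shader above does iterate, but it does so per pixel on every frame, so no raster is stored and zooming just re-evaluates the function at finer coordinates. A CPU port for a single pixel, written here only as an illustration (`juliaIterations` and `juliaColor` are hypothetical names), makes the loop easier to poke at:

```javascript
// CPU port of the shader's loop: counts how many steps of z = z^2 + c
// pass before |z| exceeds 2, for one pixel at (u, v) in [0, 1] x [0, 1].
function juliaIterations(u, v, maxIter = 100) {
  let zx = 2 * u - 1;
  let zy = 2 * v - 1;
  const cx = -0.4, cy = 0.6; // same Julia set constant as the shader
  let iter = 0;
  while (iter < maxIter) {
    // Compute both components from the old z before overwriting either.
    const nx = zx * zx - zy * zy + cx;
    const ny = 2 * zx * zy + cy;
    zx = nx;
    zy = ny;
    if (Math.hypot(zx, zy) > 2) break;
    iter++;
  }
  return iter;
}

// Same red-to-blue blend as the shader's mix() call, as [r, g, b].
function juliaColor(u, v, maxIter = 100) {
  const t = juliaIterations(u, v, maxIter) / maxIter;
  return [1 - t, 0, t];
}
```

Because the iteration count is a whole number, `t` can only take 101 distinct values, which is one likely source of the banding mentioned earlier.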

Tragically, I changed the noise texture being used, and that one seems to have changed and isn’t recognizable anymore… so I think it was using the noise texture as an input. If I find it again, I’ll send you the shader.

I added the ability to click them and see the source, and also download a standalone version of the effect if u want it…

data_734.html (8.3 KB)

I think it was this one, but it mutated when I switched the noise texture to be generated at runtime (to enable standalone)

data_106.html (7.5 KB)

    let effect2=`vec4 Effect2(vec2 uv) {
        vec2 pos = uv * 4.0 - 1.0;
        float time = iTime * 0.5;

        int max_steps = 100;
        int steps = 0;
        float trap = 0.1;
        vec3 color = vec3(0.0);

        while (steps < max_steps) {
            pos.x += sin(time + pos.y * 2.0) * 0.5;
            pos.y += cos(time - pos.x * 2.0) * 0.5;

            float noise_val = noise(vec3(pos, time));
            pos *= 1.0 + noise_val * 0.2;

            if (length(pos) < trap) {
                color = hsl(float(steps)/float(max_steps), 1.0, 0.5);
                break;
            }
            steps++;
        }

        return vec4(color, 1.0);
    }`;

Thanks a lot !

This is not a tragedy, but a gift - now I have a lot of space for experiments.

The source code window with download is very useful.

Another idea: if you plan to increase the number of effects, then sorting them by category or tagging them somehow would be a great help in navigation and searching.

Good luck in DeepSeek-ing !

100% yeah tagging would be really good to make a maintainable library.
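
As a starting point before involving a model, tags could be derived mechanically from each shader's source. The sketch below is only that: the tag names and rules are made up for illustration, and it assumes sources keep the `//prompt:` comment seen in the fractal example.

```javascript
// Hypothetical feature-to-tag rules; the tag names are illustrative only.
const TAG_RULES = [
  { tag: "animated", pattern: /\biTime\b/ },
  { tag: "noise", pattern: /\bnoise\s*\(/ },
  { tag: "iterative", pattern: /\b(while|for)\s*\(/ },
  { tag: "color-wheel", pattern: /\b(hsl|hsv2rgb)\s*\(/ },
];

// Return the original prompt (if recorded in a comment) plus any matching tags.
function tagShader(source) {
  const tags = TAG_RULES.filter((rule) => rule.pattern.test(source)).map((rule) => rule.tag);
  const prompt = source.match(/^\s*\/\/\s*prompt:\s*(.+)$/m);
  return { prompt: prompt ? prompt[1].trim() : null, tags };
}
```

The gallery could then filter on `tags` or search the `prompt` text, both of which stay in sync with the source automatically.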

Sorry, maybe I am too intrusive, but this project intrigues me.

Have you thought about a function to generate a set of shaders similar to a selected one?

I am not sure if it’s possible, but if we had some measure describing “similarity”,

then it would be possible to request “something completely similar” :).
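
One crude candidate for such a measure is Jaccard similarity over the tokens in each shader's source. This is a sketch under a big assumption: textual overlap is only a rough proxy for how two effects look, and comparing rendered thumbnails might work better. All names here are hypothetical.

```javascript
// Split source into a set of identifiers and numeric literals.
function tokens(source) {
  return new Set(source.match(/[A-Za-z_]\w*|\d+\.?\d*/g) || []);
}

// Jaccard similarity: shared tokens divided by all distinct tokens (0 to 1).
function similarity(a, b) {
  const ta = tokens(a);
  const tb = tokens(b);
  if (ta.size === 0 && tb.size === 0) return 1;
  let shared = 0;
  for (const t of ta) if (tb.has(t)) shared++;
  return shared / (ta.size + tb.size - shared);
}

// Rank a library of shader sources against the selected one, best match first.
function mostSimilar(selected, library, count) {
  return library
    .map((source) => ({ source, score: similarity(selected, source) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, count);
}
```

The top-ranked sources could also be pasted into the next prompt as examples, which is one way to ask the model for "more like this".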

Would it be feasible for AI to tag the shaders automatically?