I am working on a feature that lets me switch between WebGLRenderer and RaytracingRenderer. It works so far, but with a few issues:

- Light intensities have to be set incredibly high, which makes my lighting look quite different in one renderer than in the other.

- My PlaneBufferGeometry, which I displace through its position buffer, shows up correctly in the WebGLRenderer but renders as a flat plane in the RaytracingRenderer.
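For the lighting mismatch, one way to keep the two looks comparable while switching is to store each light's authored intensity once and derive a per-renderer value from it. This is a minimal sketch that assumes a single empirically tuned multiplier per renderer is enough. `INTENSITY_SCALE` and `applyLightIntensity` are hypothetical names, and the raytracing factor of 50 is a placeholder to tune by eye, not a known constant.

```javascript
// Hypothetical per-renderer multipliers; the raytracing value is a
// placeholder to be tuned by eye, not a documented constant.
const INTENSITY_SCALE = { webgl: 1, raytracing: 50 };

// Store each light's authored intensity once in userData, then derive the
// intensity for the active renderer from it, so repeated switches never
// compound the scaling.
function applyLightIntensity(lights, rendererKind) {
  const scale = INTENSITY_SCALE[rendererKind];
  if (scale === undefined) {
    throw new Error(`Unknown renderer kind: ${rendererKind}`);
  }
  for (const light of lights) {
    if (light.userData.baseIntensity === undefined) {
      light.userData.baseIntensity = light.intensity;
    }
    light.intensity = light.userData.baseIntensity * scale;
  }
}

// Usage with three.js lights (Light has .intensity, Object3D has .userData):
// applyLightIntensity([pointLight, ambientLight], 'raytracing');
```

Because the base value lives in `userData`, switching back and forth always lands on the same intensity for each renderer.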

This is how I’m displacing the mesh. I’m happy to provide more code, but it’s becoming hard to separate from the larger class around it, so I’ve pulled out the parts I think are most relevant.

```javascript
initGeometry(v) {
  // v x v plane with this.state.detail segments along each side
  this.geometry = new THREE.PlaneBufferGeometry(
    v,
    v,
    this.state.detail,
    this.state.detail
  );

  this.initializeMesh();
  this.displaceGeometry();
}

initializeMesh() {
  this.material = new THREE.MeshNormalMaterial();

  const mirrorMaterialSmooth = new THREE.MeshPhongMaterial({
    color: 0xffaa00,
    specular: 0x222222,
    shininess: 10000,
    vertexColors: THREE.NoColors,
    flatShading: false
  });

  // mirror/reflectivity set the way the three.js raytracing examples do
  mirrorMaterialSmooth.mirror = true;
  mirrorMaterialSmooth.reflectivity = 0.3;

  this.mesh = new THREE.Mesh(this.geometry, mirrorMaterialSmooth);
  this.scene.add(this.mesh);
}

displaceGeometry() {
  // Elevation image as a flat RGBA array (4 values per pixel)
  const displacement_buffer = this.elevation.getBufferArray();
  const positionAttr = this.geometry.getAttribute('position');
  const positions = positionAttr.array;
  const uvs = this.geometry.getAttribute('uv').array;
  const count = positionAttr.count;

  for (let i = 0; i < count; i++) {
    const u = uvs[i * 2];
    const v = uvs[i * 2 + 1];

    // Map the vertex UV to a pixel in the elevation image
    const x = Math.floor(u * (this.width - 1.0));
    const y = Math.floor(v * (this.height - 1.0));

    // Row stride is the image width, not its height
    const d_index = (y * this.width + x) * 4;
    const r = displacement_buffer[d_index];

    // Displace along z, the plane's normal before any rotation
    positions[i * 3 + 2] = r * this.amplitude;
  }

  positionAttr.needsUpdate = true;

  // Recompute smooth normals from the displaced positions; this also flags
  // the normal attribute for re-upload. (BufferGeometry has no face normals,
  // and the UVs are unchanged, so neither needs updating.)
  this.geometry.computeVertexNormals();

  this.geometry.computeBoundingBox();
  this.geometry.computeBoundingSphere();

  // Shift the mesh so its lowest point sits at z = 0
  this.geometry.translate(0, 0, -this.geometry.boundingBox.min.z);
}
```
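The UV-to-pixel lookup inside the loop can be isolated as a small pure function, which makes the indexing easy to test on its own. This is a sketch assuming the elevation buffer is row-major RGBA with `width` pixels per row; `uvToRgbaIndex` is a hypothetical helper, and the clamping is an addition that the original loop does not do.

```javascript
// Map a vertex UV in [0, 1] to the index of the red channel of the pixel
// found by flooring, in a row-major RGBA buffer (4 values per pixel).
function uvToRgbaIndex(u, v, width, height) {
  // Clamp so slightly out-of-range UVs cannot index outside the buffer
  const cu = Math.min(Math.max(u, 0), 1);
  const cv = Math.min(Math.max(v, 0), 1);
  const x = Math.floor(cu * (width - 1));
  const y = Math.floor(cv * (height - 1));
  // Each row holds `width` pixels, so the row stride is width, not height
  return (y * width + x) * 4;
}
```

For a square elevation image `y * width` and `y * height` give the same result, so the stride only matters for non-square images. If `getBufferArray()` stores the top row first (as canvas `ImageData` does), the heightmap may come out vertically flipped relative to the plane's UVs, in which case `height - 1 - y` would be the row to read; that depends on how the buffer is built.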

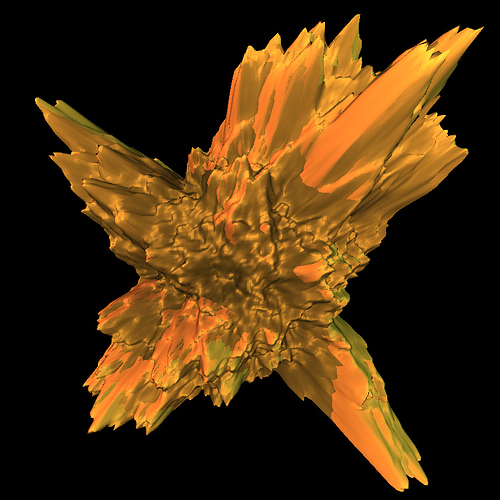

Displaced test mesh

Same mesh through RaytracingRenderer
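Since the raytraced image shows a flat plane, a quick first check is whether the displaced heights are actually present in the position attribute at render time. This is a minimal sketch assuming direct access to the mesh's geometry; `zRange` is a hypothetical helper, not part of three.js.

```javascript
// Return the min and max z values stored in a flat xyz position array,
// such as geometry.getAttribute('position').array.
function zRange(positions) {
  let min = Infinity;
  let max = -Infinity;
  // z is every third value, starting at index 2
  for (let i = 2; i < positions.length; i += 3) {
    const z = positions[i];
    if (z < min) min = z;
    if (z > max) max = z;
  }
  return { min, max };
}

// Hypothetical placement, just before calling the raytracer's render():
// const { min, max } = zRange(this.mesh.geometry.getAttribute('position').array);
// console.log('z range', min, max);
```

A non-zero `max - min` on the main thread means the displacement is in the geometry there, which narrows the problem to how the RaytracingRenderer receives or rebuilds the mesh.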