Thanks ![]()

![]()

I have but it didn’t explain what is still eluding me.

- It mostly addresses the ancient practice of actually writing logic and JS using eval. Of course its slower to execute a function in a loop with

eval()every time, or access properties. But is it still used in some way? I need to research this but my guess is that it could be present when using something likeimport()and lazy loading code. Even besides this, if I only run it a few times to generate these functions, does it really impact performance, ie does any of this apply? - security. I have very very limited knowledge on this topic. I imagine that if some client code requests say a json from the server, and

eval()s that, it can be very dangerous. But what happens when you download some client code, which runseval()only once during app load?

Is it as dangerous to have some inline code that evals some hardcoded function like this and just appends a variable at the top?

I don’t really care about eval() if there is something that does the exact same thing and is considered less dangerous but i wasn’t able to get the Function() syntax to do the same thing, or to do anything for that matter. I didn’t understand how to write it.

My concern is that there is a system that is in my view bugged (looks like it has been encountered by others) and that can be solved by eval() or by a complete work around. It makes me very sad that this seems to be taken at face value as a limitation, when there are very simple and robust fixes for it.

There were some recent PRs that change the way onBeforeCompile is cloned, which if it happens now may make eval() actually more dangerous, (can it somehow end up in a loader?). I want to fully understand the problem, and the proposed solutions. Hence the reason for being a bit vocal on this topic ![]()

On the topic of making onBeforeCompile actually work, what about overriding the toString() of the function itself? I never did something like that but it’s going to be my next shot.

Not that one, this is a very simple one:

I would like to ask you to personally revisit this since it looks like a constructive discussion is starting to happen here ![]()

It’s a few lines of code compared to a complete refactor of the material system, and in my view it just happens to be related to glsl extensions - it actually addresses the issues of three.js being “locked in” more generally.

In my view it’s the same issue as:

Both have the pattern

//inside WebGLRenderer

const _someGLThing = _somePrivateClass.getStuff( somethingFromOutside )

My proposal is super simple:

const _someGLThing = somethingFromOutside.stuff || _somePrivateClass.getStuff( somethingFromOutside)

Nothing more than that.

The material refactor idea relates more to the issue of having a shader graph system. I think my idea is very simple but im having a really hard time explaining it.

I have certain assumptions:

- I have never seen a shader graph without a visual representation

- To present the graph you might need a fairly sophisticated rendering layer, nodes, edges, text

- To efficiently make the graph you need a visual tool. Something where you click on an output of one node, and link to the input of another. Where you can move nodes around, create and delete them etc.

- I imagine you never see code as you can find in the nodes example of three.js. No one is ever going to call

new Node()“manually” *. - At the end of the day, OpenGL/Webgl has to be provided with a properly formatted valid GLSL string, that has attributes, uniforms, varyings and gl_

I imagine some kind of a web based tool that renders the shader graph using the Canvas, or React, or something like that. Makes sense for sketchfab since they’re an entire platform not just the engine? I don’t use three’s editor, but i imagine that such a tool would be a sibling of that.

From chapter 12:

This approach allows us to retain a library of reusable shader nodes (see Table 12.1), which are independent of the final renderer type.

I think this reinforces my case, but there are things that are not clear about “moving nodes to src”. I wish there was more discussion around this.

Nonetheless, thanks to complex nodes that use functions rather than inline code (see computeSunLightShading in Listing 12.4), the resulting code is still surprisingly

straightforward and readable.

This was a concern to them. I interpret this as whatever.toShaderMaterial() or whatever.generateGLSLandUniforms() after which you get readable GLSL.

I think new Node( new OtherNode ( new YetAnotherNode( ... should not be written manually, as it feels that its just extra syntax over GLSL. Connecting these three and generating a JSON graph is a whole different thing, but then this kind of code is reserved for some kind of a loader.

OK, I’ll take a look at your PR when I have a bit of time.

Regarding eval(), I don’t think it’s that useful to discuss it further here since it’s not three.js related. You would be better off asking on JavaScript boards for more info, or there’s literally thousands of places around the web discussing it. I was just giving my opinion that if you submit PRs or suggest solutions that use eval(), they’re going to get rejected so you would be better off looking for other solutions.

Thank you!

I agree that submitting a PR for three.js with an eval would probably be a bad idea. However, submitting a use case "this only can work with eval() could indirectly influence the decision to copy the handlers, which in my view could be a good thing. Rather than taking articles such as the one above at face value, my ask (not demand) is for more people to:

![]()

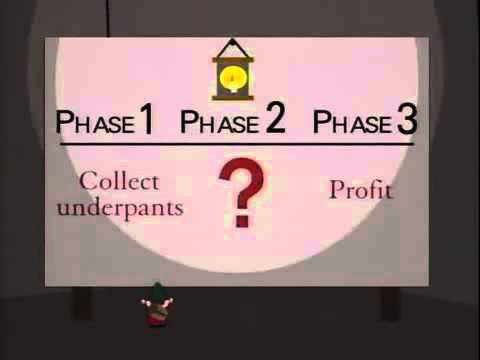

If you’re a fan of southpark:

I’m trying to figure out step 2 ![]()

Ok, I don’t mean to reject your PR out of hand and it probably contains a useful and elegant solution. But @mrdoob has expressed his opinion on it here

The design of the current material system is hacky and hard to maintain. I would rather not add things that make it even harder to maintain on top. I would, instead, focus on exploring

NodeMaterial.

Personally, I agree with this - it’s basically the same sentiment I’ve been expressing in my comments above. I think that NodeMaterial offers a considerable improvement over the current system and we should be focussing our efforts on that rather than trying to improve the current system.

Any work beyond bug fixes that we put into the current material system will be wasted effort when we switch to the new material system.

I know what has already been stated in there, but i’ve seen others run into bugs. The work is really minimal:

Instead of

const foo = getFoo(bar)

do:

const foo = bar.foo || getFoo(bar)

The pattern is present in three not just in the material system so NodeMaterial won’t fix all of these issues. It’s a one liner for the other issues, its several lines for the material system. The effort has already been put years ago, the switch to the material system is still uncertain (at least in timeline). Hard to fix bugs if they are deemed features

Shoot, i thought i was close this time

How to update uniforms and attributes with .onBeforeCompile() ?

P.S. Ah here solution:

var uId = { value : 1 };

var uDt = { value : 0 };

material.onBeforeCompile = function ( shader ) {

shader.uniforms.uId = uId;

shader.uniforms.uDt = uDt;

//...

}

//update

uId.value = 123;

uDt.value = performance.now();