First, some pictures.

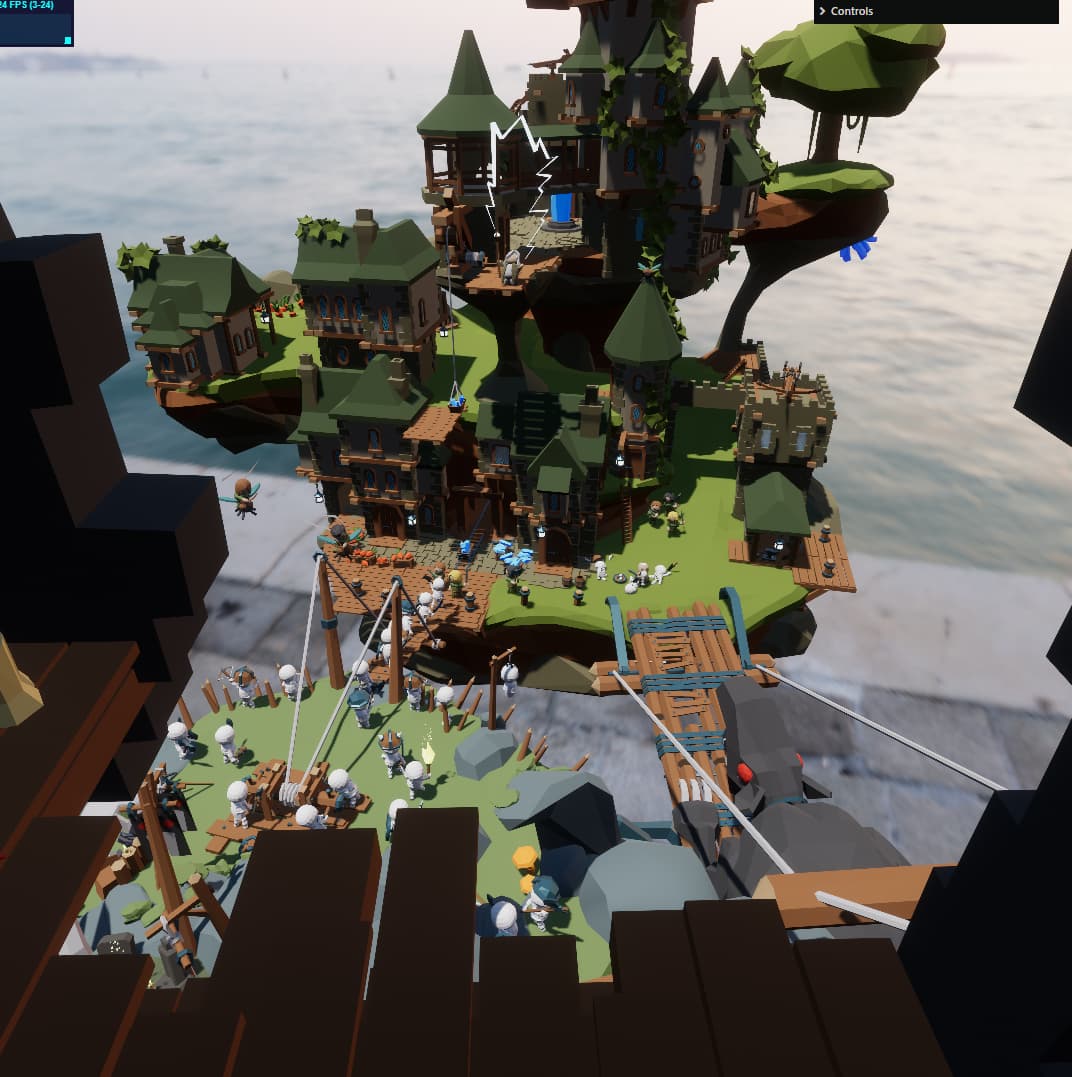

The last one is a Live Demo.

I’ve been a bit stuck on shadows while working on Shade. I didn’t want to go with traditional shadow maps, as they require parameter tweaking. I wanted a turn-key solution, where the user/developer doesn’t need to consider anything beyond “do I want shadows?”

Initially I thought about CSM (Cascaded Shadow Maps), but I dismissed them because, as wonderful as they are, they have a number of drawbacks. Namely, you need to rasterize a lot of pixels, which can be memory-heavy, and depending on your scene complexity, you’re potentially vertex-shader-bound. Lastly, CSM is still resolution-dependent: it’s close enough to traditional shadow mapping that you need to pick a resolution for each cascade and decide how many cascades you want.

Next, I looked into Virtual Shadow Maps, pioneered by Assassin’s Creed in the 2010s. In recent years, Unreal brought them back into the mainstream, pairing them with its software rasterizer and Nanite to make them a very compelling solution.

With VSM (Virtual Shadow Maps), I ran into the issue that WebGPU lacks multi-draw, and each tile of the shadow map is a separate rasterization pass. For a single simple shot, we might have close to a hundred such tiles. So VSM is a no-go as things currently stand. I thought about various hacks to circumvent this, but ultimately decided against them. VSM also still has a weird limitation: it requires you to specify overall shadow bounds, like with a traditional shadow map. With a resolution in the neighbourhood of 160,000 x 160,000 pixels, it’s not a major limitation, but it’s still there.

Next, I went for ray-traced shadows, and you can see a few demos with RTX shadows in the Shade topic. RTX shadows, I believe, will replace shadow maps entirely at some point, as they are better in every respect except for compute requirements. But we’re not there yet: hardware ray tracing (RTX) is not supported natively in WebGPU, and my implementation didn’t run fast enough for my liking.

So, coming back full circle: I recently watched a presentation on “Tiny Glade” by Tomasz Stachowiak, and he used CSM to great effect. Then I remembered the “Alan Wake 2” presentation from REAC 2024 and how they mitigated some of the issues with CSM as well, so I decided to give cascaded shadow maps a go.

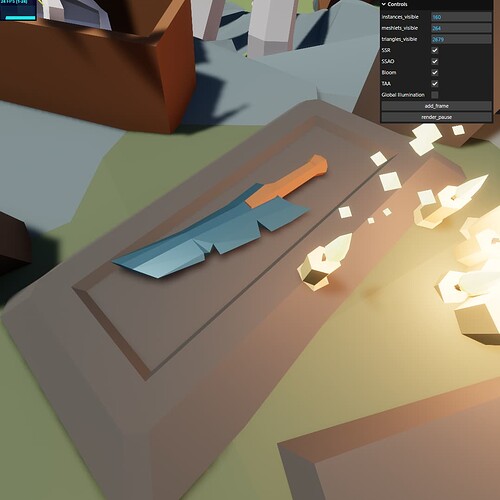

My basic plan was to achieve a technical solution similar to Tiny Glade’s: CSM with per-cascade TAA and PCSS contact hardening.

I already had a rasterization pipeline with occlusion culling, and I did the same as the Northlight guys (Alan Wake 2) by reusing that pipeline to render the CSM cascades. I dabbled a bit with PCSS and I’m pretty happy with the results, but there are a number of issues to resolve before it can be used in production. The TAA part isn’t there yet either.
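PCSS only gets a passing mention here. Its contact hardening comes from two steps: a blocker search that averages the depths of occluding shadow-map samples, and a penumbra estimate that grows with the receiver-to-blocker distance. The TypeScript below is a minimal sketch of those two steps for a directional light, not Shade’s implementation. The function names, the angular-radius parameterization, and the assumption that depths are linear world-space distances along the light direction are all illustrative.

```typescript
// Hypothetical helpers illustrating the two PCSS steps for a directional light.
// Assumption: all depths are linear distances along the light direction, in world
// units. An orthographic cascade projection keeps depth linear, but values must be
// rescaled from [0, 1] to the cascade's depth range first.

/** Step 1: average depth of the shadow-map samples that occlude the receiver. */
function averageBlockerDepth(
  samples: readonly number[],
  receiverDepth: number,
  bias = 0,
): number | null {
  let sum = 0;
  let count = 0;
  for (const depth of samples) {
    if (depth < receiverDepth - bias) {
      sum += depth;
      count++;
    }
  }
  // No blockers: the receiver is fully lit and filtering can be skipped.
  return count > 0 ? sum / count : null;
}

/**
 * Step 2: penumbra width for a light with the given angular radius (radians).
 * Width grows linearly with the receiver-to-blocker distance, which is what gives
 * contact hardening: shadows are sharp where the occluder touches the receiver.
 */
function directionalPenumbraWidth(
  receiverDepth: number,
  blockerDepth: number,
  angularRadius: number,
): number {
  return Math.max(0, receiverDepth - blockerDepth) * 2 * Math.tan(angularRadius);
}

/** Convert the world-space penumbra width into cascade texels (the PCF footprint). */
function penumbraInTexels(widthWorld: number, texelWorldSize: number): number {
  return widthWorld / texelWorldSize;
}
```

For example, a receiver 2 units behind its blocker under a light with a 0.01 rad angular radius gets a penumbra of about 0.04 world units, which on a cascade with 0.02-unit texels is a filter about 2 texels wide. Where the blocker touches the receiver, the width drops to 0.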

That said, my implementation differs from the usual approach in a few ways:

- I sample the nearest available cascade (the highest-resolution cascade that covers the point) instead of going purely by depth, as most solutions do. It might sound silly, but if you have a higher-resolution shadow map available, why sample the lower-res one? In my experience, doing this gets you ~20% of the shadowed pixels on screen sampling from a higher-resolution cascade, which is a massive win in my book. It’s almost like getting 20% extra resolution for free.

- Most solutions out there create a square frustum for each cascade, using the widest dimension of the frustum slice’s bounds. Many implementations I’ve seen even take the hypotenuse, which is larger still. This all but eliminates any possibility of a coverage gap in any cascade, but you sacrifice resolution. Again, in my experience, frustums can be much tighter, often 1/4 of the area of the conservative approach, which means you get roughly double the resolution per axis for your shadows. I use the tightest bounds possible.

- Stabilization. I used to think that stabilization was very important, but after reading Matt Pettineo’s musings on CSMs, I was struck by the following quote:

  > 2 years ago Andrew Lauritzen gave a talk on what he called “Sample Distribution Shadow Maps”, and released the sample that I mentioned earlier. He proposed that instead of stabilizing the cascades, we could instead focus on reducing wasted resolution in the shadow map to a point where effective resolution is high enough to give us sub-pixel resolution when sampling the shadow map.

  Thinking about it in a different way: if you have a wide-enough filter, you get temporally stable results. So I don’t stabilize cascades. Going beyond that, with a decent PCSS implementation the problem becomes even less significant, because the filter grows with the penumbra. In the current implementation I’m using a Catmull-Rom filter of size 5.

- Instead of using a texture array for CSM, I use an atlas. This is not a huge deal, really. I just wanted to build for the future, where more than just CSM is supported and I can draw all shadows into the same atlas for fast access during shading.
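The first bullet’s cascade selection can be sketched as a coverage test. Project the point with each cascade’s matrix, finest first, and take the first cascade that contains it, rather than comparing view depth against split distances. This is a hedged TypeScript illustration. The column-major matrix convention (matching WGSL’s `mat4x4<f32>`), the finest-first ordering, and the `margin` parameter for keeping the filter footprint inside the cascade are assumptions, not details from the post.

```typescript
// Column-major 4x4 matrix, matching WGSL's mat4x4<f32> memory layout.
type Mat4 = ArrayLike<number>;

// Transform a world-space point into normalized device coordinates.
function transformPoint(m: Mat4, x: number, y: number, z: number): [number, number, number] {
  const cx = m[0] * x + m[4] * y + m[8] * z + m[12];
  const cy = m[1] * x + m[5] * y + m[9] * z + m[13];
  const cz = m[2] * x + m[6] * y + m[10] * z + m[14];
  const cw = m[3] * x + m[7] * y + m[11] * z + m[15];
  return [cx / cw, cy / cw, cz / cw];
}

/**
 * Index of the first (finest) cascade whose projection contains the point, or -1
 * if none does. Cascades are assumed sorted from highest to lowest resolution.
 * `margin` (in NDC units) shrinks the accepted region so that the filter
 * footprint stays inside the chosen cascade.
 */
function selectCascade(
  projections: readonly Mat4[],
  x: number,
  y: number,
  z: number,
  margin = 0,
): number {
  const limit = 1 - margin;
  for (let i = 0; i < projections.length; i++) {
    const [nx, ny, nz] = transformPoint(projections[i], x, y, z);
    // WebGPU clip space: x and y in [-1, 1], depth in [0, 1].
    if (Math.abs(nx) <= limit && Math.abs(ny) <= limit && nz >= 0 && nz <= 1) {
      return i;
    }
  }
  return -1;
}
```

On the GPU, the same loop would run over `csm_metadata[i].projection`. A point that falls inside a fine cascade is never pushed to a coarser one just because its view depth crossed a split distance.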
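The area argument in the second bullet can be checked with a few lines of arithmetic. The sketch below assumes the slice’s eight corners are already in light view space. It compares the tight light-space rectangle with a square fitted to the widest dimension and with a square whose side is the rectangle’s diagonal (one reading of “the hypotenuse”). The names are hypothetical.

```typescript
// One corner of a cascade's frustum slice, already in light view space.
type Vec3 = [number, number, number];

interface CascadeBoundsArea {
  tight: number; // area of the light-space bounding rectangle (width * height)
  square: number; // square fitted to the widest dimension
  hypotenuse: number; // square whose side is the rectangle's diagonal
}

function cascadeBoundsAreas(corners: readonly Vec3[]): CascadeBoundsArea {
  let minX = Infinity;
  let maxX = -Infinity;
  let minY = Infinity;
  let maxY = -Infinity;
  for (const [x, y] of corners) {
    minX = Math.min(minX, x);
    maxX = Math.max(maxX, x);
    minY = Math.min(minY, y);
    maxY = Math.max(maxY, y);
  }
  const w = maxX - minX;
  const h = maxY - minY;
  const side = Math.max(w, h);
  return { tight: w * h, square: side * side, hypotenuse: w * w + h * h };
}

// Geometric-mean per-axis resolution gain of the tight fit over another fit,
// both rendered into a shadow map of the same texel count.
function linearResolutionGain(tightArea: number, otherArea: number): number {
  return Math.sqrt(otherArea / tightArea);
}
```

For a slice spanning 4 × 1 units in light space, the tight fit covers 4 square units versus 16 for the square fit and 17 for the diagonal fit. The tight fit is therefore 1/4 of the square’s area, a 2× gain in geometric-mean resolution per axis. That matches the “1/4 of the area, double the resolution” estimate in the bullet. Rendered into a square patch, the gain is uneven between axes (here x is unchanged and y is 4× finer).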
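The stabilization bullet doesn’t say how the size-5 Catmull-Rom filter is laid out. One plausible reading, sketched here as an assumption rather than Shade’s actual filter, is a 5 × 5 texel window applied to depth-comparison results. Its weights come from the Catmull-Rom kernel (the Keys cubic with a = -0.5), stretched slightly so its support covers the window, then normalized. `visibility` is a hypothetical callback standing in for the shadow-map comparisons.

```typescript
// Catmull-Rom kernel (Keys cubic with a = -0.5); support is |x| < 2.
function catmullRom(x: number): number {
  const a = Math.abs(x);
  if (a < 1) return 1.5 * a ** 3 - 2.5 * a ** 2 + 1;
  if (a < 2) return -0.5 * a ** 3 + 2.5 * a ** 2 - 4 * a + 2;
  return 0;
}

/**
 * Normalized weights for 5 taps at integer texel offsets -2..2 around the texel
 * nearest to the sample. `frac` in [-0.5, 0.5] is the sample's offset from that
 * texel's centre. `scale` stretches the kernel (1.25 makes its support span the
 * whole 5-texel window). Both conventions are assumptions for illustration.
 */
function catmullRomWeights5(frac: number, scale = 1.25): number[] {
  const weights: number[] = [];
  let sum = 0;
  for (let i = -2; i <= 2; i++) {
    const w = catmullRom((i - frac) / scale);
    weights.push(w);
    sum += w;
  }
  return weights.map((w) => w / sum);
}

/**
 * Tensor-product 5x5 filter over depth-comparison results. `visibility(dx, dy)`
 * returns 1 (lit) or 0 (shadowed) for the texel at that offset. The result is
 * clamped because Catmull-Rom has negative lobes.
 */
function filterShadow(
  fracX: number,
  fracY: number,
  visibility: (dx: number, dy: number) => number,
): number {
  const wx = catmullRomWeights5(fracX);
  const wy = catmullRomWeights5(fracY);
  let result = 0;
  for (let j = 0; j < 5; j++) {
    for (let i = 0; i < 5; i++) {
      result += wx[i] * wy[j] * visibility(i - 2, j - 2);
    }
  }
  return Math.min(1, Math.max(0, result));
}
```

The negative lobes are what keep Catmull-Rom sharper than a tent or Gaussian of the same width. They can also push the weighted sum slightly outside [0, 1] near shadow edges, hence the clamp.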
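For the atlas in the last bullet, each cascade needs a mapping from its own clip space into its region of the atlas, which is presumably what `atlas_patch` in the metadata struct encodes. The sketch assumes `atlas_patch` holds `(u0, v0, width, height)` in normalized atlas UVs and lays the cascades out as an equal-sized grid. Both the packing and the helper names are hypothetical.

```typescript
// Hypothetical atlas layout: (u0, v0, width, height) in normalized atlas UVs.
// The post only names the atlas_patch field; this packing is an assumption.
type AtlasPatch = [number, number, number, number];

// Lay out `count` equally sized patches in a grid (4 cascades -> 2x2).
function gridPatches(count: number): AtlasPatch[] {
  const cols = Math.ceil(Math.sqrt(count));
  const rows = Math.ceil(count / cols);
  const patches: AtlasPatch[] = [];
  for (let i = 0; i < count; i++) {
    const col = i % cols;
    const row = Math.floor(i / cols);
    patches.push([col / cols, row / rows, 1 / cols, 1 / rows]);
  }
  return patches;
}

// Convert a cascade clip-space position (x, y in [-1, 1]) to atlas UVs.
// WebGPU texture V points down while clip-space Y points up, hence the flip.
function clipToAtlasUv(patch: AtlasPatch, clipX: number, clipY: number): [number, number] {
  const u = clipX * 0.5 + 0.5;
  const v = 0.5 - clipY * 0.5;
  return [patch[0] + u * patch[2], patch[1] + v * patch[3]];
}
```

In WGSL, the lookup is the same one-liner, `atlas_patch.xy + uv * atlas_patch.zw`. A real atlas would usually also inset each patch by a texel or so, so that filter taps near the edge don’t bleed into a neighbouring cascade.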

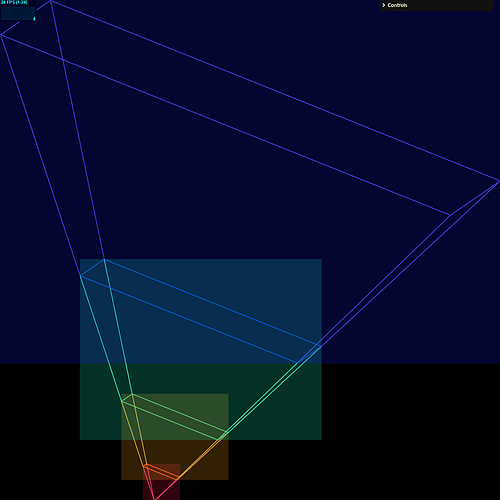

My GPU metadata for CSM looks very simple, something like this:

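```wgsl
// Compile-time cascade count.
const CSM_CASCADE_COUNT: u32 = 4u;

struct CascadeMetadata {
    // Region of the shadow atlas this cascade occupies.
    atlas_patch: vec4<f32>,
    // Light view-projection for this cascade.
    projection: mat4x4<f32>,
}

// Group/binding indices are placeholders.
@group(0) @binding(0) var<uniform> csm_metadata: array<CascadeMetadata, CSM_CASCADE_COUNT>;
```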

where CSM_CASCADE_COUNT is a compile-time constant. In the demo it’s 4.
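On the CPU side, the struct has to be written with the layout WGSL expects. Both members are 16-byte aligned (`vec4<f32>` is 16 bytes, `mat4x4<f32>` is 64), so each element is 80 bytes with no padding, and 80 is a multiple of 16 as uniform-buffer arrays require. The TypeScript sketch below packs that layout into a `Float32Array`. `CascadeCpu` and `packCascadeMetadata` are hypothetical names, and the projection is assumed to be stored column-major.

```typescript
// Mirror of the WGSL CascadeMetadata struct:
// atlas_patch (4 floats) followed by projection (16 floats) = 20 floats = 80 bytes.
const FLOATS_PER_CASCADE = 20;

interface CascadeCpu {
  atlasPatch: [number, number, number, number];
  projection: ArrayLike<number>; // 16 floats, column-major like mat4x4<f32>
}

function packCascadeMetadata(cascades: readonly CascadeCpu[]): Float32Array {
  const data = new Float32Array(cascades.length * FLOATS_PER_CASCADE);
  cascades.forEach((cascade, i) => {
    if (cascade.projection.length !== 16) {
      throw new Error("projection must contain 16 floats");
    }
    const base = i * FLOATS_PER_CASCADE;
    data.set(cascade.atlasPatch, base); // offset 0 bytes within the element
    data.set(cascade.projection, base + 4); // offset 16 bytes within the element
  });
  return data;
}
```

The result can be uploaded with `device.queue.writeBuffer(buffer, 0, data)` into a buffer created with `GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST` and at least `CSM_CASCADE_COUNT * 80` bytes.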

If you check out the Live Demo, please let me know how it runs for you and what hardware/resolution you’ve got.