I took a look at it straight away. Someone on the forum recently asked about a blur effect, and the new node is a better solution than the old way.

I see that both of your examples use a geometry for this. I imagine that a PostFXNode, to which post-processing materials could be added, would work quite well. Such a PostFXNode would always be fullscreen, so you wouldn't need to create any geometry yourself. I also like that you don't have to run a separate composer render after the scene has been rendered. I think the post-processing setup in WebGL has put many people off from ever dealing with it; the nodes are much easier to work with.

Another reason for a PostFXNode or ComposerNode would be the easy stackability of post-processing effects.

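```js
// Proposed API (PostFXNode does not exist yet): effects are applied in the order they are added
PostFXNode.add(myFirstPostEffectMaterial);
PostFXNode.add(mySecondPostEffectMaterial);
PostFXNode.add(myThirdPostEffectMaterial);

scene.add(PostFXNode);
```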
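To make the stacking idea concrete, here is a minimal plain-JavaScript sketch of what such a stack might do internally, assuming the usual ping-pong approach: each effect reads the previous effect's output and writes into a second buffer, then the two buffers swap. `PostFXStack`, `brighten` and `invert` are hypothetical names, and the "textures" are just Float32Arrays of grayscale pixels so the ordering logic can run without a GPU.

```javascript
// Hypothetical sketch: a stack of full-screen effects applied in order,
// using two ping-pong buffers the way a GPU post-processing chain would.
class PostFXStack {
  constructor(width, height) {
    this.width = width;
    this.height = height;
    this.effects = [];
  }

  // Effects run in the order they were added.
  add(effect) {
    this.effects.push(effect);
    return this;
  }

  // sceneColor: Float32Array holding the rendered scene (one value per pixel).
  render(sceneColor) {
    let read = Float32Array.from(sceneColor); // input of the current pass
    let write = new Float32Array(read.length); // output of the current pass
    for (const effect of this.effects) {
      for (let i = 0; i < read.length; i++) {
        const x = i % this.width;
        const y = Math.floor(i / this.width);
        // Like a fragment shader: compute one output pixel from the input buffer.
        write[i] = effect(read, x, y, this.width, this.height);
      }
      [read, write] = [write, read]; // swap: this output feeds the next effect
    }
    return read;
  }
}

// Two hypothetical effects: brighten (clamped to 1), then invert.
const brighten = (src, x, y, w) => Math.min(1, src[y * w + x] * 2);
const invert = (src, x, y, w) => 1 - src[y * w + x];

const stack = new PostFXStack(2, 1).add(brighten).add(invert);
console.log(stack.render(new Float32Array([0.1, 0.4])));
```

Swapping the order of the two `add` calls changes the result (inverting first and then brightening clamps both pixels to 1), so the order in which effects are added is part of the effect itself.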

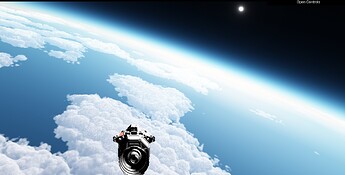

This was one of my first attempts at it. To make it run on my tablet, I had to greatly reduce the ray tracing depth for the clouds, hence their poor quality (too few integration steps).
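The quality loss from too few integration steps is easy to see in isolation. Below is a minimal sketch (not the actual cloud shader) that marches a single view ray through an assumed thin, uniform cloud layer and integrates optical depth with the midpoint rule, then compares the transmittance `exp(-tau)` with the exact value. The layer position, the extinction coefficient `SIGMA` and the step counts are made up for illustration.

```javascript
// Minimal ray-marching sketch (illustrative only): optical depth along one
// view ray through a thin, uniform cloud layer, integrated with N steps.
const SIGMA = 2.0; // assumed extinction coefficient inside the cloud
const CLOUD_START = 4.2; // assumed cloud layer along the ray: [start, end]
const CLOUD_END = 4.8;
const RAY_LENGTH = 10.0;

// Extinction at distance t along the ray.
function density(t) {
  return t >= CLOUD_START && t <= CLOUD_END ? SIGMA : 0.0;
}

// Midpoint-rule integration of the extinction: one sample per step.
function opticalDepth(steps) {
  const dt = RAY_LENGTH / steps;
  let tau = 0.0;
  for (let i = 0; i < steps; i++) {
    tau += density((i + 0.5) * dt) * dt;
  }
  return tau;
}

const exact = SIGMA * (CLOUD_END - CLOUD_START); // analytic optical depth
for (const steps of [4, 16, 256]) {
  const tau = opticalDepth(steps);
  // Transmittance exp(-tau): a value of 1 means the cloud is invisible.
  console.log(steps, tau.toFixed(4), Math.exp(-tau).toFixed(4));
}
console.log("exact", exact.toFixed(4), Math.exp(-exact).toFixed(4));
```

With 4 steps every sample misses the layer and the cloud disappears completely, with 16 steps the single hit overestimates it, and only the fine march gets close to the exact value. Tricks such as jittering the ray start are commonly used to hide the banding, but the step count still trades quality for speed.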

What I’m getting at is this: I first apply a cloud post-processing pass, and because clouds are also affected by the atmosphere, I apply an atmospheric post-processing pass on top of it. I would later have written a particle emitter for the engine, but I thought another post-processing shader would look better. I haven’t implemented cloud shadows yet either, but those would be part of the cloud shader.

On the horizon, you can clearly see how the atmosphere strongly scatters the light from the clouds, so that they can hardly be seen.
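The horizon effect can be approximated very simply: the cloud color is attenuated by the atmosphere's transmittance along the view ray and blended with in-scattered sky light. The sketch below uses a single Beer-Lambert extinction term with a constant coefficient `BETA` (an assumption; a real atmosphere shader integrates Rayleigh and Mie scattering with height-dependent density). It is plain JavaScript mirroring what a GLSL `mix` would do in the atmosphere pass.

```javascript
// Simplified aerial perspective: blend a cloud color toward the sky color
// as the distance through the atmosphere grows.
const BETA = 0.05; // assumed constant extinction coefficient per km

// Same as GLSL mix(): a * (1 - t) + b * t, per component.
function mix(a, b, t) {
  return a.map((v, i) => v * (1 - t) + b[i] * t);
}

function applyAtmosphere(cloudColor, skyColor, distanceKm) {
  const transmittance = Math.exp(-BETA * distanceKm); // Beer-Lambert law
  // Cloud light that survives the path, plus sky light scattered into the ray.
  return mix(skyColor, cloudColor, transmittance);
}

const cloud = [1.0, 1.0, 1.0];
const sky = [0.55, 0.7, 0.95];
for (const d of [1, 20, 100]) {
  console.log(d, applyAtmosphere(cloud, sky, d).map((c) => c.toFixed(3)));
}
```

Because this pass runs after the cloud pass, clouds that are far away near the horizon converge to the sky color, which is the fading visible in the screenshot.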

Same here: you can clearly see the two post-processing shaders layered on top of each other.

By the way, that’s also why I started with an ocean. A plain blue surface on its own isn’t very nice.

On my desktop with a Radeon RX 7900 XTX I can render the clouds in excellent quality, but the trick is to get by with few resources. I can fly through the clouds, and I really like the effect.

I don’t know whether a separate material class would be practical for this. My post-processing fragment shaders are very extensive and complex, but the vertex shader is always very simple:

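```js
// Post-processing vertex shader: no position or normal attribute is read.
// The clip-space position is derived from the uv attribute alone,
// mapping uv in [0, 1] to clip space [-1, 1].
export const atmosphereVS = `
out vec2 vUv;

void main() {
  vUv = uv;
  gl_Position = vec4( (uv - 0.5) * 2.0, 0.0, 1.0 );
}
`;
```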

That’s why I always got a warning about a missing normal attribute when I used MeshBasicNodeMaterial with the composer: MeshBasicNodeMaterial has a normal attribute, but the composer doesn’t provide one, since normals aren’t needed in post-processing.
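Since the vertex shader derives the clip-space position from `uv` alone, the shader itself never touches normals. The plain-JavaScript sketch below checks two things under stated assumptions: that `(uv - 0.5) * 2.0` turns an assumed fullscreen triangle with uvs (0, 0), (2, 0), (0, 2) into clip-space corners that cover the whole screen, and how a material that expects a `normal` attribute ends up reporting it as missing. `fullscreenTriangle`, `uvToClip` and `missingAttributes` are hypothetical helpers, and the attribute lists are illustrative, not taken from three.js internals.

```javascript
// Same mapping as the vertex shader: uv in [0, 1] -> clip space [-1, 1].
function uvToClip([u, v]) {
  return [(u - 0.5) * 2.0, (v - 0.5) * 2.0];
}

// Hypothetical fullscreen triangle: its uvs overshoot to 2 so that the part
// inside [0, 1] x [0, 1] covers the whole screen. It carries no normals.
const fullscreenTriangle = {
  attributes: { uv: [[0, 0], [2, 0], [0, 2]] },
};

// Attributes a material reads but the geometry does not provide
// (the situation behind the "missing normal attribute" warning).
function missingAttributes(requiredByMaterial, geometry) {
  return requiredByMaterial.filter((name) => !(name in geometry.attributes));
}

console.log(fullscreenTriangle.attributes.uv.map(uvToClip));
console.log(missingAttributes(["uv"], fullscreenTriangle)); // post-FX style shader
console.log(missingAttributes(["uv", "normal"], fullscreenTriangle)); // mesh-style material
```

The triangle's clip-space corners are (-1, -1), (3, -1) and (-1, 3), so its hypotenuse passes through (1, 1) and the visible square is fully covered with a single primitive.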

The stackability and not needing a geometry are good features of the composer. I think they’re worth adopting.

I hope you don’t mind that I often go into a bit more detail. Three.js offers a huge range of possibilities, and I’m probably one of the few people who use it so extensively. But it’s all these powerful tools that I find so exciting about three.js.